Stand up right now and walk around for a minute. We’re pretty sure you didn’t see everywhere you stepped nor did you plan each step meticulously according to visual input. So why should robots do the same? Wouldn’t your robot be more versatile if it could use its vision to plan a path, but leave most of the walking to the legs with the help of various sensors and knowledge of joint positions?

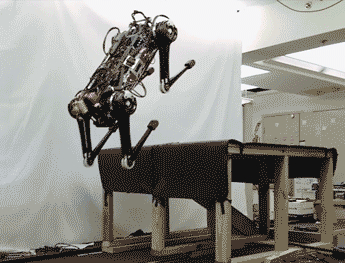

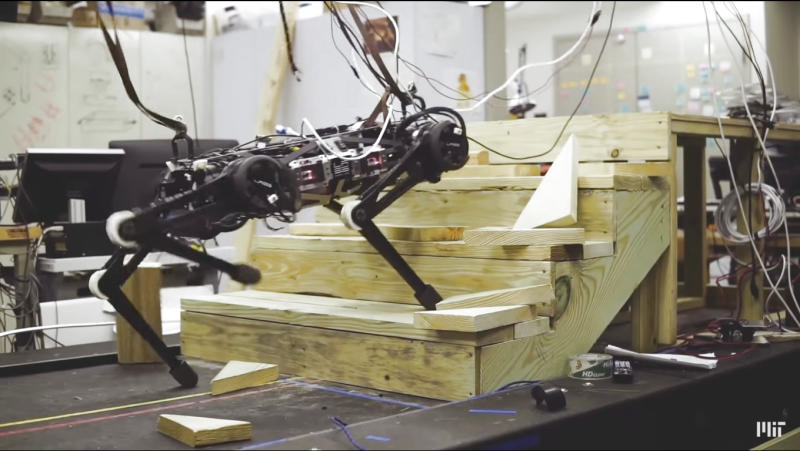

That’s the approach [Sangbae Kim] and a team of researchers at MIT are taking with their Cheetah 3. They’ve given it cameras but aren’t using them yet. Instead, they’re making sure it can move around blind first. So far they have it walking, running, jumping and even going up stairs cluttered with loose blocks and rolls of tape.

Two algorithms are at the heart of its being able to move around blind.

The first is a contact detection algorithm which decides whether each leg should transition between a swing and a step, based on knowledge of the joint positions and data from gyroscopes and accelerometers. If the robot tilts unexpectedly because it stepped on a loose block, this is the algorithm which decides what the legs should do.
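To make the idea concrete, here is a minimal Python sketch of a swing/step decision. It is not the MIT team's code: the `LegReading` fields, the thresholds, and the equal weighting of cues are all assumptions chosen for illustration. It only shows how joint-derived foot height, an accelerometer jolt, and a gyroscope tilt rate could be blended into one decision.

```python
from dataclasses import dataclass


@dataclass
class LegReading:
    """Hypothetical per-leg sensor snapshot (all field names are illustrative)."""
    foot_height_m: float    # foot height above expected ground, from joint positions
    accel_jolt_ms2: float   # size of the acceleration spike seen by the accelerometer
    tilt_rate_rad_s: float  # body tilt rate reported by the gyroscope


def clamp01(x: float) -> float:
    """Limit a value to the range 0..1."""
    return max(0.0, min(1.0, x))


def contact_score(r: LegReading) -> float:
    """Blend two cues into a rough 0..1 'the foot is on something' score."""
    p_height = clamp01(1.0 - r.foot_height_m / 0.05)  # assumed 5 cm scale
    p_impact = clamp01(r.accel_jolt_ms2 / 20.0)       # assumed 20 m/s^2 scale
    return 0.5 * p_height + 0.5 * p_impact


def next_leg_state(current: str, r: LegReading) -> str:
    """Return 'swing' or 'stance' for the next control tick."""
    score = contact_score(r)
    # Assumption: an unexpectedly fast tilt means the robot was disturbed,
    # so a swinging leg should commit to the ground on weaker evidence.
    disturbed = abs(r.tilt_rate_rad_s) > 1.0
    if current == "swing":
        threshold = 0.4 if disturbed else 0.7
        return "stance" if score >= threshold else "swing"
    # In stance, stay planted unless contact is clearly lost.
    return "swing" if score < 0.2 else "stance"
```

With these made-up numbers, a foot that brushes a loose block mid-swing (2 cm high, modest jolt) keeps swinging when the body is level but is planted early when the gyroscope reports a sudden tilt.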

The second is a model-predictive algorithm. This predicts what force a leg should apply once the decision has been made to take a step. It does this by calculating the multiplicative positions of the robot’s body and legs a half second into the future. These calculations are done 20 times a second. They’re what help it handle situations such as when someone shoves it or tugs it on a leash. The calculations enable it to regain its balance or continue in the direction it was headed.
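The numbers above (a half-second look-ahead, recomputed 20 times a second) map neatly onto a receding-horizon loop. The Python sketch below is a toy under heavy assumptions, not the Cheetah 3 controller: it treats the robot as a point mass moving along one axis, tries a handful of constant candidate forces instead of solving a proper optimization, and uses an assumed mass and force limit.

```python
DT = 0.05           # 20 updates per second, as in the article
HORIZON_STEPS = 10  # 10 steps * 0.05 s = half a second of look-ahead


def rollout_cost(v0: float, force: float, mass: float, v_target: float) -> float:
    """Simulate the point mass over the horizon and score one candidate force."""
    v = v0
    cost = 0.0
    for _ in range(HORIZON_STEPS):
        v += (force / mass) * DT       # predicted velocity one step ahead
        cost += (v - v_target) ** 2    # penalize drifting from the target
    cost += 1e-5 * force ** 2          # small penalty on effort (assumed weight)
    return cost


def plan_force(v0: float, mass: float = 45.0, v_target: float = 0.0,
               f_max: float = 200.0, n_candidates: int = 41) -> float:
    """Pick the candidate force with the lowest predicted cost."""
    step = 2.0 * f_max / (n_candidates - 1)
    candidates = [-f_max + step * i for i in range(n_candidates)]
    return min(candidates, key=lambda f: rollout_cost(v0, f, mass, v_target))


def simulate_shove(v_after_shove: float, seconds: float = 2.0,
                   mass: float = 45.0) -> list:
    """Replan every DT seconds after a shove; return the velocity history."""
    v = v_after_shove
    history = [v]
    for _ in range(round(seconds / DT)):
        force = plan_force(v, mass)    # only the first planned step is applied
        v += (force / mass) * DT
        history.append(v)
    return history
```

Calling `simulate_shove(1.0)` models a shove that leaves the body moving sideways at 1 m/s. Each tick the planner looks half a second ahead, applies only the first step of its plan, and then replans, which is the defining habit of model-predictive control. The sideways velocity drops to a small residual set by the coarse 10 N spacing of the candidate forces.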

There are a number of other awesome features of this quadruped robot which we haven’t seen in others such as Boston Dynamics’ SpotMini, like invertible knee joints and walking on three legs. Check out those features and more in the video below.

Of course, SpotMini has a whole set of neat features of its own. Let’s just say that while they look very similar, they’re on two different evolutionary paths. And the Cheetah certainly has evolved since we last looked at it a few years ago.

Our thanks to [Qes] for sending in this tip.

That jumping onto a desk left me speechless, I really didn’t expect that!

Especially since the video states it jumps 30 inches high (76cm) and not 30cm.

Fixed. Thanks!

It acts pretty animal-like.

Impressive.

“Stand up right now and walk around for a minute. We’re pretty sure you didn’t see everywhere you stepped nor did you plan each step meticulously according to visual input. So why should robots do the same?”

…This statement is completely false. The human brain does all these things automatically, just like a robot. Also, a lot of our thought processes occur the same way and often ‘pop out’ as the ‘light bulb’ moment when the answer just ‘presents itself’ to us. Some of us with more infantile minds might attribute this to ‘God talking to us’.

The thought processes that we are actually aware of constitute only a tiny fraction of the actual thought processes that are going on in the background. A good example is when we’re trying to solve a tricky math / engineering problem. The best approach is to read the problem, try and get a basic grasp of the intricacies, check that we’ve read the whole problem correctly, see if there’s an easy solution … and if not … ‘sleep on it’ for a while or look at a different problem for a while.

Our mind builds a model which is what we walk around in.

I just stepped away from the computer for a while to wash dishes and I tend to walk around while drying them. I used my vision to navigate the rooms but I didn’t see the floor most of the time, or even the floor ahead of me due to whatever I was drying at the time. I went between rooms with different floorings and even on and off a carpet. At one point I approached a window to look out, again my view of the floor was blocked by the stuff I was carrying and my toe hit something on the floor. I stopped to look outdoors, but I’m not sure if I would have taken one more step had the object not been there. My brain did all this footstep processing and adapting to what my feet encountered automatically, just as you describe.

Granted, this was my place, which I’m very familiar with, so maybe my mind was using the model, as Ostracus said, but surely as I stepped partly onto the unseen carpet my feet sensed it and, probably using an internal model of carpets, understood that I could put pressure on it without hurting myself. Feedback also told me when to stop.

As for the ‘sleep on it’ trick, I do the same sometimes. Working on something else also helps.

Blind people do it all the time.

Tactile feedback from your surroundings helps you walk on ice, wet floors, and unstable ground. Proprioception also keeps you from tripping over yourself while recovering from a fall, finding a step in the dark or when your vision is blocked. The phrasing may be a bit clumsy but it’s not completely false. You do plan your steps based on visual input, when you have it, but it’s not necessary.

Sometimes all you need is the word: proprioception. Thanks!

Tidbit from https://en.wikipedia.org/wiki/Proprioception, “Secondary endings of muscle spindles detect changes in muscle length, and thus supply information regarding only the sense of position. Essentially, muscle spindles are stretch receptors”. We see those all the time in flex and stretch sensors in 3D printed hands to roughly determine the finger positions e.g. https://hackaday.com/2016/10/11/hackaday-prize-entry-handson-gloves-speaks-sign-language/.

Body sense for the layman.

Proprioception, the rotary encoder of the biologic world.

The perfect thing for killing people…if you are into that kind of thing.

How will that tool best be used?

Also, I am against the idea of showing the combined efforts of a university on one of the few websites that would otherwise feature the work of individuals and small grass-roots groups.

I had more of a “Mr Cheetah goes to Washington” feel.

In the UK, the likes of George Monbiot of the Guardian newspaper advocate the re-introduction of top predators, i.e. wolves / big cats. Problem is fencing – how to stop the big cats jumping the fence into your neighbour’s sheep farm. Mr Cheetah could no doubt be geo-fenced and be trained to catch browsing herbivores, skin them, butcher them and bring back the meat all nicely jointed for Sunday roast.

And how many ranches could afford to buy, let alone maintain, Mr. Cheetos? Probably only the very largest. We are also talking about a field robot that will be dirty and muddy, working at night and dealing with rain, and that must be correct in its decisions 100% of the time. That’s a very tall order that I doubt the developers of Mr. Cheetos can meet.

I look at Boston Dynamics: Google bought them, then dumped them. That pretty much tells you this stuff is far away from being commercially viable.

From the cited article: “Cheetah 3 is designed to do versatile tasks such as power plant inspection,… I think there are countless occasions where we [would] want to send robots to do simple tasks instead of humans. Dangerous, dirty, and difficult work can be done much more safely through remotely controlled robots.”

We often report on interesting and inspiring work from Universities. With the recent surge of hackers making just these sorts of quadruped robots, and many likely inspired by them, the algorithms here may be useful or at least provide food-for-thought. Having watched a lot of James Bruton’s work, it certainly seems the sort of algorithm he might make use of (https://hackaday.com/2018/06/11/james-bruton-is-making-a-dog-opendog-project/).

There’s also the idea of them as a part of telepresence, and AR/VR.

Yep, if fielded they will be very much high-ticket items that require very expensive maintenance. That leaves the military as the prime user of this monstrosity. They’d probably use it to assassinate people, maybe as a suicide bomber. Imagine the terror of seeing this abortion running toward you while carrying 30 lbs of TNT.

I don’t see it being used in industry unless the costs are very competitive with current solutions. This is not just a one-off purchase. It is an ongoing expense.

Apparently the US military did trials with the Boston Dynamics version but ditched the idea as the bots were far too noisy. The dog would have to spot a twig on the ground, or better still, ‘feel’ it with its paw before stepping on it with full body weight.

Boston Dynamics is planning to try again, this time selling its SpotMini in 2019. They don’t give a price in the video.

[youtube=https://www.youtube.com/watch?v=sp_d-LeJjis&w=470&h=295]

Geeks always going for the harder option. A Hitman is a lot cheaper and just as effective.

Your local “hitman” may be cheap but you cannot be sure they will succeed, escape or implicate you. Robots only do exactly what they are programmed to do and are completely expendable while being easy to replace. On top of that, purely electric robots have the minimal requirement of needing electricity which can be collected by simply staying idle in sunlight.

If Cheetah 3 met SpotMini, would it be a cat & dog fight?