[Ben Eater] has been working on building computers on breadboards for a while now, alongside doing a few tutorials and guides as YouTube videos. A few enterprising hackers have already duplicated [Ben]’s efforts, but so far all of these builds are just a bunch of LEDs and switches. The next frontier is a video card, albeit one capable of displaying only thousands of pixels, from circuitry built entirely on a breadboard.

The video begins with a review of VGA standards, eventually settling on an 800×600 resolution display with 60 Hz timing. The pixel clock of this video card is being clocked down from 40 MHz to 10 MHz, and the resulting display will have a resolution of 200×150. That’s good enough to display an image, but first [Ben] needs to get the horizontal timing right. This means a circuit to count pixels, and inject the front porch, sync pulse, and back porch at the end of each horizontal line.
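The arithmetic behind the slower clock can be sketched in a few lines of Python. The 40 MHz segment lengths below are the commonly published figures for 800×600 at 60 Hz and are an assumption here, since the article does not list them. The function names are made up for illustration.

```python
# Horizontal timing for 800x600 @ 60 Hz, in 40 MHz pixel clocks.
# Commonly published figures, assumed here; not taken from the article.
H_TIMING_40MHZ = {"visible": 800, "front_porch": 40, "sync": 128, "back_porch": 88}

def divide_timing(timing, divisor):
    """Scale every segment down for a slower pixel clock."""
    for name, clocks in timing.items():
        if clocks % divisor:
            raise ValueError(f"{name} ({clocks}) is not divisible by {divisor}")
    return {name: clocks // divisor for name, clocks in timing.items()}

def boundaries(timing):
    """Counter value at which each segment ends."""
    total = 0
    ends = {}
    for name, clocks in timing.items():
        total += clocks
        ends[name] = total
    return ends

h_10mhz = divide_timing(H_TIMING_40MHZ, 4)
print(h_10mhz)              # segment lengths in 10 MHz clocks
print(boundaries(h_10mhz))  # counts the counter has to detect
```

Under those assumptions the segment boundaries land on 200, 210, 242 and 264, which are the counts the horizontal circuit has to recognise.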

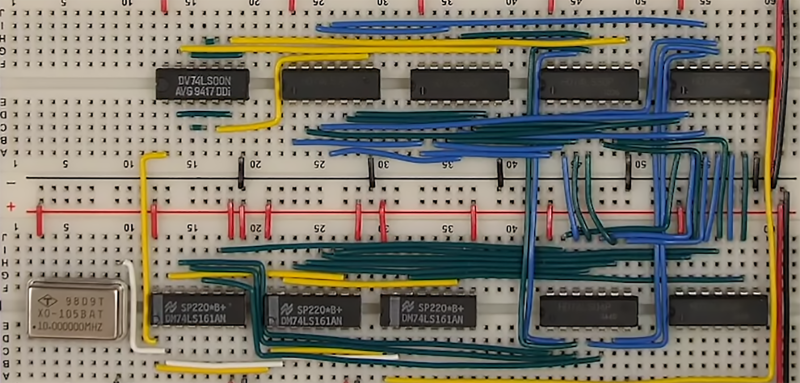

To generate a single horizontal line, [Ben]’s circuit first has to count out 200 pixels, send a blanking interval, then set the sync low, and finally another blanking interval before rolling down to the next line. This is done with a series of 74LS161 binary counters set up to simply count from 0 to 264. To generate the front porch, sync, and back porch, a trio of 8-input NAND gates are set up to send a low signal at the relevant point in a horizontal scan line.
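As a rough software model of that sequence (not [Ben]’s actual circuit), the following sketch steps a counter through one line and flips two flags at the decoded counts. The active-low sync follows the wording above, and the flag names are hypothetical.

```python
from itertools import groupby

def scan_line(visible=200, sync_start=210, sync_end=242, total=264):
    """Yield (count, blank, hsync) for each pixel clock of one line."""
    blank = False   # True while no pixel data should be shown
    hsync = True    # idles high, pulled low during the sync pulse (assumed polarity)
    count = 0
    while True:
        if count == visible:
            blank = True
        if count == sync_start:
            hsync = False
        if count == sync_end:
            hsync = True
        if count == total:
            return  # counter is cleared; the next line starts again at 0
        yield count, blank, hsync
        count += 1

line = list(scan_line())

# Summarise the line as runs of identical (blank, hsync) states.
for (blank, hsync), group in groupby(line, key=lambda s: (s[1], s[2])):
    counts = [c for c, _, _ in group]
    level = "high" if hsync else "low"
    print(f"{counts[0]:3d}-{counts[-1]:3d}  blank={blank!s:5}  hsync={level}")
```

The summary shows four runs: visible pixels, front porch, sync pulse, and back porch.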

The entire build takes up four solderless breadboards and uses twenty logic chips, but this isn’t done yet: all this confabulation of chips and wires does is step through the pixel data for the horizontal and vertical lines. A VGA monitor detects it’s in the right mode, but there’s no actual data — that’ll be the focus of the next part of this build where [Ben] starts pushing pixels to a monitor.

Ben Eater has a real talent for explaining complex concepts clearly and eloquently, I loved this vid. His 8 bit CPU videos are also fantastic.

+1

I’ve seen much of his content in school or out in the field, but it’s been a long time. The refreshers are extremely helpful.

I’ve also had a very hard time wrapping my head around CRCs. [Ben Eater] has clearly explained the concepts in a way that brings CRCs within grasping distance. I can almost see how to implement CRCs in firmware.

I just need to see how he implements CRCs using simple logic gates

I agree with Wibble that Ben Eater has a knack of explaining working with TTL to an audience who perhaps has never encountered such technology before.

I had rather hoped that the first video would have concluded with generating colour bars test pattern on the VGA monitor – but perhaps he’s leaving that excitement for the next episode.

It will be interesting to see the subsequent parts of this video series – I rather hope that the conclusion is a direct connection of this “video card” to his 8-bit computer – showing how video output can be achieved from a few chips and some clever coding.

You don’t need 8-wide nand gates, nor the inverters, you only need to check for the 1’s, since these are up-counters.

I’m pretty sure that configuration of 1’s can show up for multiple different possible numbers that could be encountered by the circuit.

nope, it’d be the same deal as not having to check the lsb because it would reset before it got there.

256: “100000000”

257: “100000001”

258: “100000010”

259: “100000011”

260: “100000100”

261: “100000101”

262: “100000110”

263: “100000111”

264: “100001000”

so, probably just need an and on bits 4 and 9.

The important numbers are:

200: 011001000 – Display Pixel Count

210: 011010010 – Front Porch

242: 011110010 – Horizontal Sync Pulse End.

264: 100001000 – Back Porch

While you are only looking at four different numbers, you are attempting to discriminate against numbers from 0 to 264 and not triggering on false positives.

If you trigger on just 4 and 9, you trigger every sixteen numbers for Display Pixel Count, and you would trigger for every number that is not a multiple of 16 and less than 256 for the Front Porch and the Horizontal Sync Pulse End.

The only number that your suggestion works with is for the end of the Back Porch.

The truth table does suggest that you can ignore bit 1, and 3, but that only reduces the number of bits from nine to seven.
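The claim about bits 1 and 3 is easy to check with a short script. This is only a sketch: bit positions are numbered from 1 at the least significant bit, as in the comment, and the labels in `TARGETS` are hypothetical.

```python
TARGETS = {"blank_start": 200, "sync_start": 210, "sync_end": 242, "line_reset": 264}
WIDTH = 9  # 264 needs nine bits

def bits_never_set(values, width):
    """Bit positions (1 = LSB) that are 0 in every target value."""
    combined = 0
    for v in values:
        combined |= v
    return [pos for pos in range(1, width + 1) if not combined & (1 << (pos - 1))]

for name, value in TARGETS.items():
    print(f"{value:3d}: {value:0{WIDTH}b}  {name}")

unused = bits_never_set(TARGETS.values(), WIDTH)
print("bits that are 0 in all four targets:", unused)
```

Whether those bits can really be left out of the decode still depends on how the matches are used, which is what the rest of the thread argues about.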

I can’t speak for [Ben Eater], but hardware optimizations are often not about the number of bits you are playing with, but the real estate you are working with. Ben has eight input NAND gates, and his design works with this.

The circuit that Ben is building in the video keeps track of the state of the display driver during a scan line. These states can actually be inputs to the logic, and that may suggest an alternate design.

Pixels are only displayed from 0 to 199. The horizontal display is blanked from 200 to 264.

The Horizontal Sync pulse occurs from 210 to 241.

The horizontal pixel counter and horizontal display blanking are reset at 264.

So, the display is not blanked until bit 8, 7, and 4 go high. That is four things being tracked.

The next event is the end of the Front Porch and the start of the Horizontal Sync Pulse. From the start of display blanking, when bits 5 and 2 both go high, the pulse starts. Three things being checked.

The next event is the end of the Horizontal Sync Pulse. When next bits 6, 5, and 2 are high, the pulse ends. Four things being checked.

The last event for each scan line that is not blanked is resetting the scan line state. This can trigger when bits 9 and 4 are both high.

If Ben has access to 4 input nand gates, or two dual 4 input nand gates, he could use them instead.
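The state-based decode described above can be compared against a plain full-count comparison in software. This is a sketch of one reading of the comment, not a verified circuit. The `sync_done` flag is an extra assumption, added so the sync pulse cannot restart after count 242.

```python
def exact_decode(total=264):
    """Reference: compare the full count against 200, 210 and 242."""
    return [(n >= 200, 210 <= n < 242) for n in range(total)]

def high(n, *positions):
    """True if every listed bit position (1 = LSB) is set in n."""
    return all((n >> (p - 1)) & 1 for p in positions)

def state_decode():
    """Decode using only 1 bits plus the current state."""
    blank = sync = sync_done = False
    out = []
    n = 0
    while True:
        if high(n, 9, 4):                         # first true at 264: end of line
            break
        if not blank and high(n, 8, 7, 4):        # first true at 200
            blank = True
        elif blank and not sync and not sync_done and high(n, 5, 2):  # 210
            sync = True
        elif sync and high(n, 6, 5, 2):           # 242
            sync, sync_done = False, True
        out.append((blank, sync))
        n += 1
    return out

print(state_decode() == exact_decode())
```

In this model the two approaches produce the same blanking and sync waveforms for every count in the line.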

At first, I thought so too.

When looking for only 1’s matches, there would be only one exact match (the first), followed by several compatible matches. The specific counts being detected are all near the end of the counter ranges, and none will repeat before the counter is reset. This might work in this specific case. But try to find 3-/5-/6-/7-wide AND gates.

In a more general case based on an 8-bit counter, suppose you were looking for the number 42(dec) 2A(hex) for the first match, and 180(dec) B4(hex) match to reset the counter. When looking at just the 1’s, you would get at least 4 exact matches to 42 (and many more for compatible matches) before the counter resets. Can your design tolerate multiple matches? Perhaps not.
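Both cases can be enumerated directly. The sketch below lists every count below the reset value whose 1 bits include all the 1 bits of the target, which is what a ones-only AND gate would respond to. The function name is made up for illustration.

```python
def ones_only_matches(target, reset):
    """Counts in 0..reset-1 where every 1 bit of `target` is also 1."""
    return [n for n in range(reset) if (n & target) == target]

# The VGA line counter: targets near the end of the range, reset at 264.
for target in (200, 210, 242):
    matches = ones_only_matches(target, 264)
    print(f"{target}: first match {matches[0]}, {len(matches)} matches before reset")

# The general example from the comment: look for 42, reset at 180.
general = ones_only_matches(42, 180)
print(f"42: first match {general[0]}, {len(general)} matches before reset")
print(general)
```

For an up-counter the first match is always the target itself, because any number containing all of the target’s 1 bits is at least as large as the target. The later matches are the "compatible" ones that a design must either tolerate or latch away.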

I do have a problem with using ripple counters. Glitch counts may occur when the counters increment, as flip-flops have propagation delays and they add up. So instead of inverters, use latch registers clocked by the 10MHz clock, and make use of their Q and /Q outputs.
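To illustrate the glitch concern, here is a small model of an idealised ripple up-counter in which each stage toggles one delay after the previous stage falls. This models the commenter’s premise only. The 74LS161 is described in its datasheet as a synchronous counter, so the sketch is a pessimistic picture rather than a model of the actual build.

```python
def ripple_transients(n, width=9):
    """Intermediate values seen while an ideal ripple counter goes n -> n+1.

    Assumes each stage toggles when the stage below it falls from 1 to 0,
    so the low bits settle first.
    """
    states = []
    value = n
    for bit in range(width):
        mask = 1 << bit
        value ^= mask          # this stage toggles
        if value & mask:       # it rose 0 -> 1: no carry, the ripple stops
            break
        states.append(value)   # it fell 1 -> 0: transient value, carry continues
    return states, value

DECODE = (200, 210, 242, 264)
for n in range(264):
    states, final = ripple_transients(n)
    hits = [s for s in states if s in DECODE]
    if hits:
        print(f"{n} -> {final} briefly shows {hits}")
```

In this model every spurious appearance of a decoded value happens after the genuine match, so a set/reset latch would not notice it. A design that used the raw gate outputs directly could.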

I was doing something very similar in the 1970’s with 74xx chips with PAL generation.

The timing was deliberately made very simple in the design of PAL and also NTSC.

The end result is that gate count and input width are very low as the timing points are the first instance of a permutation and there are no repeats before the counter reset.

VGA standards including SVGA, XGA, WXGA etc are all based on the same simple logic.

In more recent times I have made various VGA interfaces in CPLD/FPGA.

To avoid the glitches you mention, you just advance the binary counters on the negative transition of the source clock and do everything else on the positive transition, or vice versa.

You might be able to take advantage of the counter: 74163 having a load pin and preset input if the counter bits aren’t used for anything else.

The 10MHz crystal limits the display to 200×600 not 200×150. The vertical resolution is unaffected.

Depends on the refresh rate, right?

No

> […] 800×600 resolution display with 60 MHz timing. The pixel clock of this video card is being clocked down from 40 MHz to 10 MHz, and the resulting display will have a resolution of 200×150.

Corrections:

It’s 60Hz timing, not 60MHz.

The sync circuit as described in the video has a resolution of 200×600, not 200×150. (200×150 would be 1/16th the number of pixels, not 1/4th).

No, it’s correct. The pixel clock is 60 MHz, you’re confusing it with refresh rate or vsync frequency.

Pushing 800×600 pixels with a pixel clock of 60Hz would result in a refresh period of 8000 seconds.

The pixel clock at the chosen mode is 40 MHz, so no, the statement "800×600 with 60MHz timing" is incorrect either way.

There is no VGA standard at this resolution with a 60MHz pixel clock.

There is however a VGA standard of 800×600 with 40MHz pixel clock and a 60Hz frame rate or refresh rate.

So I assume (as no schematic) that it’s 40MHz / 4 or 10MHz pixel and 60Hz frame rate.
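The numbers in this exchange can be checked with a few lines of arithmetic. The 264 clocks per line come from the comments above. The 628 lines per frame is the commonly published total for 800×600 at 60 Hz and is an assumption here.

```python
H_TOTAL = 264                  # pixel clocks per line at 10 MHz
V_TOTAL = 628                  # lines per frame (assumed standard figure)
PIXEL_CLOCK_HZ = 10_000_000    # 40 MHz divided by 4

line_rate_hz = PIXEL_CLOCK_HZ / H_TOTAL
frame_rate_hz = line_rate_hz / V_TOTAL
print(f"line rate  {line_rate_hz / 1000:.2f} kHz")
print(f"frame rate {frame_rate_hz:.2f} Hz")

# For comparison: 800x600 visible pixels at a literal 60 Hz pixel clock.
seconds_per_frame = 800 * 600 / 60
print(f"{seconds_per_frame:.0f} s per frame at a 60 Hz pixel clock")
```

Under these assumptions the divided clock still gives a frame rate of roughly 60 Hz, because the line is four times shorter in clocks as well.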

There is another consideration.

Modern LCD panels are driven via LVDS with a much higher native pixel and frame rates anyway. So as long as you get the horizontal and vertical sync and porch timing correct you can choose any pixel frequency you like.

The monitor recognises the standard by the sync timing and polarity and not the pixel frequency.

There is far greater a range of horizontal resolutions that can be chosen to make the logic simpler and easier.

Vertical resolution is however best to be to standard or a binary division give or take some blank lines if you wish.

The (not so modern) VGA monitor has a scaler chip that handles the scaling of different resolutions to the native resolution of the LCD panel. The pixels are sampled with an ADC. It would simply see a lower-resolution pixel as a group of 4 pixels having the same color.

Can’t you use a CPLD/Small FPGA or microcontroller for all this? Seems cheaper/simpler to me.

Yup! In every way and form it is. This isn’t about necessity, it’s about seeing what’s possible using only wires and chips, without even soldering! Or about teaching people the workings of a video card from the ground up. This was more about experimentation and fun than anything.

In my opinion it is more comprehensible this way (one chip doing something connected to this other chip doing something else) to understand.

But if I were to learn this personally (and this will be quite soon, I think), I would go directly with an FPGA, yes.

This is educational content, your method would probably suck for the purpose of educating people about the things this does.

For actually doing the required function, you are probably right, just because they are so cheap. In fact it would be a fantastic learning example to design using a CPLD/FPGA, applying all the detailed knowledge gained from Ben Eater’s excellent videos.

But this journey is one of learning knowledge, and using fundamental building blocks is one of the easiest ways to transfer understanding. At the end of the day everything that is truly complex is just a bunch of really simple things fitted together, and usually hidden by multiple layers of abstraction. Even the logic gates used are a layer of abstraction. There is no one, there is no zero, there are only electrons!

From a software coding perspective, there are many ways to implement ideas. But two are particularly common when reading other people’s code:

1. Several lines of short, concise, extremely dense code that takes hours for a human to decipher and fully understand, even the person who wrote it, given enough time.

2. Hundreds of lines of code that can be fully understood by almost anyone in just a few minutes.

Each has advantages. The above linked video I would consider to be much closer to the latter than the former.

As for using an MCU, people have done it, even using an Arduino (search for “arduino vga” in your search engine of choice).

If you watch a few of Ben’s videos, they simplify first and then slowly add complexity to explain the reality. An excellent example would be their series of videos on how networking works from the bottom to the top, explaining basic concepts like data over a wire ( https://www.youtube.com/watch?v=XaGXPObx2Gs ), clock synchronisation using Manchester coding ( https://www.youtube.com/watch?v=8BhjXqw9MqI ), and adding frames, flags, and bit stuffing ( https://www.youtube.com/watch?v=xrVN9jKjIKQ ). Those thirteen videos are an excellent learning resource if you knew nothing, or even if you thought you knew everything, about how networking works.

Who cares about VGA in 2019? Most gaming monitors don’t even have a D-SUB connector on them. Show people how to implement HDMI on AVR or STM32.

HDMI requires emitting new bits at no less than 251 million bits per second. I eagerly await your explanation of how to do this on anything less than dedicated silicon or an FPGA.

HDMI spec is 25MHz minimum, not 251.

Derp, nevermind, I was thinking of pixel clock, not TMDS clock.

… and that’s per-channel, over four differential bit-serial channels concurrently (including the clock pin). For a microcontroller to emulate that, it’s gonna need to be able to update a GPIO byte port at a sustained and deterministic transfer rate of 251 MBytes per second…
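The 251 figure follows from the pixel clock and the TMDS encoding, which sends each 8-bit value as a 10-bit symbol. The sketch below assumes the 25.175 MHz pixel clock of 640×480 at 60 Hz as the lowest common mode. The constant and function names are hypothetical.

```python
TMDS_BITS_PER_SYMBOL = 10   # each 8-bit value is sent as a 10-bit symbol
DATA_CHANNELS = 3           # three data pairs, plus a separate clock pair

def tmds_bit_rate(pixel_clock_hz):
    """Serial bit rate on one TMDS data channel."""
    return pixel_clock_hz * TMDS_BITS_PER_SYMBOL

pixel_clock_hz = 25_175_000  # 640x480 @ 60 Hz, assumed as the slowest mode
per_channel = tmds_bit_rate(pixel_clock_hz)
print(f"{per_channel / 1e6:.2f} Mbit/s per data channel")
print(f"{per_channel * DATA_CHANNELS / 1e6:.2f} Mbit/s across the data channels")
```

This is why the two figures in the thread differ by a factor of ten: one is the pixel (and TMDS clock) rate, the other is the serial bit rate on each data pair.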

Other than FPGA or ASIC, there is the new Parallax Propeller 2 microcontroller that can potentially bitbang that fast. See:

http://forums.parallax.com/discussion/169701/digital-video-out-64006-es-d#latest

You first mr. knowitall.

I won’t be able to implement HDMI in software on either of those platforms. Go get a real monitor, or a VGA to HDMI converter box.

You can get VGA to HDMI dongles for less than $10 these days, so not a problem.

Really great video tutorial on VGA. I had an FPGA board lying around with a VGA port. Now I know how to create a video signal with it.

If your horizontal pixel counts are always an even number of pixels, you can use 74HC40103 8-bit down-counters and count on every other pixel to keep the count within 8 bits. This is what I do for my “Bird’s Nest” Intellivision overlay video project on AtariAge. Using down-counters also means that you don’t have to check the actual count value: you can hard-code the value and only check for when the chip reports that the count has reached zero.
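A software model of that idea might look like the sketch below. It is one possible arrangement, not the commenter’s actual circuit: each segment of the line loads a hard-coded preset, the counter is clocked once per pixel pair, and only the zero condition is checked. Loading a preset of length/2 − 1 is how this model makes zero coincide with the last pair. The real chip’s load timing should be checked against its datasheet.

```python
def down_counter_line(segments):
    """Yield (segment_name, pixel_index) for one scan line.

    Segment lengths are in pixels and must be even, because the
    counter advances once per pair of pixels.
    """
    pixel = 0
    for name, pixels in segments:
        if pixels % 2:
            raise ValueError(f"{name}: {pixels} pixels is not an even count")
        preset = pixels // 2 - 1           # value loaded into the counter
        if not 0 <= preset <= 0xFF:
            raise ValueError(f"{name}: preset {preset} does not fit in 8 bits")
        count = preset
        while True:
            yield name, pixel              # two pixels pass per counter clock
            yield name, pixel + 1
            pixel += 2
            if count == 0:                 # zero detect: move on and reload
                break
            count -= 1

SEGMENTS = [("visible", 200), ("front_porch", 10), ("sync", 32), ("back_porch", 22)]
line = list(down_counter_line(SEGMENTS))
print(len(line), "pixel clocks per line")
print(line[199], line[200])
```

With the segment lengths from the discussion above, the largest preset is 99, well within 8 bits, and no count value other than zero ever needs decoding.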