Tim [Mithro] Ansell has a lot to tell you about the current state of open FPGA tooling: 115 slides in 25 minutes if you’re counting. His SymbiFlow project aims to be the GCC of FPGA toolchains: cross-platform, multi-platform, completely free, and all-encompassing. That means that it’s an umbrella framework for all of the work that everyone else is doing, from work on synthesis and verification tools, to placing and routing, to vendor-specific chip libraries. His talk catches you up with the state of the art at the end of 2019, and it’s embedded below. Spoiler alert: SymbiFlow has the big Xilinx 7-series FPGAs in its crosshairs, and is closing in. SymbiFlow is that close to getting a networked Linux system on the FPGA fabric in a Xilinx 7 today, completely independent of any vendor tools.

But let’s step back a sec for a little background. When you code for an FPGA, words you type get turned into a bitstream of ones and zeroes that flip perhaps a few million switches inside the chip. Going from a higher-level language to a bitstream is a lot like compiling normal programming languages, except with the twist that the resulting computational logic doesn’t map straight into a machine language, but rather into lower-level physical hardware on the FPGA. So “compilation” for FPGAs involves two steps: synthesis and place-and-route. Synthesis takes the higher-level language that you write and turns it into a set of networks and timing requirements that represent the same logic, and can work across chip families. Yosys is the open-source synthesis tool of choice here.

Place-and-route then maps the program logic into the individual FPGA’s architecture and feature set, and it’s here that reverse engineering work has been, and is being, done to accommodate more FPGA families. nextpnr takes the program logic and maps it into FPGA gates, and it can’t do this without detailed descriptions of the various chips’ internals. For this, SymbiFlow splits each FPGA chip family into its own bitstream documentation and tooling projects. So far, we’ve got Project IceStorm for the Lattice iCE40 chips, Project Trellis for the Lattice ECP5s that were on the Supercon badge, and Project X-Ray for the Xilinx 7s.
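To make the two-step flow concrete, here is a minimal sketch of how the open tools chain together for an iCE40 part: Yosys for synthesis, nextpnr for place-and-route, and IceStorm's `icepack` to produce the final bitstream. The file names, the `top` module name, and the `hx1k` / `tq144` device and package (the combination found on an iCEstick) are assumptions for illustration only; the script just builds and prints the command lines and does not run the tools.

```python
import shlex


def ice40_flow(top="top", device="hx1k", package="tq144"):
    """Return the commands of an open iCE40 flow as argument lists.

    All file names are derived from the (assumed) top-level module name.
    """
    verilog = f"{top}.v"      # HDL source (assumed single file)
    netlist = f"{top}.json"   # synthesized netlist written by Yosys
    pcf = f"{top}.pcf"        # pin constraints (assumed to exist)
    asc = f"{top}.asc"        # textual bitstream written by nextpnr
    bitstream = f"{top}.bin"  # binary bitstream written by icepack
    return [
        # Step 1, synthesis: HDL -> technology-mapped netlist
        ["yosys", "-p", f"synth_ice40 -top {top} -json {netlist}", verilog],
        # Step 2, place-and-route: netlist -> placed and routed design
        ["nextpnr-ice40", f"--{device}", "--package", package,
         "--json", netlist, "--pcf", pcf, "--asc", asc],
        # Packing: textual bitstream -> binary file for the programmer
        ["icepack", asc, bitstream],
    ]


if __name__ == "__main__":
    for cmd in ice40_flow():
        print(shlex.join(cmd))
```

Swapping in a different chip family means swapping the synthesis pass and the place-and-route binary, which is exactly the per-family split described above.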

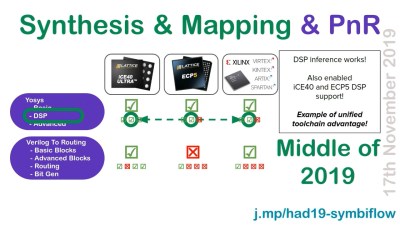

Mithro starts the talk off by mentioning that the goal is to cover all FPGAs with one toolchain. That’s an incredible goal that’s far, far away. But progress is being made ridiculously fast. In early 2018, for instance, only the Lattice iCE40 parts were really well understood and supported. This FPGA was picked because it’s accessible, cheap, and was easy to reverse engineer, but Lattice’s market share is approximately zero. Xilinx and Altera are the Intel and AMD of the FPGA world, and without coverage of their chips, the project can only be said to be just getting off the ground. However, within a year, Dave Shah had essentially completely documented the much more powerful Lattice ECP5, and we were able to bring you the Hackaday Supercon Badge. So much for Lattice.

As alluded to in the opening, the real story here is the tremendous progress made in 2019 on Xilinx parts. As Project X-Ray got seriously underway, some parts of nextpnr turned out to be more Lattice-specific than previously thought, and a new place-and-route tool, Verilog to Routing, developed at the University of Toronto, got pulled into SymbiFlow.

At the same time, some of the synthesis steps in Yosys weren’t making optimal use of the more advanced functionality of the Xilinx parts, like the specialized digital-signal processing (DSP) blocks, and the project made great progress on that front in 2019. (Of course, some of the optimizations of DSP on the Xilinx 7 chips spilled over to the Lattice ECP5s, so everyone wins.)

Which brings us to the punchline. Can SymbiFlow fully replace Xilinx’s proprietary (and unwieldy) Vivado toolchain? No, because the FPGA’s high-speed peripherals don’t play nice with the open place-and-route yet. And while Mithro said in the talk that the SymbiFlow consortium was working full-out to get to that point by the end of 2019, we talked to him on Dec 29th, and they were still at it. But if you’re asking us, we’d predict that we will see a full and fully open Xilinx 7 toolchain in 2020. Maybe even this summer? It’s a project that’s near and dear to our hearts, so we’ll keep you in the loop.

But while Mithro, being a Linux/software guy at heart, is focused on getting a fully open toolchain to build up a Linux system, you shouldn’t be discouraged from trying anything else on the Xilinx 7 or Lattice parts right now. Both of these flows are entirely functional for microcontrollery or IoT applications. As long as you don’t need the high-speed memory and Ethernet peripherals, you’re good to go. And if you do need those things, you can synthesize with Yosys and do place-and-route with the closed Vivado tools until SymbiFlow ticks off a couple more boxes. There’s never been a better time than NOW to get started with FPGAs, IMHO.

It’s Xilinx not XiLEX. I nearly stopped watching after the first 20 mispronunciations. But hats off to these guys for what they are doing.

Lattice market share has grown beyond 10% in 2019/2020 forecasts. It’s not a small company anymore thanks to parts like the ECP5, their mobile and automotive focus, and acquisitions like SiliconBlue. And if you look at the small to mid-sized part markets, Lattice is way beyond 10%. Xilinx and Intel make large parts that other silicon vendors use to prototype ASICs – where the real money is.

Lattice could grow even more if they just embraced this effort and marketed to the builders of the product rather than the products themselves.

It’s a bit of a pain in the ass really though how the big boys have everything directed at you developing an ASIC, no I don’t got 4 million stuffed in the mattress and don’t need 500,000 of them. With the pricing reflecting value as a tool which gets you to ASIC quicker, saves you ASIC development costs, avoids expensive mistakes on the ASIC, chill the flip out, I wanna do this on FPGA.

Haha! That feeling takes me back. I don’t think there were any FPGAs when I first got into electronics, but there were ASICs, and *everything* was geared for big orders. Maplin was good then, supplying all sorts of things we’d otherwise have had to bulk-buy.

I have learned a bit of Verilog from books etc. but what really holds me back is not knowing where to start. Some people say Lattice, but you also have the MiSTer project using the DE-10 which is Intel and an attractive platform for the sort of thing I want to do.

Lattice are the only ones who seem to have decent free tools.

Where would you say is a good place to start?

The swapforth j1a on a Lattice iCEstick working on Linux is a good place to start: a project you can immediately have up and running, with a full compilation sequence, a very minimalistic soft core, and an ANS Forth image.

Want to develop a new module? Great, instantiate it into the top level file, hook it into the IO address select part for reading and writing, and then rebuild, connect, and talk to the j1a and ask it to set/get values on your new module to test it. Or write tiny simple functions to generate test data sequences etc.

Having something that gives you those abilities to not just get something on the FPGA (blinking a LED) but also being able to trivially test parts, is a good place to start.

You can quite easily learn to write some simple state machines there.

Then you can borrow the j1a’s serial module, and strip out the actual soft core, and just have your own simple ASCII byte interpreting state machine. You really only need to implement enough machine to accept a string of ascii chars and extract a nibble from each, shifted nibblewise onto a register, as well as a mechanism to generate a string of hex ascii chars from a register to read back, to do everything except programmability on an FPGA. (hint: put a fifo between your state machine and the return serial module, so it can generate a burst of ascii, but give the serial sender time to shift them out without losing any).

Verilog is good that way: you can just put your ascii command chars into a case statement, as it understands ascii literals out of the box (as opposed to VHDL, which was designed by military committee, and has to be ‘taught’ everything from bit states on up – and also needs anything instantiated mentioned in triplicate. Ugh.).
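For anyone who wants to pin down the behavior before writing the Verilog, here is a minimal software model of the hex-nibble interpreter described above. It is a Python sketch rather than HDL, so it only captures what the state machine should do, which makes it handy as a reference for a testbench. The class name, method names, and the 16-bit default register width are all made up for illustration, and the FIFO from the hint is not modeled.

```python
HEX_DIGITS = "0123456789abcdefABCDEF"


class HexShiftRegister:
    """Behavioral model: ASCII hex chars in, register value out.

    Hypothetical names; the width is assumed to be a multiple of 4 bits.
    """

    def __init__(self, width_bits=16):
        self.width_bits = width_bits
        self.mask = (1 << width_bits) - 1
        self.value = 0

    def feed(self, char):
        """Shift one ASCII hex digit in, nibble-wise.

        Returns True if the character was a hex digit, False if ignored.
        """
        if len(char) != 1 or char not in HEX_DIGITS:
            return False
        nibble = int(char, 16)
        # Oldest nibble falls off the top, new nibble enters at the bottom.
        self.value = ((self.value << 4) | nibble) & self.mask
        return True

    def read_back(self):
        """Return the register as a fixed-width string of hex ASCII chars."""
        digits = self.width_bits // 4
        return format(self.value, f"0{digits}X")


if __name__ == "__main__":
    reg = HexShiftRegister()
    for ch in "1a2F":
        reg.feed(ch)
    print(reg.read_back())
```

In hardware, `feed` corresponds to the case statement on the incoming byte, and `read_back` to the burst of characters that gets pushed into the FIFO ahead of the serial sender.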

“Xilinx and Altera are the Intel and AMD of the FPGA world”. A little confusing: Altera IS Intel… has been for a while now.

So let’s get it all sorted out, Altera is Intel, Xilinx represents AMD, so we going modern or classic, with VIA or Cyrix, but then who is the IDT Winchip, or the Rise MP6, or even… the UMC U5… then are we gonna include Harris because of that super fast 286 lifting them beyond the mere copy, or for that matter NEC who was in the game with the V20 et al, and where precisely does Nexgen fit in???

That’s Tim’s joke. I swiped it.

The point is that the FPGA world is dominated by two players, one with a lion’s share, one with a smaller share: Intel and AMD. The punchline is that in the FPGA world, Intel is “AMD”. (Or at least, they purchased Altera, who was “AMD”.)

C’mon, it’s funny!

And now AMD owns Xilinx.

Thank you for the encouragement to explore FPGA’s. I think sometimes the real “twist” is in mentally stopping at the machine language, and not seeing the underlying bitstream in any processor. Having to wrap one’s mind around FPGA’s has probably eliminated more than one mental block, when looking for the best way to do a job.

I agree. I wish people would just stop the computer analogy altogether. Bitstreams are not machine code. Compiling HDL is a completely different process than compiling software. This analogy is what makes teaching FPGA coding so difficult: students always expect HDL to be just another language. It is not; it is a description of a circuit, or at a minimum a description of registers and combinational logic.

I’m not really sure what this is all about. I am an embedded engineer and use FPGA’s and CPLD’s professionally. I have never paid for any tools as the vendors provide free editions that support all but the bleeding edge stuff. The vendors are after selling the IC’s not the tools.

What would be interesting and useful is an open source simulation tool to upstage the appallingly slow / nobbled ModelSim that is provided free with the vendor tools.

Sims: try Icarus Verilog for the small stuff, and Verilator for the bigger. GTKwave for visualization.

But open isn’t about price. It’s about the ability to adapt/modify/extend the tools as you want. There are formal verification tools being built into the open toolchain. You can mix/match tools at different stages of the flow. People are making their own GUI frontends, from simpler to incredibly complex. Etc. Open is about flexibility.

But yeah. If you’re not sure what this is all about, that’s the surest sign that you should give it a try. :) Remember when you used to need to buy vendors’ compilers for CPUs?

I was thinking the same, everyone had a ‘Borland C++’ box on their desk in the 90s, now everyone uses gcc. At some point, the silicon companies will realize it’s easier to support FOSS toolsets for all the free labor! Why pay for a SW dev staff in your office when you can just rely on millions of geeks around the world?

You almost answered your question yourself. The project aims to be a multi platform toolchain for FPGA development. So you prototype your project on an iCE40 FPGA dev board and download and learn the Lattice Diamond vendor toolchain. Then you decide to add more features and switch to an Artix-7 board. Another vendor toolchain to download and learn. Maybe you decide before moving to production you aren’t a fan of the Xilinx tools and switch back to Lattice on an ECP5 FPGA. Switch toolchains again. SymbiFlow would not require all that flip flop.

Not to mention some of the free vendor toolchains are only free for the lower end FPGAs. And IP cores are $$$ in those closed source ecosystems.

Except you don’t use Diamond for iCE40 development (you use Radiant). And generally simple synthesis is a matter of opening a file browser, selecting a few .v files, and pressing a button. There is very little difference in vendor tools for that. Differences are mostly in support functions for your designs. But that’s not the appeal of FOSS FPGA tools.

The appeal spans a lot of areas. I’d like to use a command line tool chain on a modern Linux box. The Lattice builds are a pain to run on Linux – especially if you don’t use their distro. I’d like to run tools on the RPi directly connected to the FPGA. Good luck with that with vendor tools. I’d like to implement a PCIe core with high speed serdes ports. All the vendors want big $$ for that. Etc.

Oops, got my FPGAs mixed up.

I’m currently using the MachXO3L, which requires Diamond as there is no support in FOSS FPGA tools, yet.

Which is why I was looking for an alternative to vendor GUI tools, thanks! I much prefer Linux/command line for all. It looks like the SymbiFlow toolset answers my call. It also brings in Python, CMake, etc., which most SW engineers are familiar with.

A good reason to use opensource toolchains & core libraries is to eliminate the waste you get when using the vendor’s tools. Bunnie points out in his review of LiteX vs Vivado ( https://www.bunniestudios.com/blog/?p=5018 ) that the vendor provided IP cores are often not optimized (they want to sell you bigger faster chips).

I challenge anyone to out-code the Vivado FIR compiler. You will spend the better part of a month and end up with an inferior result.

I would like to get the month of my life back that Vivado has stolen. It’s so painfully slow and the most sluggish dev tool I’ve seen in ages.

Then you haven’t tried Vitis :>) Actually I have been OK with Vivado speed not because it is fast but because it was so much faster than ISE. One also has to separate the synth speed from the P&R speed. While there are faster Synth engines, the total time is dominated by P&R, so getting fast synth won’t impact my dev time.

So can anyone tell me when I will get a new-gen MiSTer that could hardware-emulate ’90s PCs and consoles?

Fer Gawd’s sake: there’s an N in XILINX. It’s zzz-eye-links, not zzz-eye-licks.

This is the second recording of an FPGA presentation that I’ve seen where the person editing seems to have dozed off. At 12:50 for instance, the guy POINTS to the presentation, yet the editor could not be bothered to put up the slides in the video.

Editing was being done during the live stream. The link to the slides was published in the YouTube description so you can follow along if you like:

https://j.mp/had19-symbiflow

Thanks for the slides!

If you want to go into production, then “open source” is the equivalent of try before you buy, and boy is that tool chain going to cost you! A $1500 unit made with $12 of parts? $8000 software and a $150 a month fee? And that’s for one tool in the chain. That’s fine if you’re going to sell thousands if not millions of units, but what if you are going into a niche of high quality, low quantity? Some people don’t want to get rich, they just want to share what they love. To afford this you need to sell in high volume, cut every corner you can, and use the cheapest crap you can get away with selling to the “user”. No high quality product, Period. There was a time when a bug was a catastrophe, now they are expected. Almost no one has ever heard high quality sound, and cars are less fuel efficient per horsepower than 50 years ago, cars are now “vehicles” for selling ASICs. There’s the Chinese option, they don’t pay that initial fee and yearly fee, but that’s all I’ll say about Chinese electronics. Did anyone carefully read the user agreements? These companies essentially own you, your company, and your work if you agree to them. No thank you. Do they really need all that money to compensate for their inadequacies? If you need to get rich, why not sell in high volume at a low price, that always worked in the past, China has been the master merchants of the world for thousands of years. Historically, every society that engaged in the “gouge ’em” business model has collapsed, but who reads history. Why not sell ICs and then easily facilitate their use? The best electronics I have ever encountered are one-offs being enjoyed by their creators, they can’t afford millions of dollars to run their basement lab to sell a few units a year. There’s a lot of stupidly self-destructive business practices. I guess we’re stuck with our slow, under-powered, poor quality, foreign, slave-labor produced computers a.k.a. “phones”, it gives us something to stare at on the unemployment line and in the soup kitchens, along with all the other computer scientists, though I don’t use “phones”, I read something called “books”, some of you may remember them.