1987 was a glorious year. It brought us the PS/2 keyboard standard that’s still present on many a motherboard back panel to this day. (It also marked the North America/Europe release of The Legend of Zelda but that’s another article.) Up until this point, peripherals were using DIN-5 and DE-9 (often mistakenly called DB9 and common for mice at the time) connectors or — gasp — non-standard proprietary connectors. So what was this new hotness all about? [Ben Eater] walks us through the PS/2 hall of fame by reverse-engineering the protocol.

This is a clocked data protocol, so a waveform is generated on the data pin for each key pressed that can be compared to the clock pin to establish the timing of each pulse. Every key sends a unique set of encoded pulses and voila, the whims of the user can quickly and easily be decoded by the machine.

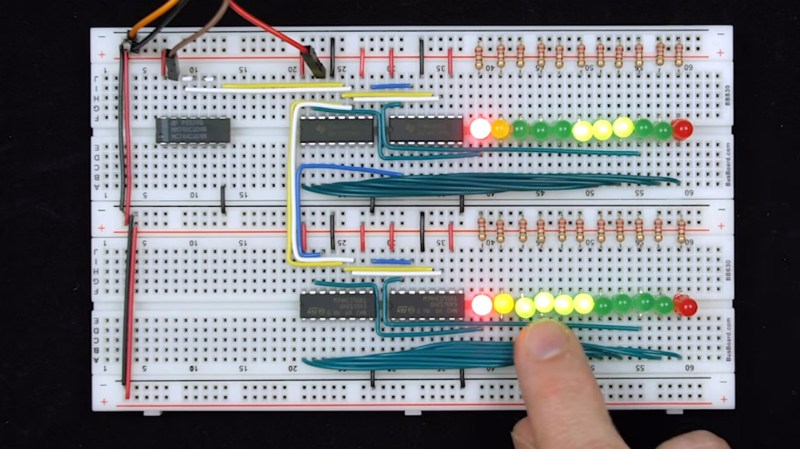

This is where [Ben’s] dive really shines. We know he’s a breadboarding ninja, so he reaches for some DIP chips. A shift register is an easy way to build up a parallel PS/2 interface for breaking out each data packet. There are a few quirks along the way, like the need to invert the clock signal so the shift register triggers on the correct edge. He also uses the propagation delay of a couple of inverter gates to fire the 595 shift register’s latch pin slightly late, avoiding a race condition. A second 595 stores the output for display by a set of LEDs.

Beyond simply decoding the signal, [Ben] goes into how the packets are formatted. You don’t just get the key code; you also get the usual serial interface framing and error detection: start and stop bits plus a parity bit. He even drills down into extended keys that send more than one packet, and a key-up action packet that’s sent by this particular keyboard.
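To make the packet format concrete, here is a minimal Python sketch of checking and decoding one frame. It assumes the commonly described PS/2 device-to-host layout, which the article itself does not spell out: one start bit (0), eight data bits sent least significant bit first, one odd parity bit, and one stop bit (1). The function names are hypothetical.

```python
def encode_ps2_frame(code):
    """Build the 11 bits a keyboard would send for one byte (assumed layout)."""
    data = [(code >> i) & 1 for i in range(8)]  # LSB first
    parity = 1 - (sum(data) % 2)                # odd parity over data + parity
    return [0] + data + [parity, 1]             # start, data, parity, stop


def decode_ps2_frame(bits):
    """Return the byte carried by an 11-bit frame, or raise ValueError."""
    if len(bits) != 11:
        raise ValueError("expected 11 bits")
    start, data, parity, stop = bits[0], bits[1:9], bits[9], bits[10]
    if start != 0:
        raise ValueError("bad start bit")
    if stop != 1:
        raise ValueError("bad stop bit")
    # Odd parity: the data bits plus the parity bit hold an odd number of ones.
    if (sum(data) + parity) % 2 != 1:
        raise ValueError("parity error")
    # Reassemble the byte, least significant bit first.
    return sum(bit << i for i, bit in enumerate(data))


if __name__ == "__main__":
    frame = encode_ps2_frame(0x1C)
    print(frame, hex(decode_ps2_frame(frame)))
```

A single flipped bit anywhere in the frame trips one of the three checks, which is all the error detection this format offers.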

This is the perfect low-level demo of how the protocol functions. On the practicality side, it feels a bit strange to be breaking out the serial to parallel when it would be very easy to monitor the two signal lines and decode them with a microcontroller. You might want to switch it up a bit, stick with the clock and data pins, but connect them to a Raspberry Pi using just a few passive components.

As I recall, the original “IBM PC” used a 595 to get the serial keyboard signal into parallel.

It was a later iteration that used a microcontroller to handle the serial keyboard.

The keyboard always used a microcontroller.

While this is simple logic for decoding the PS/2 protocol, it is unlikely to recover gracefully from glitches, ESD, or accidental connector removal and reconnection. When clock bits are missed without a resynchronization, all the data collected from that point on is garbled. This was one of the reasons why early PCs didn’t handle reconnects well and required a reboot if someone tripped on the keyboard cable.

To recover, you would need a timeout on the last clock pulse and then try to resynchronize on the start bit. I have implemented that in my PS/2 code and it always recovers.
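The timeout idea can be sketched roughly as follows. This is not the commenter’s code, just a minimal Python model of it: the class name, the `on_clock_edge` callback, and the 250 µs timeout are all assumptions (the timeout only needs to be comfortably longer than one clock period and much shorter than the gap between frames). The frame layout assumed is start bit, eight data bits LSB first, odd parity, stop bit.

```python
class Ps2Receiver:
    """Collects one bit per clock edge and drops stale partial frames."""

    FRAME_BITS = 11

    def __init__(self, timeout_us=250):
        self.timeout_us = timeout_us  # assumed value, tune for the keyboard
        self.bits = []
        self.last_edge_us = None

    def on_clock_edge(self, data_bit, now_us):
        """Feed the data line level at each sampling edge.

        Returns the received byte when a valid frame completes, else None.
        """
        # If the clock has been quiet for too long, whatever was collected
        # so far belongs to a broken frame: throw it away and start over.
        if self.last_edge_us is not None:
            if now_us - self.last_edge_us > self.timeout_us:
                self.bits = []
        self.last_edge_us = now_us

        # A frame can only begin with a start bit of 0.
        if not self.bits and data_bit != 0:
            return None

        self.bits.append(data_bit)
        if len(self.bits) < self.FRAME_BITS:
            return None

        frame, self.bits = self.bits, []
        data, parity, stop = frame[1:9], frame[9], frame[10]
        if stop != 1 or (sum(data) + parity) % 2 != 1:
            return None  # framing or parity error: discard the frame
        return sum(bit << i for i, bit in enumerate(data))
```

With this in place, a frame cut short by a glitch or a pulled connector only costs that one frame, because the next burst of clock pulses arrives after the timeout and starts from a clean state.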

An interesting fact about USB keyboards is that they use the PS/2 protocol when the D+ and D- lines are pulled up to Vdd. All USB keyboards that I tested do that, and I take advantage of it when I want to use a PC keyboard with an MCU. I never used the USB protocol for that purpose, as implementing the PS/2 protocol is a lot simpler.

Many more special (think reprogrammable) or “gamer” keyboards do not.

Which all of mine are, since my Logitech IN 2001 SE finally died.

Remember the little adapters that came with USB keyboards when they were introduced 20 years ago – they do the pullup:

https://commons.wikimedia.org/wiki/File:USB_to_PS2_mouse_adapter.jpg

Many keyboards I’ve bought over the last 10-ish years no longer support the usb-to-ps2 transition spec.

It was only meant for a few years anyway, but I suppose it took (is taking) a lot of time for the transition keyboards to work their way through channels. But new keyboard controllers seem to be hit or miss, with the sub-$20 ones consistently no longer supporting ps2 :(

That is true, but it relies on the firmware to detect the state of the I/O lines at startup. Depending on the state the firmware will configure the lines to behave as PS/2 I/Os, or as USB data lines (connected to the on-chip USB module). There is no magic.

Ah, so that’s how it works! Thank you Jacques.

A logic analyzer would have made this a little easier in this case.

That is one.

If he just connected the clock as is, it would read data on the rising edge, which conveniently is at the middle of the data bit, so he wouldn’t need the delay at all. I guess that is how it was planned to be used :)

I watched this on the way to work yesterday so wasn’t 100% concentrating. I couldn’t help but think that it’d be largely unusable if you were actually interfacing this.

It seems the best protection against garbage data is constant sampling, plus the fact that data bursts are short compared to the time between key presses.

I guess it was an excellent demonstration, but there is something niggling about the way it’s set up that I can’t put my finger on.

If you queue up a long enough buffer of bits, you can detect a desync with the data by the parity being off, then shift a bit window one bit at a time (at most 8 times) until you find the proper positioning where parity checks out on enough packets. Then you can recover your place.
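A rough Python sketch of that sliding-window search follows. It assumes 11-bit frames (start bit 0, eight data bits, odd parity, stop bit 1) and, as a precaution, tries every alignment within one frame length. The names `frame_ok`, `find_frame_offset`, and the `min_frames` threshold are hypothetical, and the check uses the start and stop bits as well as parity, since parity alone passes by chance about half the time.

```python
FRAME_BITS = 11


def frame_ok(frame):
    """True if an 11-bit window looks like a valid frame."""
    data, parity = frame[1:9], frame[9]
    return frame[0] == 0 and frame[10] == 1 and (sum(data) + parity) % 2 == 1


def find_frame_offset(bits, min_frames=3):
    """Return the first bit offset at which every complete frame validates.

    Returns None if no alignment yields at least `min_frames` valid frames.
    """
    for offset in range(FRAME_BITS):
        starts = range(offset, len(bits) - FRAME_BITS + 1, FRAME_BITS)
        frames = [bits[s:s + FRAME_BITS] for s in starts]
        if len(frames) >= min_frames and all(frame_ok(f) for f in frames):
            return offset
    return None
```

Requiring several consecutive frames to validate matters: a single misaligned window can look fine by accident, but the odds drop quickly as more frames have to agree.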

“it would be very easy to monitor the two signal lines and decode them with a microcontroller.”

Go big (absolutely hardest way) or go home!

Aww heck, there must be a way to do it with a 555. Or maybe a whole bunch of 555s

Some history to explain some questions asked in the video.

Prior to the XT, IBM had an existing keyboard (84 keys) in production. The PS/2 scan codes came from this earlier keyboard and these represented the position of the key rather than the label (letter) printed on the key and this is why there is no correlation to ASCII. This was done for international variations. Not surprising for a company named International Business Machines. DOS had code pages for international variants.

Earlier keyboards were exclusively used for text entry and they did not indicate when a key was released. At that time the protocol was one 9 bit word. This wasn’t good for games or other input types so a second word was added to both maintain compatibility with existing hardware and indicate the release of a key.

Later keyboards were extended to 101 keys (PS/2) and then the protocol was extended to three 11 bit words. This was not compatible with older hardware and many keyboards of that era had a switch to select either “XT/AT” protocol.

I didn’t really follow that either.

The IBM PC keyboard from the start issued a code on key down, and a code on key up. I have no idea why, but it sure wasn’t about games.

The keyboard format never changed. Whether a DIN or PS/2 connector, the keyboard outputted the same form.

There was a change, and I can’t remember if it was with the AT or PS/2. I guess the AT, it added a microcontroller on the motherboard for the keyboard interface. And the keyboard accepted some level of communication from the motherboard. Most important, the keycodes changed.

So the “XT/AT” switches were about generating the new keycodes. It was a transition period. I once opened a keyboard, and the switch was there, but its usefulness had passed, so there was no need to slot the case for it.

Key down/key up allow you to do autorepeat on the PC side, which is easier and more useful than doing it on the keyboard side

It’s also the only practical way you can track arbitrary key combinations to use special characters and commands.

The keyboard doesn’t know whether you intend to press one or multiple keys as it scans the key matrix, so it would have to scan the whole thing at least a couple times to find which keys are down, and then send a special code for that combination.

Now the problem is that the number of combinations on a 104 key board is vast. There are 5,356 unique combinations of two keys, 182,104 combinations of three, 4,598,126 combinations of four…. etc. In other words, if you want to track all possible key combinations with just a “keys down” message, each scan code has to be 104 bits long. If you send key up/down messages, an 8-bit code is enough.
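The arithmetic above is easy to check, and the key up/down alternative is just as easy to sketch. In the Python below, `combination_counts` reproduces the figures for a 104-key board, and `held_keys` tracks which keys are down from a stream of 8-bit codes. The second function assumes the usual scan code set 2 convention (0xF0 prefixes a release, 0xE0 prefixes an extended key); both function names are hypothetical.

```python
from math import comb


def combination_counts(keys=104, sizes=(2, 3, 4)):
    """Number of distinct sets of simultaneously held keys, per set size."""
    return {k: comb(keys, k) for k in sizes}


def held_keys(codes):
    """Return the set of keys still held after a stream of 8-bit codes.

    Keys are represented as (is_extended, code) tuples.
    """
    held = set()
    extended = released = False
    for code in codes:
        if code == 0xE0:      # next code belongs to an extended key
            extended = True
            continue
        if code == 0xF0:      # next code is a key release
            released = True
            continue
        key = (extended, code)
        if released:
            held.discard(key)
        else:
            held.add(key)
        extended = released = False
    return held


if __name__ == "__main__":
    print(combination_counts())
    # Two keys go down, one comes back up: one key is still held.
    print(held_keys([0x12, 0x1C, 0xF0, 0x1C]))
```

The host only ever needs a set of currently held keys, so any combination is representable without the keyboard having to encode it.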

I’m not sure what you are trying to say. “Prior to the XT, IBM had an existing keyboard (84 keys) in production.” That sounds like you think IBM had only one keyboard, maybe you were thinking of the IBM Model F? The first Model F keyboard appeared on the IBM System/23 Datamaster, released one month before the IBM PC. It was an 83-key keyboard. In fact IBM had LOTS of keyboards before the XT, how do you think their terminals communicated with mainframes? The Model F variations alone came in 122 keys, 104 keys, even 50 keys. Are you limiting the discussion to IBM PCs? The Compaq Portable had a “foil and foam” keyboard made by Keytronics in 1983, before the XT. Keytronics also made keyboards for at least one of the TRS-80s, the Apple Lisas, Sun type 4, Wang… I guess I’m trying to say that computer-keyboard-making was already a mature industry by the time IBM put their first PC out. Plus, clones dominated the market by the time the PS/2 standard came out. You’re filtering out all of that history to concentrate solely on IBM’s contribution. “Earlier keyboards were exclusively used for text entry” Well, no. You seem to be vaguely aware of the System/23 Datamaster, and maybe you can say that was exclusively for text entry. But there were many keyboards out for terminals long before that, which had various uses besides text entry. One 7-bit encoding scheme, ISO/IEC 646, also called ECMA-6, was introduced in 1967 to address shortcomings of ASCII-US, and DEC terminals used an NRCS standard in ’83, and IBM had the 8-bit EBCDIC standard, which has more to do with PC keyboards not correlating to ASCII than the “International” in their name. If you are going to present history, then get it right! I’m still trying to figure out if you purposely meant to focus exclusively on the lineage of IBM PC keyboards, or you really think that was the be-all and end-all of electronic keyboards used with computers. I was a teenager back then and certainly had, and still have today, a very different perspective.

I suspect that leaving the clock uninverted would indeed be more reliable.

That said, the delay would still be required. It delays the latch clock relative to the shift register clock rather than delaying the shift register clock itself. The SN74HC595 datasheet says that when those clocks are tied together “the shift register is one clock pulse ahead of the storage register”. RCLK needs to rise at least 19ns after the previous rising edge of SRCLK to get the latest data. Without the delay the output would always be offset by one bit.
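The one-bit offset described in the datasheet quote can be illustrated with a toy Python model of the two register stages. This is a behavioral sketch only, with no timing: the class and method names are hypothetical, and "delayed latch" simply means the storage register is clocked after the shift has completed.

```python
class ShiftRegister595:
    """Toy model of a 595: a shift stage feeding a storage (output) stage."""

    def __init__(self):
        self.shift = [0] * 8    # index 0 is the most recently shifted-in bit
        self.storage = [0] * 8  # what the output pins would show

    def srclk_rising(self, ser):
        """Shift one bit in from the serial input."""
        self.shift = [ser] + self.shift[:-1]

    def rclk_rising(self):
        """Copy the shift stage to the storage stage."""
        self.storage = list(self.shift)

    def tied_clock_rising(self, ser):
        """SRCLK and RCLK rise together: storage sees the pre-shift contents."""
        before = list(self.shift)
        self.srclk_rising(ser)
        self.storage = before

    def delayed_latch_rising(self, ser):
        """RCLK rises slightly after SRCLK: storage sees the new contents."""
        self.srclk_rising(ser)
        self.rclk_rising()


if __name__ == "__main__":
    tied, delayed = ShiftRegister595(), ShiftRegister595()
    for bit in [1, 0, 1, 1]:
        tied.tied_clock_rising(bit)
        delayed.delayed_latch_rising(bit)
    print("tied:   ", tied.storage)     # lags the shift stage by one bit
    print("delayed:", delayed.storage)  # matches the shift stage
```

In the tied case the outputs always trail the shift stage by one clock, which is the offset the small gate delay is there to remove.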

@poiuyt When he builds the actual interface for his 6502 computer he does it differently. The RCLK is driven by the broad pulse, created by integrating the 11 cycle burst of clock from the keyboard with an RC circuit between two Schmitt-triggered inverters, which also provides the interrupt for the CPU. He still inverts RCLK though, which I agree is curious, since the ‘595 needs a rising edge and the PS/2 interface provides just that and correctly timed.

This was supposed to be in reply to Darko, but jumped to the end of the list when posted :(

dammit ben eater! pretty soon I won’t have any project on my list that you haven’t made!

maybe I shouldn’t wait a decade before doing them though.

I am looking to design a PS/2 to USB adapter. Does anyone know of a single chip solution or something similar?

yeah, I’ve been doing that many times, using Microchip PICs :)

PS/2 Keyboard (or mouse) to USB host (computer socket) OR

USB Keyboard to PS/2 computer socket?

The PS/2 Device to USB HOST computer is the easier part to do using a uC that has native USB device capabilities like an ATTINY. PICs are good too, but it may take a bit longer to find and modify a library.

Most keyboards are still auto sensing so a physical adaptor works. Some mice are too. If you want something that is likely to be auto sensing then look for a plain keyboard with no multimedia or plain mouse with perhaps a scroll wheel but no more than that.

PS/2 protocols for both keyboard and mouse are simple. USB protocol to the PC is a little harder especially if you want more than a “standard” keyboard or mouse. Likewise – reading and interpreting the responses when playing the role of a USB host (Computer) is quite an interrogation for various compatibilities.

So step 1) lol ask the same question specifying what is the Host and what is the (attached) device.

Is it possible to use an 8251 to decode the serial data of the keyboard to parallel data that’s read by the CPU?