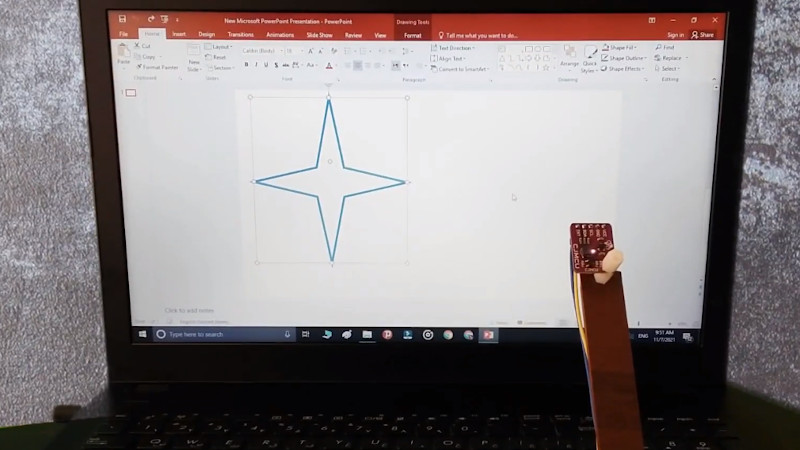

Controlling your computer with a wave of the hand seems like something from science fiction, and for good reason. From Minority Report to Iron Man, we’ve seen plenty of famous actors controlling their high-tech computer systems by wildly gesticulating in the air. Meanwhile, we’re all stuck using keyboards and mice like a bunch of chumps.

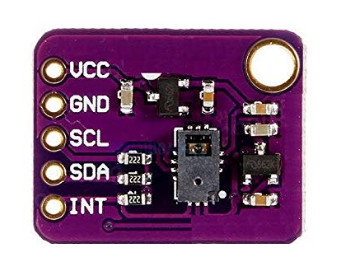

But it doesn’t have to be that way. As [Norbert Zare] demonstrates in his latest project, you can actually achieve some fairly impressive gesture control on your computer using a $10 PAJ7620U2 sensor. Well, not just the sensor, of course. You need some way to convert the output from the I2C-enabled sensor into something your computer will understand, which is where the microcontroller comes in.

Looking through the provided source code, you can see just how easy it is to talk to the PAJ7620U2. With nothing more exotic than a switch/case statement, [Norbert] is able to pick up on the gesture flags coming from the sensor. From there, it’s just a matter of using the Arduino Keyboard library to fire off the appropriate keycodes. If you’re looking to recreate this, we’d go with a microcontroller that supports native USB, but technically this could be done on pretty much any Arduino. In fact, in this case he’s actually using the ATtiny85-based Digispark.
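To give a feel for how little logic is involved, here is a minimal sketch of that switch-based dispatch, written as plain C++ so it runs off-device. The bit positions assume the one-bit-per-gesture flag layout commonly documented for the PAJ7620U2 (bank 0, register 0x43); check them against your datasheet. The `GestureFlag` and `Action` names and the gesture-to-action mapping are hypothetical and not taken from [Norbert]’s code. On the board itself, each `Action` would then be sent as a keystroke through the Keyboard library.

```cpp
#include <cstdint>

// Assumed flag bits (one per gesture), modeled on the PAJ7620U2's
// gesture-flag register; verify the layout against the datasheet.
enum GestureFlag : uint8_t {
  GES_UP            = 1 << 0,
  GES_DOWN          = 1 << 1,
  GES_LEFT          = 1 << 2,
  GES_RIGHT         = 1 << 3,
  GES_FORWARD       = 1 << 4,
  GES_BACKWARD      = 1 << 5,
  GES_CLOCKWISE     = 1 << 6,
  GES_ANTICLOCKWISE = 1 << 7,
};

// Hypothetical actions; on the microcontroller each one would be turned
// into a key press via the Keyboard library.
enum class Action { None, PageUp, PageDown, PrevTab, NextTab, VolumeUp, VolumeDown };

// Map one flag byte to an action. A switch matches exact values only, so a
// byte with several bits set (or no bits set) falls through to None.
Action actionForGesture(uint8_t flags) {
  switch (flags) {
    case GES_UP:            return Action::PageUp;
    case GES_DOWN:          return Action::PageDown;
    case GES_LEFT:          return Action::PrevTab;
    case GES_RIGHT:         return Action::NextTab;
    case GES_CLOCKWISE:     return Action::VolumeUp;
    case GES_ANTICLOCKWISE: return Action::VolumeDown;
    default:                return Action::None;  // forward/backward unused here
  }
}
```

Because the sensor does the recognition itself, the firmware only has to read a byte and branch on it; everything else is deciding which key each gesture should press.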

This actually isn’t the first time we’ve seen somebody use a similar sensor to pull off low-cost gesture control, but so far, none of these projects have really taken off. It seems like it works well enough in the video after the break, but looks can be deceiving. Have any Hackaday readers actually tried to use one of these modules for their day-to-day futuristic computing?

don’t lie lose.

Missing from the summary: this sensor is stationary and watches your hand with a low-res camera (as opposed to motion sensors that you wear).

I have used these in a project for controlling an LED matrix as a low-res arcade game. Back then, the cost was $7 a sensor, so the price has risen a bit. I can’t remember where I got the detailed spec sheet, but I want to say it involved an NDA. The device can be controlled by reading/writing to its registers, and I believe you can get the raw output of the camera, although the frame rate is slow. The gestures work for the most part, with the exception of clockwise and CCW, but the delta needed for direction detection can be quite large despite the narrow angle and field of view. The only solution that improves reliability is using multiple sensors and multiple threads running algorithms. Ultimately, I abandoned the Arduino and went to the ESP32, then life happened and the entire project is just collecting dust in the garage while I wait for more time. It’s definitely an interesting device: it takes care of the gesture detection and keeps that processing on the device, so all you need is a simple switch statement and a wait timer.
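The “wait timer” mentioned in that comment can be as simple as a cooldown that ignores further gestures for a short window after one is accepted. The sketch below is one way to do it, assuming a millisecond timestamp such as Arduino’s `millis()` is passed in; the `GestureCooldown` class and the 500 ms value in the usage note are hypothetical, not from the commenter’s project.

```cpp
#include <cstdint>

// Minimal cooldown ("wait timer"): after a gesture is accepted, any further
// gestures are rejected until cooldownMs has elapsed.
class GestureCooldown {
 public:
  explicit GestureCooldown(uint32_t cooldownMs) : cooldownMs_(cooldownMs) {}

  // nowMs would come from millis() on an Arduino; it is passed in so the
  // logic can be tested off-device. Returns true if the gesture should fire.
  bool accept(uint32_t nowMs) {
    // Unsigned subtraction stays correct when the 32-bit counter wraps.
    if (hasFired_ && (nowMs - lastMs_) < cooldownMs_) {
      return false;  // still inside the wait window
    }
    hasFired_ = true;
    lastMs_ = nowMs;
    return true;
  }

 private:
  uint32_t cooldownMs_;
  uint32_t lastMs_ = 0;
  bool hasFired_ = false;
};
```

In a loop, you would read the flag byte, and only act on it when `cooldown.accept(millis())` returns true, for example with `GestureCooldown cooldown(500);`. Taking the time as a parameter rather than calling `millis()` inside the class keeps it testable on a PC.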

It’s an impressive video, but what’s shown is just gimmicks that don’t demonstrate any practical use. Would it work on an Android phone?

Regards.

This was designed for a PC or laptop. I’m sure it could be used with Android if someone wanted to. As for practical uses, I can see many, specifically for people who suffer from arthritis, etc.

A similar feature was tried on Android. Google’s Project Soli was in the Pixel 4, and it had similar functionality but was written off as gimmicky.