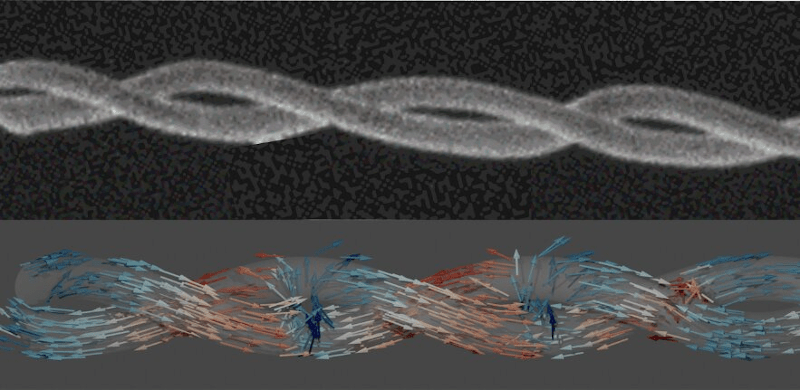

You normally associate a double helix with DNA, but an international team headquartered at Cambridge University used 3D printing to create magnetic double helices that are about 1,000 times smaller than a human hair. Why do such a thing? We aren’t sure why they started, but they were able to find nanoscale topological features in the magnetic field, and they think these will change how magnetic devices work in the future, especially magnetic storage devices.

In particular, the researchers feel this is a step towards practical “racetrack” memory, which stores magnetic information in three dimensions instead of two and offers high density and RAM-like access times. You can read the full paper if you want the gory details.

The magnetic helices form pairs much like actual DNA. You probably won’t be able to 3D print these yourself, since the team used electron beam deposition. However, we were entertained to hear they did the modeling in FreeCAD.

Will this lead to terabytes of main storage for your PC? Probably not directly, but it is a step in that direction. It wasn’t long ago that having 640K of memory and 20 megabytes of hard drive seemed like all you would need. One day, we’ll look back on our quaint computers with 32GB of RAM and terabyte disks and wonder how we ever got along.

Magnets always seem like real magic. No, really.

Is this an article from the past? Don’t we already have 32GB of RAM and terabytes of storage?

“Will this lead to terabytes of main storage for your PC?”

This is where I’m confused. We already have it. Maybe you meant petabytes, or RAM?

I think he meant terabytes of RAM. That said, if this technology is as fast as or faster than RAM and also meets the requirements for mass storage (the big promise is no write-endurance limit, unlike SSDs), then using the same technology for both RAM and mass storage may eliminate the distinction between the two.

“One day, we’ll look back on our quaint computers with 32GB of RAM and terabyte disks”

You don’t look back at them as “quaint” yet.

I do, but that perspective comes from what you are using, which probably depends on your job. Ten years ago I was using machines with 128 processors (well, technically only two UltraSPARC T2 Plus CPUs, so 16 CPU cores in total, each with 8 threads per core) and 128GB of RAM (a Sun Fire T5140).

“Main storage” is RAM, whereas “secondary storage” is permanent.

The article is saying *today’s* 32GB of RAM and terabytes of storage will seem quaint in the future (although that will probably be true with or without “racetrack” memory). The phrase “terabytes of main storage” does seem off; I bet the author meant “terabytes of main memory”.

My problem with “racetrack” memory as main memory is that it is sequential-access, just like its predecessor, magnetic bubble memory. While you can read and write individual bits at roughly the same speed as current RAM, moving a target bit at an arbitrary position to the read/write location can take a hundred times as long. That’s very suboptimal for main memory, but perfectly acceptable for online storage (like HDDs and flash SSDs).
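To make the latency argument concrete, here is a minimal Python sketch of one racetrack modeled as a linear shift register with a single fixed read/write head. The timing constants `SHIFT_NS` and `READ_NS` and the function `access_time_ns` are hypothetical, chosen only for illustration; real devices have their own timings. The sketch only shows that the cost of an access grows with how far the requested bit sits from the head.

```python
SHIFT_NS = 1.0  # hypothetical time to shift the track by one bit position
READ_NS = 1.0   # hypothetical time to sense the bit under the head


def access_time_ns(order, head=0, shift_ns=SHIFT_NS, read_ns=READ_NS):
    """Total time to read the bits at the given indices, in order.

    The head never moves; instead the whole track is shifted left or right
    until the requested bit lies under it, so each access costs one shift
    per position of distance plus one read.
    """
    total = 0.0
    for index in order:
        total += abs(index - head) * shift_ns + read_ns
        head = index  # the requested bit now lies under the head
    return total


n = 1000
print(access_time_ns(range(n)))           # 1999.0: 999 one-step shifts + 1000 reads
print(access_time_ns([n - 1, 0, n - 1]))  # 3000.0: three 999-step shifts + 3 reads
```

With these made-up constants, streaming through the track costs about 2 ns per bit, while bouncing between its two ends costs about 1,000 ns per access. That gap is why a sequential-access medium suits streaming storage better than the random access patterns main memory sees.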

Perhaps we could weave it like thread into a block and address it in three dimensions.

Technically, there’s nothing to stop you using laser light to erase the memory. With the right frequency, an electromagnetic wave could be used to read and write to it too. If the material had the correct spacing between strands, the beat frequency between two interacting waves could be used to do the work, potentially greatly improving access times.

I skimmed the full paper, understanding almost none of it. The authors didn’t actually talk much about memory technology; understandably, they seemed more interested in the “nanoscale topological features in the magnetic field” that they discovered and in the possibility of manipulating these features to create fast, reconfigurable, non-von Neumann computing and processing entities.

“Racetrack memory” is, I guess, the new term for bubble memory, mercury delay lines and the like. Basically, you have a circulating loop of bits with a read/write “head” that can read or write a bit as it passes by. An example in the Wikipedia article had access times ranging from 10 ns to 1,000 ns for the magnetic memory. A glance at the references section showed that research is ongoing to narrow that range much, much further.
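The circulating-loop access pattern described above can be sketched in a few lines of Python: bits move in one direction only, so a bit that has just passed the head must travel all the way around again. This is a minimal sketch assuming a hypothetical fixed time per position (`STEP_NS`) and a hypothetical loop length; the names are illustrative and are not taken from the Wikipedia article or any real device.

```python
STEP_NS = 10.0  # hypothetical time for the loop to advance one bit position


def wait_ns(target, head_pos, loop_len, step_ns=STEP_NS):
    """Time until bit `target` next reaches a fixed head on a one-way loop.

    `head_pos` is the index of the bit under the head right now. Bits only
    move forward, so a target that has just passed must go all the way round.
    """
    # With a positive loop_len, Python's % always gives 0..loop_len-1.
    steps = (target - head_pos) % loop_len
    return steps * step_ns


def wait_stats_ns(loop_len, step_ns=STEP_NS):
    """Best, worst and mean wait over every possible target bit."""
    waits = [wait_ns(t, 0, loop_len, step_ns) for t in range(loop_len)]
    return min(waits), max(waits), sum(waits) / loop_len


print(wait_stats_ns(100))  # (0.0, 990.0, 495.0)
```

In this toy model, a 100-position loop gives waits anywhere from 0 ns to 990 ns, so a wide spread of access times can arise purely from where the requested bit happens to be when the request arrives.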

“It wasn’t long ago that having 640K of memory and 20 megabytes of hard drive seemed like all you would need”

Merry Christmas, but please don’t peddle computing myths; this one has never been true. Bill Gates never claimed it, for a start[1]:

“I’ve said some stupid things and some wrong things, but not that. No one involved in computers would ever say that a certain amount of memory is enough for all time.”

Also, Gordon Bell’s 1978 paper about the PDP-11 makes it clear that memory space is never enough[2]:

“The biggest (and most common) mistake that can be made in a computer design is that of not providing enough memory bits for addressing and management. The PDP-11 followed this hallowed tradition by skimping on address bits…”

Also, even from my own experience, people were complaining about the 640kB limit for the IBM PC in 1984 or 1985.
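The address-bit point comes down to simple arithmetic: n address bits reach 2^n distinct byte addresses. The widths below are well-known figures that do not appear in the comment itself: 16-bit addresses on the PDP-11, and the 20-bit physical address bus of the Intel 8088 in the original IBM PC, whose top 384 KiB were reserved for video memory and ROMs, leaving the familiar 640 KiB. The helper `addressable_bytes` is a hypothetical name used only for this sketch.

```python
KIB = 1024  # bytes per kibibyte


def addressable_bytes(address_bits):
    """Distinct byte addresses reachable with an address of the given width."""
    return 2 ** address_bits


pdp11_kib = addressable_bytes(16) // KIB   # 16-bit addresses on the PDP-11
ibm_pc_kib = addressable_bytes(20) // KIB  # 20-bit address bus of the 8088
reserved_kib = ibm_pc_kib - 640            # space above conventional memory

print(pdp11_kib)     # 64
print(ibm_pc_kib)    # 1024
print(reserved_kib)  # 384
```

Each extra address bit doubles the reachable memory, which is why skimping on a few bits, as Bell describes, boxes a design in so quickly.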

[1] https://www.computerworld.com/article/2534312/the--640k--quote-won-t-go-away----but-did-gates-really-say-it-.html

[2] https://gordonbell.azurewebsites.net/CGB%20Files/What%20Have%20We%20Learned%20From%20the%20PDP-11%201977%20c.pdf