Bluster around the advent of self-driving cars has become a constant in the automotive world in recent years. Much is promised by all comers, but real-world results – and customer-ready technologies – remain scarce on the street.

Today, we’ll dive in and take a look at the current state of play. What makes a self-driving car, how close are the main players, and what can we expect to come around the corner?

Levels of Autonomy

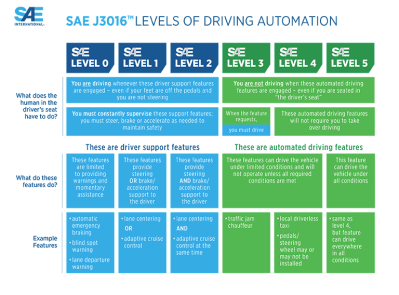

The phrase “self-driving car” may seem straightforward, but it can mean many different things to different people. Technological limitations also play a part, and so the Society of Automotive Engineers stepped up to create classifications that make clear what any given autonomous or semi-autonomous car is capable of.

The chart above gives a full breakdown, but if you’re in a hurry, think of it like this. Level 0 cars have no automation, while basic features like adaptive cruise control come under Level 1. Level 2 systems can handle steering and speed control for you, but you’re expected to remain vigilant for hazards at all times. Level 3 systems take things up a notch, letting you take your eyes off the road while the car drives itself in designated areas and conditions. Level 4 systems add the ability for the car to get itself to safety if something goes wrong, without relying on the driver. Level 2, 3 and 4 systems are all conditional, only working in certain areas or under certain traffic or weather conditions. Meanwhile, Level 5 vehicles remove those limitations entirely, and can basically drive themselves anywhere a human could.
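To make the split of responsibilities between driver and car concrete, here is a minimal sketch that encodes the summary above as a lookup table. It is a simplified reading of the levels, not the text of SAE J3016, and the class and field names are our own hypothetical choices.

```python
from dataclasses import dataclass
from enum import IntEnum


class SAELevel(IntEnum):
    """Simplified SAE J3016 driving-automation levels."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


@dataclass(frozen=True)
class Responsibilities:
    system_steers_and_controls_speed: bool  # both at the same time
    driver_must_supervise: bool             # eyes on the road at all times
    driver_is_fallback: bool                # human must take over when asked
    works_everywhere: bool                  # no area/traffic/weather limits


# Hypothetical summary table following the description above.
RESPONSIBILITIES = {
    SAELevel.NO_AUTOMATION: Responsibilities(False, True, True, False),
    SAELevel.DRIVER_ASSISTANCE: Responsibilities(False, True, True, False),
    SAELevel.PARTIAL_AUTOMATION: Responsibilities(True, True, True, False),
    SAELevel.CONDITIONAL_AUTOMATION: Responsibilities(True, False, True, False),
    SAELevel.HIGH_AUTOMATION: Responsibilities(True, False, False, False),
    SAELevel.FULL_AUTOMATION: Responsibilities(True, False, False, True),
}


def eyes_off_allowed(level: SAELevel) -> bool:
    """True if the driver may stop watching the road while the system is engaged."""
    return not RESPONSIBILITIES[level].driver_must_supervise


for level in SAELevel:
    print(level.value, level.name, "eyes off OK" if eyes_off_allowed(level) else "supervise")
```

The jump that matters most to drivers shows up between Level 2 and Level 3, where supervision stops being required, and between Level 3 and Level 4, where the human stops being the fallback.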

Where We’re At

As it stands, the majority of new cars on the market are available with some form of Level 1 automation, usually adaptive cruise control or perhaps some basic lane keeping assist. Typically, however, talk of self-driving covers the proliferation of Level 2 systems now in the marketplace. Tesla, GM, and Ford are some of the big players already shipping product in this space. Honda and Mercedes have pushed further with Level 3 systems, on the market and just around the corner respectively. Meanwhile, Waymo is aiming even higher.

Tesla

Tesla’s Autopilot and “Full Self Driving” systems have been roundly criticized for the manner in which they have been marketed. The systems fall strictly under the Level 2 category, as the driver is expected to maintain a continuous lookout for hazards and be prepared to take over at any moment.

Sadly, not everyone takes this seriously, and there have been fatal crashes in Teslas running Autopilot where nobody was in the driver’s seat. Water bottles and other devices are often used to trick the system into thinking someone is still holding the wheel. As of April 2021, at least 20 deaths have occurred in Teslas driving under Autopilot, with the system known for driving directly into obstacles at speed.

Tesla has also made the controversial decision to start phasing out radar on its vehicles. The company plans to use cameras as the sole sensor for its self-driving systems, with one argument being that humans have made do with only their eyes thus far.

Tesla has pushed forward with the technology, though, releasing its “Full Self Driving” beta to limited public testing last year. The system can now handle driving on highways and on surface streets. It also has the ability to work with the navigation system, guiding the car from highway on-ramp to off-ramp and handling interchanges and taking necessary exits along the way.

Regardless of the updates, Tesla’s system is Level 2 and still requires constant vigilance from the driver, and hands on the wheel. Calls have been made to rebrand or restrict the system, with plenty of footage available online of the system failing to recognize obvious obstacles in its path (language warning).

GM

GM has been selling vehicles equipped with its Level 2 Super Cruise system for some time, and Consumer Reports has lauded it as safer than Tesla’s offerings. The system monitors the driver directly with a camera to ensure attentiveness, and the latest versions coming in 2022 allow fully automated lane changes and even support towing. The system lets the driver go hands-free, but attention must still be paid to the road, or the system will disengage and hand control back to the driver.
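The camera-based monitoring described above amounts to an escalation loop: watch the driver, warn when their eyes leave the road, and hand back control if they don't respond. The sketch below shows that idea as a tiny state machine. The thresholds, sample rate and names are hypothetical placeholders, not GM’s actual calibration or software.

```python
from enum import Enum, auto


class AssistState(Enum):
    ACTIVE = auto()
    WARNING = auto()
    DISENGAGED = auto()


# Hypothetical thresholds, not GM's real values.
WARN_AFTER_S = 4.0       # eyes off the road this long -> warn the driver
DISENGAGE_AFTER_S = 8.0  # still inattentive -> hand control back


def monitor(samples, dt=0.5):
    """Step through camera samples (True = eyes on road) and return the state after each one."""
    state = AssistState.ACTIVE
    inattentive_for = 0.0
    history = []
    for eyes_on_road in samples:
        if state is AssistState.DISENGAGED:
            # Stays off until the driver re-engages it (not modelled here).
            history.append(state)
            continue
        inattentive_for = 0.0 if eyes_on_road else inattentive_for + dt
        if inattentive_for >= DISENGAGE_AFTER_S:
            state = AssistState.DISENGAGED
        elif inattentive_for >= WARN_AFTER_S:
            state = AssistState.WARNING
        else:
            state = AssistState.ACTIVE
        history.append(state)
    return history


print([s.name for s in monitor([False] * 10 + [True])])
```

Looking back at the road during the warning phase resets the timer, while ignoring the warning long enough drops the system out entirely.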

Super Cruise can be used on over 200,000 miles of divided highways across the USA. However, unlike Tesla’s offering, GM’s cars won’t be driving themselves on city streets until the release of Ultra Cruise in a few years’ time. The aim is to cover 2 million miles of US and Canadian roads at launch, with the Ultra Cruise system relying on lidar and radar sensors as well as cameras to achieve safe driving in urban environments.

Ford

Ford’s BlueCruise system has only just hit the market, with functionality similar to GM’s early Super Cruise system. Ford lags behind its main American rival here, as BlueCruise can only be used on 130,000 miles of US highways. It also lacks more advanced features, such as the automatic lane changes that GM has included in later revisions of its software.

BlueCruise also lacks user interface features like the steering wheel light bar of GM’s system, which improves clarity as to the system’s current state of operation. Fundamentally though, it’s a first step from the Blue Oval with more sure to come in following updates. As it stands, it’s a basic system that combines lane keeping and adaptive cruise control but doesn’t yet deliver much more than that.

Mercedes and Honda

Mercedes and Honda are the first two companies to bring Level 3 systems to market. These let the driver kick back while the system is active, though when leaving geofenced areas or in anomalous situations, the driver can be asked to take over in a timely fashion.

Honda’s system was first to launch, and has been available on the Honda Legend since earlier this year. The company’s SENSING Elite technology enables the Traffic Jam Pilot feature, which takes over driving tasks in heavy traffic on an expressway. Under these limited conditions, the driver can watch videos on the navigation screen or undertake other tasks without having to pay attention to the road ahead.

Mercedes will deliver its Drive Pilot system next year, initially enabled for 13,191 kilometers of German motorways. The system will similarly work during high-density traffic, up to a legally-permitted maximum of 60 km/h. The driver can then enjoy “secondary activities” such as browsing the internet or watching a movie.

Both systems rely on a combination of sensors, with Mercedes specifically noting the use of lidar, radar and cameras in its system. In both cases, drivers must remain ready to take over if the system requests it, but they are not required to maintain the constant vigilance demanded by Level 2 systems. This is achieved largely by limiting the systems to the more predictable environment of a congested motorway.
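The “limited conditions” trick boils down to a gate that only offers the Level 3 feature when every condition of its operating domain is met. Here is a minimal sketch of such a gate. The 60 km/h ceiling comes from the Drive Pilot description above, but the other checks and all names are hypothetical simplifications, not either company’s real logic.

```python
from dataclasses import dataclass

MAX_SPEED_KMH = 60.0  # legal ceiling cited for Drive Pilot on German motorways


@dataclass
class Conditions:
    on_mapped_motorway: bool  # inside the geofenced, approved road network
    speed_kmh: float
    dense_traffic: bool       # e.g. following slow-moving traffic
    sensors_healthy: bool     # lidar, radar and cameras all reporting


def blockers(c: Conditions) -> list[str]:
    """List every reason the Level 3 feature cannot be offered right now."""
    reasons = []
    if not c.on_mapped_motorway:
        reasons.append("outside approved road network")
    if c.speed_kmh > MAX_SPEED_KMH:
        reasons.append("too fast")
    if not c.dense_traffic:
        reasons.append("no congested traffic")
    if not c.sensors_healthy:
        reasons.append("sensor fault")
    return reasons


def level3_available(c: Conditions) -> bool:
    return not blockers(c)


print(blockers(Conditions(True, 80.0, True, True)))
```

Keeping the domain this narrow is what lets the driver look away: inside it, the situations the car has to handle are few and predictable.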

Waymo

As a technology company rather than an automaker, Waymo has had little incentive to rush a product to market. The company has in fact abandoned development of Level 2 and Level 3 systems due to the commonly cited issues with vigilance tasks. Even in the company’s Level 3 efforts, it had issues with staff falling asleep during testing, largely because they had little to do while the car drove itself. Tesla, by contrast, has forged ahead with such systems, throwing caution to the wind and attracting an NHTSA investigation. CEO John Krafcik noted that Waymo decided such a system would draw too much liability.

Instead, the company is forging ahead with a system that will reach Level 4 or better, aiming to eliminate the contentious issue of driver handoffs. As of 2017, the company ran a system in its modified Chrysler Pacifica fleet that had a very simple interface. A button could be pressed to start travelling, and another would instruct the car to pull over safely.

The company has since started providing a driverless robotaxi service to a limited clientele as it tests its vehicles. Its cars regularly take trips with nobody behind the wheel, though humans monitor the trips from a remote command center to help out in case the cars get confused or run into problems. The cars operate in specific geofenced areas during the development period, and the technology is still a long way from ready for roll-out in all road situations.

Summary

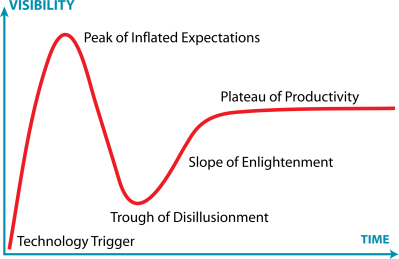

As it stands, self-driving technology will be sitting around the Trough of Disillusionment for many. Despite what we were all promised, self-driving systems remain heavily limited at this stage.

However, the tech is slowly climbing towards the point where it can be genuinely useful. Efforts like those from Mercedes and Honda already relieve the driver of the painful vigilance task and let them take their mind off driving, the primary goal of the technology from the outset. Other systems do little more than try and keep a car between lane lines while requiring the human to continually scan for hazards.

It will be some time before the technology reaches maturity and we’re letting our driverless cars whisk us away without a second thought. Meanwhile, thousands of engineers are working hard every day to make that a reality.

>self-driving technology will be sitting around the Trough of Disillusionment for many

How about those commenters who were proselytizing years ago that it’s “already safer”, despite the complete lack of evidence, or on the strength of irrelevant statistics? Where on the hype cycle do they sit?

Tesla has logged enough Autopilot (their term) miles to answer this question. Statistically speaking, the results are very strong, with a large effect.

Tesla on autopilot has 1/9 the crash and fatality rate as an average human driver.

As a society we’re coming to grips with what autodriving actually means, and will be coming up with common-sense rules and behaviour based on the ongoing analysis of individual cases… but note that self driving is already much safer than human driving, so statistically speaking we can note and afford some failures without thinking that the entire system is bad.

Also of note: once a problem is discovered, Tesla can update all vehicles immediately and simultaneously. As we learn more of how self driving should operate by analyzing the failures, the system will get better over time.

(I’d link to the statistics, but the HAD system will delay a post w/link until it’s too late to matter.)

Tesla does not open their data for third-party review. I have not seen any statistical analysis that is able to conclude that Teslas are safer than other vehicles with modern safety features.

When Tesla, or specifically Musk, complains that he doesn’t get enough credit for the lives he has saved, it is because he doesn’t offer any proof of this. Until Tesla is willing to allow other parties to analyze its data, it is appropriate to treat Musk’s claims like most of his tweets.

QCS successfully sued NHTSA under the Freedom of Information Act to obtain Tesla’s data, and was able to conduct a third-party analysis of it. The results are about what you would expect: autopilot increased accidents by 60 percent. https://hackaday.com/2019/03/04/does-teslas-autosteer-make-cars-less-safe/

I honestly doubt you have read the article.

Autopilot is not a self-driving system, though. It’s a driving assistant, so you’re comparing human vs human+computer.

“Tesla on autopilot has 1/9 the crash and fatality rate as an average human driver.”

Retrospective studies suffer from confounding, leading to unknown biases. Drivers don’t use Autopilot in situations where they don’t feel comfortable. There are plenty of reviews of Tesla’s FSD with stories of the driver taking over because things didn’t feel safe.
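A toy calculation shows how that selection effect alone can manufacture a big “safety” gap. In this sketch, humans and Autopilot have exactly the same crash rate on each road type. The only difference is that Autopilot miles come from easy highway driving. All the rates and mileage splits are made-up illustrative numbers, not real data.

```python
# Hypothetical crash rates per million miles, identical for humans and Autopilot.
RATE = {"highway": 1.0, "city": 4.0}


def pooled_rate(miles_by_road: dict[str, float]) -> float:
    """Crashes per million miles when all of a driver's miles are lumped together."""
    crashes = sum(RATE[road] * miles for road, miles in miles_by_road.items())
    return crashes / sum(miles_by_road.values())


human = {"highway": 40.0, "city": 60.0}       # millions of miles, mixed roads
autopilot = {"highway": 100.0, "city": 0.0}   # engaged only where it feels easy

print(pooled_rate(human))                           # 2.8
print(pooled_rate(autopilot))                       # 1.0
print(pooled_rate(human) / pooled_rate(autopilot))  # apparent "safety" ratio
```

Neither “driver” is better on any road, yet the pooled figures make Autopilot look 2.8 times safer. A fair comparison has to match miles by road type, weather and so on before dividing.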

“Tesla can update all vehicles immediately and simultaneously.”

Why is this a good thing? Why would it help my car to be able to recognize driving patterns in California if I’m on the East Coast?

“As we learn more of how self driving should operate by analyzing the failures, the system will get better over time.”

It’s a system. It has finite capacity. It cannot continually get better, and feeding it failure data is just feeding it biased retrospective data.

Fix the infrastructure, not the vehicle.

“Fix the infrastructure, not the vehicle.”

This times 100. Self-driving vehicles are just one part; the roadways need to be fitted for communicating with vehicles, to route around jams etc.

And cars in urban centers are a problem, whose solution is not MORE CARZ. It’s urban design where people take priority over cars.

Why should we pay extra money to make less reliable vehicles work?

You don’t need to add features to help autonomous cars. You need to get rid of confusing and dangerous interchanges and signage to help everyone.

Again, why should we spend the resources to fix something that for the most part serves its purpose just fine?

People can navigate our roads just fine, except for some few places that can be tricky at times. AIs would need a lot of help that people just don’t, and arguing in terms of helping people forgets that there are diminishing returns – you can spend multiple times as much money on the road infrastructure and for the most part there won’t be any measurable reduction in accident rates. You’re just spending a ton of money.

“Again, why should we spend the resources to fix something that for the most part serves its purpose just fine?”

Uh, what are you talking about? DOTs around the US (and I’d have to guess in other countries too) are continually replacing/redoing interchanges that are dangerous, and lawsuits get filed regarding confusing signage all the time.

Plus, infrastructure repair/replacement happens all the time. It has to! If autonomous vehicle research identifies signage standardization/interchange design changes that make things simpler, it just helps everyone.

“People can navigate our roads just fine, except for some few places that can be tricky at times.”

Right, and those disproportionately contribute to accident rates. Fix those. If autonomous vehicle designers want to make their vehicles better, they should be interacting with DOTs to identify interchanges/intersections which need to be made clearer. Or (gasp) they could, y’know, contribute money themselves to do it. Kinda like Domino’s did by fixing potholes to reduce the extra cost to them.

Anyone who lives near a metropolitan area (or, probably, anywhere) probably knows of major accident intersections.

> Fix those.

Precisely. That was the point: we don’t need to spend any extra money on “baby proofing” the infrastructure for autonomous vehicles – and that goes for planning and designing as well. Why prop up an inferior technology just for the sake of itself when the existing setup works fine? The AI is supposed to be improving anyways so the investment would be pointless.

“Tesla on autopilot has 1/9 the crash and fatality rate as an average human driver.”

I’m an average human driver. I’ve never been involved in a fatal crash, and I’ve only been cited as a contributing cause in one (low-speed, parking lot) incident: we backed into each other, and fault was split 33.3%/33.3%/33.3% among us two drivers and the design of the parking lot.

Tesla’s Autopilot is a psychotic mass-murderer by comparison.

In some studies I’ve read, while the driver gets the blame in about 90% of fatal accidents, driver error is actually the direct cause of the accident only a little over half the time. “Human error” decides whether the insurance pays out, so there’s a strong bias toward blaming the driver for matters they had little control over.

Then “contributed to” becomes “caused by” when the statistics are read by people who really want the robot car to be better, but it’s simply not plausible that the robot has a 1/9 accident rate compared to humans when the fatal accidents that happen to humans are not caused by and could not be prevented by the driver half the time. The claimed “signal” is below the background noise.

“How about those commenters who keep proselytizing that it’s “already safer” years ago”

Well, they kinda are? Relative accident risk can vary a ton: there are definitely categories of drivers that have like, 2-3x the relative risk, although there aren’t a lot of them. So in some sense we’d be better off replacing those drivers. At some point it might make sense to make a self-driving license harder to obtain (which will screw certain economic brackets, but it’s not like that’s ever stopped things).

I actually worry a ton more about the crazy commenters who continually say “oh, such-and-such AI technology will only get better as they get more data.” Which is crazy. The networks that are being trained have finite capacity. You add more data to them, they’ll be forced to generalize more, which makes them less safe than human drivers who know stuff like “oh, everyone takes this curve way too fast, you need to watch it around here” or “it’s rained recently and this road tends to get slick.”

The other problem is that right now the fundamental decision tree’s wrong. It’s insane that when the network sees something that confuses it, it… keeps going. When you come across a section of road that doesn’t seem right, you freaking pull over and figure it out.

But that would reveal how fundamentally stupid the AI is, because it would just refuse to go half the time.
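The “pull over when confused” policy argued for above can be sketched as a simple confidence gate. This assumes the perception stack exposes a single confidence score between 0 and 1, which is a big simplification; the thresholds and names are hypothetical, and real systems are far more involved.

```python
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"
    SLOW_DOWN = "slow down"
    PULL_OVER = "pull over"  # minimal-risk manoeuvre


# Hypothetical thresholds on a perception confidence score in [0, 1].
SLOW_BELOW = 0.8
STOP_BELOW = 0.5


def choose_action(confidence: float) -> Action:
    """Degrade gracefully instead of pressing on when the scene is unclear."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    if confidence < STOP_BELOW:
        return Action.PULL_OVER
    if confidence < SLOW_BELOW:
        return Action.SLOW_DOWN
    return Action.CONTINUE


def refusal_rate(confidences: list[float]) -> float:
    """Fraction of moments in which the car would give up and pull over."""
    if not confidences:
        raise ValueError("need at least one sample")
    stops = sum(choose_action(c) is Action.PULL_OVER for c in confidences)
    return stops / len(confidences)


print(refusal_rate([0.9, 0.4, 0.3, 0.95]))
```

The refusal rate is exactly the number the comment suggests would be embarrassing: the lower the system’s confidence on ordinary roads, the more often it would stop.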

Systems like Tesla FSD are gambling on odds that are already stacked in their favor by leaving all the difficult conditions and dangerous roads out of the picture. Whatever remains is rare enough that the system can actually fail at an alarmingly high rate and still look rosy.

For example, only about 6% of road fatalities occur on motorways/highways where FSD can or should be used in the first place. Exclude adverse weather conditions and drunk driving, and the odds of getting yourself into an accident are slim indeed. 99.999% of the time all the car has to do is keep to the lane, maintain speed and stop when the other cars stop. This was already accomplished in 1986. It takes less smarts than a nematode worm has.

Of course, there is a huge gap between something that can technically drive a car, and something which can do so reliably and safely.

You’re looking at something with the relative intelligence of a small mammal, which is literally billions of times more complex than anything you can run with a couple hundred watts in a laptop class CPU inside a car:

“Accurate biological models of the brain would have to include some 225,000,000,000,000,000 (225 million billion) interactions between cell types, neurotransmitters, neuromodulators, axonal branches and dendritic spines, and that doesn’t include the influences of dendritic geometry, or the approximately 1 trillion glial cells which may or may not be important for neural information processing.”

So future cars will run on rat brains?

I was thinking more along the lines of a chimpanzee or a particularly smart dog, but rats might do.

All it needs to do is drive a car. It doesn’t need to do all the other super complex stuff that “animals” have to do – eat, drink, play, mate etc etc. You’re massively exaggerating the performance required. Biological “brains” are very general purpose, this is very specific.

>You’re massively exaggerating the performance required.

“Eat, drink, mate, play”, is not “super complex”. It’s the edge cases that are difficult, and where simpler animals fail like the AI fails. For example, putting up a cardboard silhouette of a hawk above a chicken pen makes the hens behave as if there’s a real hawk up there, and they stop functioning normally. Such failures of response in nature simply cause some of the animals to die, which isn’t a problem for the species as long as enough individuals survive. It would be a problem for us because we want almost flawless performance out of a self-driving car.

Again, an earthworm will “drive a car” if you wire it up to the controls and inputs somehow; it has no hope of navigating human environments safely. You’re massively under-estimating the complexity of the problem.

Yup. And the reason it’s so effective marketing is that it’s fear-driven. People drive a ton. Everyone knows someone who’s died in an accident. So an accident-free future sounds great.

Except it’s crap. Humans are, surprisingly, exceptionally good drivers. The majority of drivers go more than 10 years without an accident. Insurers desperately try to get more information because measuring raw “accident rate” for humans is like 90% Poisson noise.
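The “Poisson noise” point can be made concrete with the law of total variance for a mixed Poisson process: the variance in observed accident counts is the Poisson part (the mean count) plus the part due to real differences in drivers’ risk. This sketch assumes a hypothetical population in which 10% of drivers have three times the risk of everyone else; the rates are illustrative, not insurer data.

```python
import math

# Hypothetical driver population: (share of drivers, accidents per year).
GROUPS = [(0.9, 0.05), (0.1, 0.15)]  # a minority with 3x the risk


def noise_fraction(years: float) -> float:
    """Share of the variance in observed accident counts that is pure Poisson noise."""
    mean_rate = sum(p * lam for p, lam in GROUPS)
    var_rate = sum(p * (lam - mean_rate) ** 2 for p, lam in GROUPS)
    poisson_var = mean_rate * years     # E[Var(N | lambda)] = E[lambda] * T
    signal_var = var_rate * years ** 2  # Var(E[N | lambda]) = Var(lambda) * T^2
    return poisson_var / (poisson_var + signal_var)


def share_accident_free(years: float) -> float:
    """Probability that a randomly chosen driver has zero accidents over `years`."""
    return sum(p * math.exp(-lam * years) for p, lam in GROUPS)


print(f"{noise_fraction(3):.0%}")         # 96%
print(f"{share_accident_free(10):.0%}")  # 57%
```

Under these assumptions a majority of drivers do go a decade accident-free, and over a three-year record almost all of the spread between drivers is chance rather than skill.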

It’s so weird. Instead of not drinking, not texting, and going at reasonable speeds, people are willing to turn their lives over to something with drastically worse reasoning.

It’s not so weird if you have money and/or emotional investment in the company.

AI has gone through multiple hype cycles over the years without reaching the plateau of productivity because it lacks the computing power (and energy efficiency) and a solid scientific background or a “theory of intelligence” to begin with. It has narrow real-world applications and even where successfully used, it has very obvious flaws. E.g. Alexa telling a little kid to put a penny into a power socket.

If a hype cycle is strong enough while the actual development is very slow, you get massive overshoot and oscillation with multiple false starts, conspiracy theories (“who killed the electric car”), personality cults, repeated “breakthroughs” that turn out to be more of the same, etc. There should be an update to the Gartner cycle: technologies which keep “ringing” back because the people from the top of the previous hype cycle go, “This time I’m sure of it!” and get taken in by hucksters like Elon Musk.

“AI has gone through multiple hype cycles over the years”

It’s really because AI’s not a thing, and it’s insane that people present it as a thing. This is what you’re saying when you say there’s no “theory of intelligence” to begin with.

And there’s a reason for it: because developing any kind of real intelligence takes time. Like, physical time. You can speed up computations all you want – you can’t speed up days, or chemistry, or physics. And there’s only so much abstraction you can do to a problem. And once you try to make the problem *specific*, then it’s not what you want in the first place. Now your workforce isn’t predictable and takes years to develop, which you already have.

Which is, of course, why the idea of being “scared” of artificial intelligence is insane. Skynet doesn’t work because a single central intelligence is always going to lose versus seven billion independent ones.

Touchstone question, the answer to which illuminates this topic…

“When (not if) I or my property is damaged by such a vehicle, do I sue (a) the driver (b) the car company (c) the algorithm designer/implementor (d) some other entity?”

Generally speaking for safety-certified devices, engineers are not responsible for the end result.

This arises from the certification process, where the government certifying agency makes up all kinds of rules and “best practices” for design and testing. If the company complies with the rules to the satisfaction of the regulating agency, then neither the company nor the engineers are responsible for accidents. The design was considered “best possible engineering” and deemed safe for public use.

(Problems arise when those practices weren’t followed, as with GM ignition problems and various Airbus defects. As an engineer, so long as you are neither negligent nor fraudulent, you’re safe.)

I think in this case you would apply to the insurance of the driver for compensation, and the insurance company would probably pay without a fight. Autodrive is safer than human driving, so it would be cheaper for the insurance company to encourage FSD and simply pay claims from the accidents. It will probably be illegal to autodrive without appropriate insurance.

The same thing comes up with traffic tickets: if the car was on autodrive and is pulled over, who pays the ticket?

I think overall the concept of citing for traffic violations will go away entirely, or perhaps a cop will simply note the violation and aggregate information will be sent back to the manufacturer to help correct defects.

Or maybe the ticket will be filed with the insurance company, and *they* will pay… and also see that appropriate fixes are made with the manufacturer.

So the strategy for successful murder is to do it with a self-driving car and blame the manufacturer. What’s next, commit murder with an electric knife and claim the knife was defective?

Thing is, self-driving cars will be safer because they will drive like your Uncle Harry on a Sunday afternoon: never speeding, stopping on yellows, never bumpertailing, slow, careful lane-changes…

Human drivers in other vehicles are gonna hate ’em.

So you *enjoy* cars blowing past you, a car blowing through a red light that you can’t see, and other cars a foot from your bumper?

I don’t /mind/ people driving faster or slower than me. That’s their business.

Good post!

I was, however, expecting a full review, and initiatives in Asia are missing. A few months ago I read a review about robotaxis in China:

https://www.eetimes.com/chinas-robotaxis-hit-the-road/

Yeh indeed, already in Shanghai for some years now? This year Seoul?

I want a Dumb car, thank you very much. One that doesn’t take 1500 microchips to do what used to require *zero* chips. OK, in the interest of preserving the atmosphere we all breathe, maybe a chip or two that adjusts the timing for an internal combustion engine, or keeps the lithium batteries from exploding for an electric. A backup camera is probably a good thing. But brake lights should not require network addresses. Doors should not decide when they feel like locking. I’d be a lot happier if I knew that simple physics connected my steering wheel to the actual wheels. The absolute last thing I want is “car as a service”, some idiotic robotic rent-a-taxi that might or might not cooperate with my wishes.

All I see with the recent trends is increasing corporate empowerment. At some point very soon, it’s going to be cheaper to own a *horse* again.

Me too. It’s all rentware and spyware these days. There is huge benefit to self-driving for these megacorps, as it will allow them to monopolise personal transport. They’ll bend their statistics whatever way they need to make the politicians get those pesky humans away from the steering wheel.

If I had the few bob I would buy a more minimalist car like a Caterham

The problem is that you all think you need BMWs and Audis, when in reality you should be voting with your wallets and buying Dacias and Skodas. They still have hand cranked windows and cost a quarter of the money.

Oh but oops, such cars are being outlawed because they don’t conform to new government regulations:

https://www.sae.org/news/2019/04/eu-to-mandate-intelligent-speed-assistance

Starting in 2022 all cars must have “intelligent speed assistance”, which is not supposed to be a speed limiter, but you can only temporarily override it. In other words, it’s a mandatory speed limiter. Also included are a bunch of other requirements such as event data recorders, so you can’t avoid a car that is stuffed full of computers that are dependent on external systems to tell you how, when, and where you can drive it.

All new cars, that is.

Wow how soon we forget the immense cost of removing horse poop from the roads, it’s like they don’t teach history at all any more.

Don’t worry, at this rate you will talk yourself into fully automatic vehicles in a few more paragraphs.

This is a clear example of how dangerous Tesla’s so called “Full Self Driving” is. Please watch and inform everyone you know about it. It doesn’t work as you expect it to https://youtu.be/SVuBBAWbOTk

I’m all for autonomous vehicles as long as they have their own roads with slots in them for the guide pegs – like slot car racing.

Humans are apparently perfect drivers, they never allow their vehicles to depart from the highway for any reason, so they do not need things like this.

1.34 fatalities per 100 million miles driven is damn close to being perfect.

If you assume an average speed of 60 miles per hour, that represents an MTBF of 1.24 million man-hours. It is very hard to build any machine or system of machines that reaches that sort of reliability against critical errors, because it represents one critical error for every ~140 years of operation.

A “good” computer will have an MTBF of around 100,000 hours, which means simple hardware failures would cause more accidents even if the AI itself were as good a driver as any person.
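The arithmetic behind those two figures is easy to check. This sketch uses the 1.34 fatalities per 100 million miles, the 60 mph average and the 100,000-hour computer MTBF quoted above. Like the comment, it treats every hardware failure as critical, which is a worst-case framing.

```python
FATALITIES_PER_100M_MILES = 1.34  # figure quoted above
AVG_SPEED_MPH = 60.0              # the commenter's assumption
COMPUTER_MTBF_HOURS = 100_000     # "good" computer, per the comment
HOURS_PER_YEAR = 24 * 365.25

miles_per_fatality = 100e6 / FATALITIES_PER_100M_MILES
human_mtbf_hours = miles_per_fatality / AVG_SPEED_MPH

print(f"{human_mtbf_hours:,.0f} hours")                   # 1,243,781 hours
print(f"{human_mtbf_hours / HOURS_PER_YEAR:.0f} years")   # 142 years
print(f"{human_mtbf_hours / COMPUTER_MTBF_HOURS:.1f}x")   # 12.4x
```

On these numbers, the human benchmark is roughly twelve times longer between critical failures than the quoted hardware MTBF, before the driving software makes a single mistake.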

https://www.mdpi.com/2071-1050/12/19/8244/htm

>Vehicle breakdowns accounted for about 30% of all types of incidents in the state of New South Wales (NSW), Australia, 64% of all incidents on a motorway in the United Kingdom [31], and 32% of total incidents on urban freeways in South East Queensland, Australia

These “incidents” aren’t usually fatal, because the driver is able to bring the vehicle safely to a stop. If the driver IS the vehicle that breaks down, the passengers are unlikely to do anything before it crashes into something.

I’m for them because they make lidar cheaper and more available for other things.

https://youtu.be/NOk_M1Ib5F0?t=192

Why was there no mention of Comma AI and OpenPilot? It lets you add advanced lane keeping with lane changes and a better adaptive cruise control to several existing cars, with the best driver monitoring and really good safety measures. They are working on navigation and stop signs/traffic lights. (The latter basically works, it just needs more tuning.)

I don’t think it’s accurate to say there have been Autopilot crashes where there was no one in the driver’s seat, when the NTSB refuted that rumor and it was widely reported months ago.

https://www.theverge.com/2021/10/21/22738834/tesla-crash-texas-driver-seat-occupied-ntsb

My favorite Tesla-FSD demonstration:

https://www.facebook.com/groups/1939836749567520/posts/3012951912255993/

Some guy wedges a drink bottle into his steering wheel to trick the car into thinking that there are hands on the wheel.

Then he gets into the passenger seat.

Then he gets a quick lesson on the distinction between level 2 and level 3 autonomy.

What level is a commercial aircraft autopilot equivalent to? I’m under the impression it’s level 2 since there’s always supposed to be a pilot able to immediately take over if there’s a problem.

Perhaps Tesla calling their system an “autopilot” isn’t misleading at all, just that the general public doesn’t know what an autopilot really is.

Thing is, it varies by aircraft… Airbus and Eurocopter autopilots will actively follow collision avoidance system commands, while Boeing seems to be fine with ignoring them. (They’ve even been fine with an assistant actively crashing the plane if it receives erroneous data…)

All of them will disconnect if the system senses a problem it’s not designed to deal with, same if you apply physical force on the controls.

Also, with planes the physical presence is kind-of a no brainer, for example if the aircraft suddenly goes into an uncommanded pitch up maneuver, you might not be able to actually get back into the seat to do anything about it.

All of them are very simple, most just hold the plane on a given course at a given altitude, the better ones can follow a simple flight path. Nothing as complicated as a public road.

Use case is different, it can’t just come to a stop like a car :P

TuSimple completed the first ever Level 8 fully autonomous semi trip between Phoenix and Tucson on an open public road (I-10) on December 22nd. No humans on board. I’m surprised that did not appear anywhere in your article or comments.

SAE J3016 describes six levels of driving automation, numbered 0 to 5. It provides a shared set of definitions that many people and organizations in the industry have agreed to use, and it simplifies communication about autonomy. It’s not perfect (there are distinctions that it doesn’t capture), but it is a perfectly reasonable set of definitions for use in an article that compares autonomy across different manufacturers.

There is no level 8 in J3016.

Who defined this 8-level scheme you speak of? Who uses it, other than TuSimple?

Fred is exhibiting comprehension difficulties.

It’s a Class 8 truck (>33,000 lb).

It demonstrated Level 4 autonomy.

Note to Editors: This article confuses the current Full Self-Driving package with the Full Self-Driving beta available on Teslas:

“The system can now handle driving on highways and on surface streets. It also has the ability to work with the navigation system, guiding the car from highway on-ramp to off-ramp and handling interchanges and taking necessary exits along the way.”

Not mentioned in the article is that the beta FSD allows complete self-driving from destination to destination on city streets and freeways, without intervention by the driver. Because it is a beta software release, the driver must maintain complete attention while the car drives itself.

This article is also incorrect in stating that drivers have misinterpreted the name of Tesla’s autonomous driving features. All owners and drivers of Teslas know full well that they should not abuse the system. These drivers intentionally abuse the system not because of what it is called, but because they lack the ability to follow clearly published and acknowledged rules.

>All owners and drivers of Tesla know full well that they should not abuse the system.

Yeah, right.

Tesla calls it “Full Self Driving” but it isn’t. Only the Beta version is, as you said, fully self driving, except it isn’t – surely everyone understands this.