Readers are likely familiar with photogrammetry, a method of creating 3D geometry from a series of 2D photos taken of an object or scene. To pull it off you need a lot of pictures, hundreds or even thousands, all taken from slightly different perspectives. Unfortunately the technique suffers where there are significant occlusions caused by overlapping elements, and shiny or reflective surfaces that appear to be different colors in each photo can also cause problems.

But new research from NVIDIA marries photogrammetry with artificial intelligence to create what the developers are calling an Instant Neural Radiance Field (NeRF). Not only does their method require far fewer images, as few as a few dozen according to NVIDIA, but the AI is also better able to cope with the pain points of traditional photogrammetry, filling in the gaps of the occluded areas and leveraging reflections to create more realistic 3D scenes that reconstruct how shiny materials looked in their original environment.

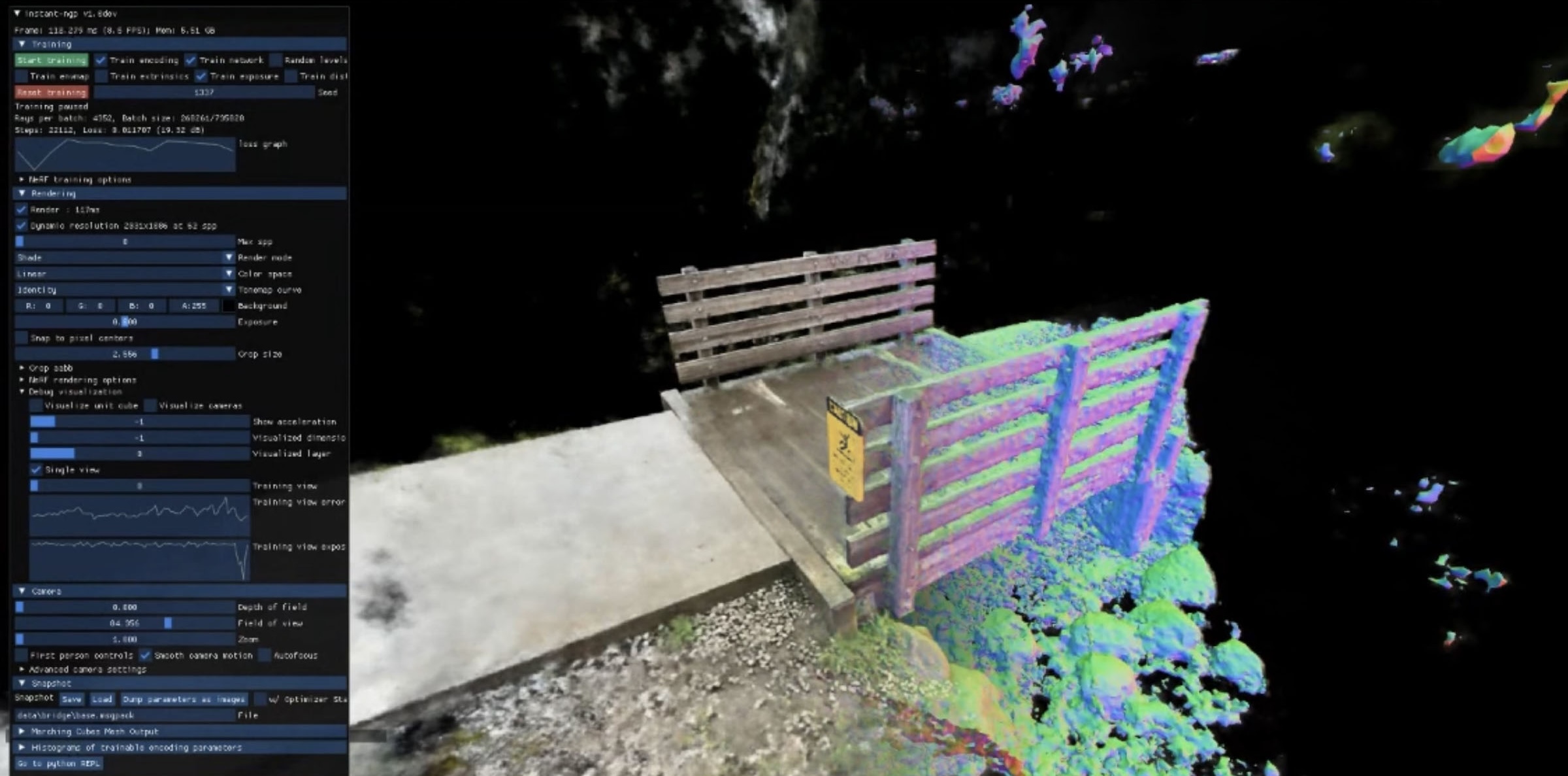

If you’ve got a CUDA-compatible NVIDIA graphics card in your machine, you can give the technique a shot right now. The tutorial video after the break will walk you through setup and some of the basics, showing how the 3D reconstruction is progressively refined over just a couple of minutes and then can be explored like a scene in a game engine. The Instant-NeRF tools include camera-path keyframing for exporting animations with higher quality results than the real-time previews. The technique seems better suited for outputting views and animations than models for 3D printing, though both are possible.

Don’t have the latest and greatest NVIDIA silicon? Don’t worry, you can still create some impressive 3D scans using “old school” photogrammetry — all you really need is a camera and a motorized turntable.

So now we get Artificial Optical Illusions (aka AOI)?

Don’t miss the videos on the subject by 2 Minute Papers, e.g.

https://www.youtube.com/watch?v=yptwRRpPEBM

So the summary of the video would be

totally rocks: Plenoxels: Radiance Fields without Neural Networks – takes minutes

totally sucks: NeRF (Neural Radiance Field) – takes days

Is that Ren narrating that video?

Maybe I should consider that a compliment!

B^)

Add Nerf to your NeRF: embed a small ‘360° camera’ (the ones with an opposing pair of >180° fisheye lenses) into one of the jumbo Nerf projectiles, then fire it through your scene to collect the input images for your subsequent photogrammetry.

With streamed video to collect the images in-flight and fast enough processing to complete the processing in near real time, you can fire a squishy projectile into a room and receive a 3D model of the interior of that room.

Tool coming to the next Tom Clancy game.

NeRF is picky as hell about the input series. I’ve tried. Even put in some photos from Google streetview, thinking surrounding photos of a given landmark would be enough – the resulting meshes were a broken jumble, to be charitable. Yes, I played with the options and such.

But what really got me was that even with an even-color background and the subject having 8+ photos from different angles with overlapping coverage, the resulting mesh output was a complete mess.

It would take a while in your 3D editor of choice to clean it up, to the point where you might as well model it by hand.

That’s most likely because the photos were taken with different cameras and/or different settings. In the case of streetview photography, the images are even warped and stitched together from multiple pictures. These implementations rely on some assumptions that are easy to fulfill in the lab and aren’t relevant to the actual technique being studied, but will completely mess up the result if ignored.

More specifically, almost all computer vision research assumes a simple pinhole camera model and assumes all images are identical in terms of optics, i.e. one pixel always maps to the same ray relative to the camera. In practice, this means you must take all images with the same camera with the exact same settings, so no zoom, no filters or auto-stabilization, don’t even change the focus! Don’t use lenses with strong distortion (so no fisheye stuff) and ideally also keep things like exposure time etc. fixed. Use a tripod or short exposure time to eliminate motion blur, close the aperture to keep everything sharp.

(This advice holds for most software that relies heavily on camera images, not just NeRFs, but also SLAM or SfM etc.)
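To make the "one pixel always maps to the same ray" point concrete, here is a minimal sketch of the ideal pinhole model. The function name `pixel_to_ray` and the intrinsics values (`fx`, `fy`, `cx`, `cy`) are made up for illustration and are not taken from any particular NeRF tool.

```python
import math

def pixel_to_ray(u, v, fx, fy, cx, cy):
    """Unit direction (camera coordinates) of the ray through pixel (u, v).

    Ideal pinhole model: no lens distortion, focal lengths fx/fy in pixels,
    principal point (cx, cy) in pixels.
    """
    x = (u - cx) / fx
    y = (v - cy) / fy
    z = 1.0
    norm = math.sqrt(x * x + y * y + z * z)
    return (x / norm, y / norm, z / norm)

# Hypothetical intrinsics for a 1920x1080 image (assumed values).
fx = fy = 1500.0
cx, cy = 960.0, 540.0

# The pixel at the principal point looks straight down the optical axis.
center_ray = pixel_to_ray(960.0, 540.0, fx, fy, cx, cy)
print(center_ray)  # (0.0, 0.0, 1.0)

# Zooming changes the focal length, so the same pixel maps to a different ray.
corner_wide = pixel_to_ray(0.0, 0.0, fx, fy, cx, cy)
corner_zoom = pixel_to_ray(0.0, 0.0, 2 * fx, 2 * fy, cx, cy)
print(corner_wide != corner_zoom)  # True
```

If the zoom, focus, or lens changes between shots, the intrinsics change with it, and software that assumes a single shared set of intrinsics ends up with inconsistent rays for the same pixel.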

Also, don’t just take 8 photos; use more like 30 or 40, most of them overlapping a single point. A uniform background can actually be detrimental: the more texture you have in your scene, the better the tools can estimate your camera poses.

That said, I’ve tried it and while it usually works well, the results still have a lot of noise and holes in some cases. I especially had trouble with reflections.
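For anyone planning a capture along those lines, here is a rough sketch of what 30 to 40 shots aimed at a single point could look like geometrically. It assumes a simple ring of viewpoints around the subject with the z axis pointing up; the function name `ring_positions`, the radius, and the height are arbitrary illustration values, and a real capture would normally add shots from other heights too.

```python
import math

def ring_positions(count, radius, height, target=(0.0, 0.0, 0.0)):
    """Evenly spaced camera positions on a circle around `target`.

    Returns a list of (position, view_direction) pairs, where view_direction
    is a unit vector pointing from the camera towards the target.
    Assumes z is up and height is measured relative to the target.
    """
    shots = []
    for i in range(count):
        angle = 2.0 * math.pi * i / count
        pos = (target[0] + radius * math.cos(angle),
               target[1] + radius * math.sin(angle),
               target[2] + height)
        to_target = tuple(t - p for t, p in zip(target, pos))
        length = math.sqrt(sum(c * c for c in to_target))
        shots.append((pos, tuple(c / length for c in to_target)))
    return shots

# 36 shots on a 2 m ring, camera 0.5 m above the point of interest (assumed values).
shots = ring_positions(count=36, radius=2.0, height=0.5)
step_degrees = 360.0 / len(shots)
print(len(shots), step_degrees)  # 36 10.0
```

With 36 shots, neighbouring views are only 10 degrees apart, so adjacent photos share most of their content, which is the kind of overlap pose estimation relies on.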