NVIDIA has recently released their lineup of 40-series graphics cards, with a novel generation of power connectors called 12VHPWR. See, the previous-generation 8-pin connectors were no longer enough to satiate the GPU’s hunger. Once cards started getting into the hands of users, surprisingly, we began seeing pictures of melted 12VHPWR plugs and sockets online — specifically, involving ATX 8-pin GPU power to 12VHPWR adapters that NVIDIA provided with their cards.

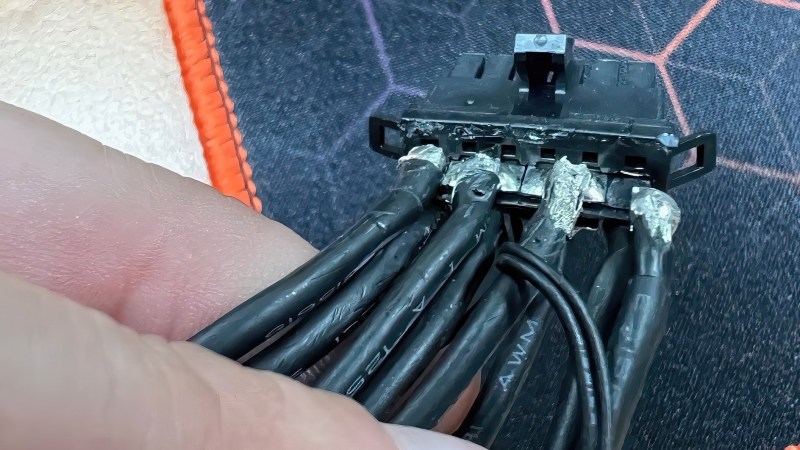

Now, [Igor Wallossek] of igor’sLAB proposes a theory about what’s going on, with convincing teardown pictures to back it up. After an unscheduled release of plastic-scented magic smoke, one of the NVIDIA-provided connectors was destructively disassembled. It turned out that its contacts weren’t crimped as we’re used to; instead, they had flat metal pads for the wires to be soldered onto. For power-carrying connectors, there are good reasons this isn’t the norm. You can make it work, but the odds are not in this particular connector’s favor.

The metal pads in question seem far too thin and structurally unsound: as one can readily spot, their cross-section is dwarfed by that of the cables soldered to them. This would create a segment of increased resistance and heat loss, exacerbated by any flexing of the thick and unwieldy cabling. Because the metal is so thin, the stress points seem quite flimsy; one of the metal pads straight up broke off during disassembly of the connector.
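The "increased resistance and heat loss" follows from R = ρL/A: for the same material and length, resistance, and therefore I²R heating at a given current, scales inversely with cross-sectional area. Below is a minimal sketch, assuming copper for both conductors, a 16 AWG wire, and a made-up pad size, since the real pad dimensions aren't given. It ignores contact resistance and solder-joint quality entirely, which in a real failure likely matter even more.

```python
# Sketch: a thinner cross-section raises resistance and heating.
# R = rho * L / A and P = I**2 * R. The geometry below is HYPOTHETICAL.

RHO_COPPER = 1.68e-8  # ohm*m at ~20 degC; real terminal alloys usually conduct worse


def resistance(length_m: float, area_m2: float, rho: float = RHO_COPPER) -> float:
    """Resistance of a uniform conductor segment."""
    return rho * length_m / area_m2


def heat_w(current_a: float, r_ohm: float) -> float:
    """Power turned into heat by a current flowing through a resistance."""
    return current_a ** 2 * r_ohm


LENGTH_M = 5e-3              # compare equal 5 mm segments (assumed)
WIRE_AREA_M2 = 1.31e-6       # roughly a 16 AWG wire, ~1.31 mm^2
PAD_AREA_M2 = 0.3e-3 * 2e-3  # hypothetical 0.3 mm x 2 mm stamped pad
CURRENT_A = 600 / 12 / 6     # ~8.3 A per +12 V pin at the full 600 W

r_wire = resistance(LENGTH_M, WIRE_AREA_M2)
r_pad = resistance(LENGTH_M, PAD_AREA_M2)
ratio = heat_w(CURRENT_A, r_pad) / heat_w(CURRENT_A, r_wire)
print(f"pad dissipates {ratio:.1f}x the heat of the same length of wire")
```

With equal material and length the heat ratio is simply the area ratio, so every bit of copper the pad lacks relative to the wire shows up directly as extra heat at that spot.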

If this theory is true, the situation is a blunder to blame on NVIDIA. On the upside, the 12VHPWR standard itself seems to be viable, as there are examples of PSUs with native 12VHPWR connections that don’t exhibit this problem. It seems gamers with top-of-the-line GPUs can now empathize with the problems that we hackers have been seeing in very cheap 3D printers.

exasperated, eh? !

ouch, in my draft too! I blame finger autocorrect! fixed, thank you

exacerbated was what you were looking for; exaggerated doesn’t really fit either

ah indeed, that’s way better! thank you!

The engineer who thought this was a good idea needs to return his degree and go back to mowing lawns for a living. ANYONE with a rudimentary knowledge of Ohm’s Law can see this was a failure in the making.

There are a lot of rumours that PCI-SIG and others raised a red flag and Nvidia happily ignored it. If this is the case, it’s just a matter of time before they get sued….

Sometimes it’s not the engineer’s choice, but rather purchasing trying to get lower prices from vendors. Very common if you’re an OEM for big auto….

Dieselgate: “It’s the engineers fault”.

Bendgate: “It’s the engineers fault”.

Sucks to be an engineer in the public’s mind. Kind of like science.

Dieselgate is the engineers’ fault when it comes to not getting the higher-ups’ orders in writing ;-P

I think in all my career, there was only one occasion when I wasn’t happy doing what I was told, which was understating a cost estimate. I played stupid and told the (somewhat bullish) manager “I’m not sure what you’re asking me to do exactly, can you just put it in writing in an email for me?”. He ended up (grudgingly) using my estimate. I still see this manager around the office from time to time and he pretends he doesn’t know me :D

Good on you!

This is the right thing to do. I work in Germany and I will never understand why this diesel thing happened. If a company like VW would fire you because you refuse to break the law you would have a ball in court. German labour laws are very employee friendly.

Oh man, I had an issue I had already solved once with a set of power switches at an industrial facility. It happened again because parts of the original design were cut/pasted into a new one by a design firm. I told management to swap a regular power switch for a make-before-break power switch, and problem solved. They came back with, “That’s not the issue, we are sure people are just inadvertently bumping into it. You will redesign it using this cover we found.” So 3 years later it’s still having issues; it comes up about every 3-6 months, and I do my best to stay out of it, because every time I bring up the $40 fix (the conversations on what to do have to have broken $20k by now) it’s like it’s the first time anyone’s heard of it. EVERY TIME. They look at me weird when I laugh at the issue now, but that’s ok. They won’t remember it in a week.

When things fail, it’s the engineers’ fault; when things work, it’s the CEO’s vision and diligence.

So who screwed up on the iPhone 4 antenna?

The user who was holding it wrong.

> NVIDIA has recently released their lineup of 40-series graphics cards, with a novel generation of power connectors called 12VHPWR.

Some may read it as saying the 12VHPWR connectors are NVIDIA’s invention. I do realize that this sentence does not imply that, but IMO the choice of working could be better.

not sure if ironic…..”working” could be better?

B^)

I could blame it on autocorrect, but… but… touché ;-)

I’ve always thought that plugging more and more cables from the system power supply into the card was a kludge and it has now become unmanageable. Why not a simple, robust connector on the rear bracket fed by an external power supply?

Are you serious? A 600W+ power brick? I sure as hell don’t want that. They should put a simple, robust connector at the end of the card, where it would make cable management easier. I really don’t understand what Nvidia is trying to pull: need more power? Just make the plug smaller and use adapters? Yeah, that seems very intuitive.

Yes, I’m serious. Something like a standard PC power supply in a small enclosure. I don’t see the problem.

Then you create a “new” problem: both power supplies need to power up/down at the same time!!

Not at all – the GPU can draw power from the PCIe slot to run, and just activate the PSU when it has tasks requiring real power. Not that there aren’t issues with such an idea; it’s just that this shouldn’t be one of them, as the computer can supply quite a bit of power to the card via the slot. If that were the design criterion, the GPU could easily run off the slot and selectively activate the supplementary power.
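The idea above can be sketched as a hysteresis controller: the card runs from the slot’s 75 W and only asks for the external supply when demand approaches that budget. Everything here (the class name, the thresholds, and the assumption that the card can estimate its own demand and command a PSU) is hypothetical; it is an illustration of the concept, not any real card’s firmware.

```python
from dataclasses import dataclass

SLOT_BUDGET_W = 75.0  # power a PCIe x16 graphics slot is specified to deliver


@dataclass
class SupplementaryPsuGate:
    """HYPOTHETICAL controller for an external GPU supply.

    The card runs from slot power and requests the external PSU only when
    demand nears the slot budget; two thresholds (hysteresis) stop it from
    toggling rapidly around a single set point.
    """
    on_threshold_w: float = 0.9 * SLOT_BUDGET_W   # 67.5 W
    off_threshold_w: float = 0.6 * SLOT_BUDGET_W  # 45 W
    psu_on: bool = False

    def update(self, demand_w: float) -> bool:
        """Feed the latest power-demand estimate; returns whether the PSU should be on."""
        if not self.psu_on and demand_w >= self.on_threshold_w:
            self.psu_on = True   # heavy load coming: wake the external supply
        elif self.psu_on and demand_w <= self.off_threshold_w:
            self.psu_on = False  # light load again: slot power is enough
        return self.psu_on


gate = SupplementaryPsuGate()
print([gate.update(w) for w in (20, 70, 300, 60, 40, 30)])
```

In a real design the card would also have to wait for the external rail to come up before drawing heavy current, which is the sequencing problem the previous comment points at.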

Put an ATX style connector on the card.

The way they are going they should just put 1/2″ copper pipe fittings on the back of every video card and PSU then use plumbing as wiring. What amount of energy consumption just to play a video game becomes excessive?

There’s a reason we’re not all using a Riva and playing Quake level graphics. People are even bragging on forums about their RTX. The customers got what they wanted.

And the scalpers are already happily listing them on FB Marketplace for $2100+

With these bad connectors, combined with the mining industry bottoming out, I guess you could say these scalpers will now get burnt twice!

Not all GPUs are used for gaming. It also has to be said that the testing I’ve seen shows the 4090 can perform really, really well at rather lower power consumption if you tell it to; it seems it can outperform all of the other newish cards while drawing quite a bit less power than they do in many cases.

So, as stupid as the potential numbers sound, if you can afford it, a 4090 might actually be the most economical GPU to run by quite some margin once you bring the power consumption down! How you use it comes down to what you actually need, of course; some folks probably really do need that 4090 (or 4) cranked up as high as they will go for hours…

(and I wasn’t thinking crypto bs)

> Why not a simple, robust connector on the rear bracket fed by an external power supply?

Like a CHAdeMO or CCS Combo. Then we can really crank the clock-rate up.

BRB, forwarding this idea to nVidia CEO, hoping for big raise. 😆

I’m voting for Anderson Powerpoles, or whatever the TE/Molex compatible competitor is. Used very frequently for ham radio and FIRST robotics.

Used for charging electric forklifts! Those connectors are great, I use them all the time for low-voltage power applications. The only issue I have with those is that they don’t have a separate locking mechanism, and the smallest types can easily pull apart, except when you want them to.

I guess it is a cheap card with cheap components. Some low budget stuff.

Oh. Wait. What? 2800 €? That is… I mean, that could buy 140 half decent bottles of Whisky that last around 15 years, which is way beyond my expiration date.

Fanbois will pay that. Crazy.

I mean, in 2017 I bought a 2002 Miata for 1200 € (about 5500 Polish złoty). There was some welding to be done to strengthen it since it was post-accident, but it’s no big problem because I’m a mechanic. This little Miata serves me to this day. Nice upgrade from the old Daewoo Lanos my father gave me in 2010.

Time maybe to make the jump to a standard 48V power rail.

Maybe bus bars or at least more terminal-like.

https://www.samtec.com/connectors/micro-pitch-board-to-board/rugged/ultra-micro-power

Or jump straight to 72V! The higher the voltage, the smaller the wires, right?

/facepalm

Except 48V is already a well-established standard in computer power distribution, just not seen much in consumer & gamer-grade stuff, except in PoE applications.

72V would also qualify as “high voltage” in most jurisdictions, and fall under tougher regulations.

yep

There is a 48VHPWR connector, with 2 power pins and the same 4 signal pins, that has been mentioned in the PCIe 5 CEM document but not detailed, though you can find supposed drawings of it online.
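The case for a higher rail voltage comes down to I = P/V and P_loss = I²R: delivering the same power at 4x the voltage needs 1/4 the current and loses 1/16 as much in the cable. A minimal sketch; the 5 mΩ round-trip cable resistance is an assumed figure for illustration only.

```python
# Sketch: same power, higher rail voltage -> less current -> far less I^2*R loss.
# The cable resistance is a HYPOTHETICAL round-trip figure for illustration.

def current_a(power_w: float, volts: float) -> float:
    """Current needed to deliver a given power at a given voltage."""
    return power_w / volts


def cable_loss_w(power_w: float, volts: float, r_ohm: float) -> float:
    """Heat lost in the cable, P = I**2 * R."""
    return current_a(power_w, volts) ** 2 * r_ohm


CARD_POWER_W = 600.0
CABLE_R_OHM = 0.005  # assumed 5 milliohm round trip

for volts in (12.0, 48.0):
    print(f"{volts:>4.0f} V: {current_a(CARD_POWER_W, volts):5.1f} A, "
          f"{cable_loss_w(CARD_POWER_W, volts, CABLE_R_OHM):6.2f} W lost in the cable")
```

That 16x drop in resistive loss is why higher voltages allow thinner wires and fewer pins, up to the point where regulations for higher voltages kick in.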

Personally, I don’t think NVIDIA is entirely to blame here. Sure, they should have paid more attention to the cables they got from their supplier before putting them in the box. However, I tend to think the bulk of the fail is with Nvidia’s supplier for selling them a poorly designed product.

I tend to agree with JayzTwoCents on this: they should have just added another 8-pin to make the connection bigger to handle the higher load, not smaller. That’s more on PCI-SIG, though. I hope NVIDIA issues replacement cables for the issue that are at least properly wired, like the CableMod ones Jay showed. Do we need a better solution than just adding a 3rd 8-pin? Certainly. No doubt Molex and Foxconn probably already have a suitable connector on the market. Either way, though, it needs more conductor, not less.
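The "more conductor, not less" argument is easy to check with per-pin arithmetic. A sketch using the nominal ratings (150 W over three +12 V pins for a PCIe 8-pin, 600 W over six +12 V pins for 12VHPWR), assuming current splits evenly across pins, which is exactly the assumption a damaged or poorly seated contact breaks:

```python
import math

# (rated power in W, number of +12 V supply pins), nominal figures
CONNECTORS = {
    "PCIe 8-pin": (150, 3),
    "12VHPWR": (600, 6),
}


def per_pin_current(power_w: float, supply_pins: int, volts: float = 12.0) -> float:
    """Average current through each +12 V pin, assuming perfect current sharing."""
    return power_w / volts / supply_pins


for name, (power, pins) in CONNECTORS.items():
    print(f"{name}: {per_pin_current(power, pins):.2f} A per +12 V pin")

# How many 8-pin connectors carry 600 W within their own rating?
eight_pins_needed = math.ceil(600 / CONNECTORS["PCIe 8-pin"][0])
print(f"8-pin connectors for 600 W: {eight_pins_needed}")
```

Each 12VHPWR pin carries about twice the current of an 8-pin pin, through a smaller terminal, which is the heart of the complaint.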

Exactly. Until the new PSU standards are 100% realized in PSUs you can actually buy, this stupid connector shouldn’t exist on these cards. The 3090 has them as well. Everyone’s saying “When the ATX 3.0 PSUs are available this won’t be a problem”. So that right there’s the problem. They put the fully-loaded cart before the horse and then gave everyone a matchbox car to pull the cart.

There are many examples of substandard connectors and cabling marketed as “super-duper elite performance” products. For example, Monster Cables… tiny little 22ga wires surrounded by a quarter inch of plastic and maybe a thin ground mesh. They are functionally identical to the cheap stuff, but are puffed up to look “heavy-duty” to the ignorant, gullible consumer. Same goes for the “gold” connectors and hardware floating around.

There was another that I came across in my Cable Guy days that was an Amphenol RG-59 cable (with labeling!) repackaged and literally sheathed in foam with a thin plastic plenum in an interesting color to make it look like RG-7. And it was supposedly a “performance” brand (can’t remember that one).

I guess the industrial engineers are susceptible to the same flaws in marketing… despite having degrees certifying that they shouldn’t be.

RG-7? Gotta love standards. They’re so flexible. Yeesh.

Don’t get me started on the latest Chinese “innovation” — CCA (they abbreviate it so you don’t know it means “Copper Coated Aluminum”).

I’m old enough to remember aluminum house wiring. Someone else’s brilliant idea.

.. I thought CCA was “Copper Clad Aluminum”, but that’s me being pedantic.
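Whatever the acronym stands for, the practical catch with copper-clad aluminum is its DC resistance. At DC the copper skin and the aluminum core act as parallel conductors, so the effective conductivity is the area-weighted mix of the two. A minimal sketch; the copper fraction is an assumed parameter, since it varies by product.

```python
# Sketch: DC resistance of copper-clad aluminum (CCA) vs solid copper, same gauge.
# Cladding and core are treated as parallel conductors sharing the current.

SIGMA_CU = 5.96e7  # S/m, copper
SIGMA_AL = 3.77e7  # S/m, aluminum


def cca_resistance_ratio(copper_fraction: float) -> float:
    """Resistance of CCA relative to pure copper of the same cross-section.

    copper_fraction is the share of the cross-section that is copper cladding;
    it is an ASSUMED parameter, real products vary.
    """
    sigma_eff = copper_fraction * SIGMA_CU + (1 - copper_fraction) * SIGMA_AL
    return SIGMA_CU / sigma_eff


for frac in (0.10, 0.15):
    print(f"{frac:.0%} copper: {cca_resistance_ratio(frac):.2f}x the resistance of copper")
```

With only a thin copper skin the wire behaves mostly like aluminum, at roughly 1.5x the resistance of copper, so a "same gauge" CCA cable runs hotter at the same current.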

And yeah- I’ve seen “18 gauge” wire that has the appropriate outside diameter, but instead has some tiny 26 gauge set of strands surrounded by plastic. Makes it a bit annoying when trying to build cable harnesses…
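The AWG scale makes that shortfall concrete: every 6 gauges roughly halves the diameter, so an eight-gauge gap is a large loss of copper. A sketch using the standard AWG diameter formula, assuming the strands together add up to the equivalent of a single 26 AWG conductor:

```python
import math


def awg_diameter_mm(gauge: int) -> float:
    """Bare-conductor diameter from the standard AWG formula."""
    return 0.127 * 92 ** ((36 - gauge) / 39)


def awg_area_mm2(gauge: int) -> float:
    """Cross-sectional area of a solid conductor of the given gauge."""
    return math.pi / 4 * awg_diameter_mm(gauge) ** 2


advertised = awg_area_mm2(18)
actual = awg_area_mm2(26)
print(f"18 AWG: {advertised:.3f} mm^2, 26 AWG: {actual:.3f} mm^2, "
      f"ratio {advertised / actual:.1f}x")
```

The "18 gauge" wire ends up with roughly a sixth of the copper it advertises, and about six times the resistance per meter.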

So, basically, the (probably cheap) adapters nVidia included with their GPUs for people without compliant PSUs are of low quality? Who would have imagined….

From Astron.

https://www.notebookcheck.net/Nvidia-instructs-AIB-partners-to-send-RTX-4090-cards-affected-by-power-connector-issues-directly-to-headquarters.664695.0.html

The thing I’m wondering is, how did they (pci-sig, intel, nvidia?) come up with the spec of 9.2A per pin (https://cdrdv2.intel.com/v1/dl/getContent/336521?explicitVersion=true&wapkw=atx%20specification section 5.2.2.4.3)?

I remember hearing that 12VHPWR uses Molex Microfit connectors, but on Molex’s site they specify the current rating of each pin at 5.5A for a 12 pin plug (https://www.molex.com/pdm_docs/ps/PS-43045-001.pdf page 8), and they don’t have a crimp terminal for 16AWG wires either (thickest wire gauge is 18AWG). Even if it is from some other manufacturer, how do they squeeze nearly twice the current capacity out of the same sized terminals?

Ah, someone on Reddit pointed out the connector is Amphenol Minitek Pwr3.0 High Current Connector: https://www.reddit.com/r/nvidia/comments/yc9mzc/comment/itm5qng/ . It sounds like they use a different copper alloy that can carry higher current (and maybe slightly different electromechanical design in the terminals) to get to that current rating.

But Amphenol’s product family datasheet and the datasheet for the terminals are not very self-consistent:

Product family datasheet at https://cdn.amphenol-cs.com/media/wysiwyg/files/documentation/datasheet/boardwiretoboard/bwb_minitek_pwr_cem_5_pcie.pdf says the rating is 9.5A per contact for 12 pins, and

the gs-12-1706 general specification linked directly on page 2 https://cdn.amphenol-icc.com/media/wysiwyg/files/documentation/gs-12-1706.pdf says the same.

However, if you click on the 10132447 receptacle terminal part number link, it brings up a few parts, among which https://www.amphenol-cs.com/minitek-pwr-hybrid-3-0-hcc-receptacle-10132447121plf.html shows up as preferred part. The product specification it links to at https://cdn.amphenol-cs.com/media/wysiwyg/files/documentation/gs-12-1291.pdf says the current rating is 7.5A per contact for 12 pins…

In any case, the PCIe specs are pushing those connectors to the limit or over it. And anyone using a compatible sort of terminal, e.g. the Molex ones or other cheaper terminals, will surely run over the limit a lot. It sounds disastrous, especially considering there are already many dodgy adapters on Amazon… Not sure why they decided to push the specs this much this time around.
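Putting the quoted ratings side by side with the nominal load shows how thin the margin is. A sketch, assuming the full 600 W is shared perfectly evenly over the six +12 V pins; the ratings are the ones cited in the comments above.

```python
# Nominal load per +12 V pin at the connector's full 600 W (six supply pins),
# assuming perfectly even current sharing.
LOAD_PER_PIN_A = 600 / 12 / 6

# Per-contact ratings quoted in the comments above.
RATINGS_A = {
    "Intel ATX spec, section 5.2.2.4.3": 9.2,
    "Amphenol family datasheet / gs-12-1706": 9.5,
    "Amphenol terminal spec gs-12-1291": 7.5,
    "Molex Micro-Fit, 12 pins": 5.5,
}

for source, rating in RATINGS_A.items():
    margin = rating / LOAD_PER_PIN_A
    verdict = "OK" if margin >= 1 else "OVER LIMIT"
    print(f"{source}: {rating} A rated, margin {margin:.2f}x -> {verdict}")
```

Even the best-case ratings leave only about 10 to 14 percent of headroom, and any uneven sharing between pins, say from one poorly seated contact, eats that immediately.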

I’m looking at the pictures of those (relatively) large wires soldered to those poor little thin & flimsy stamped pins, and I’m both in disbelief that this was the “solution” from a company as experienced and large as nVidia, and thinking “yeah, that’s totally something I’d have done when I was 14.” LoL

This isn’t an engineering issue, this is a ‘let’s go with the cheapest option adapter to throw in the box’ issue and they just asked Foxconn or some such to mass produce this crap for them at a budget.

And it makes sense, they are already basically giving away these cards at a mere 2 freaking grand after all..

nice thanks