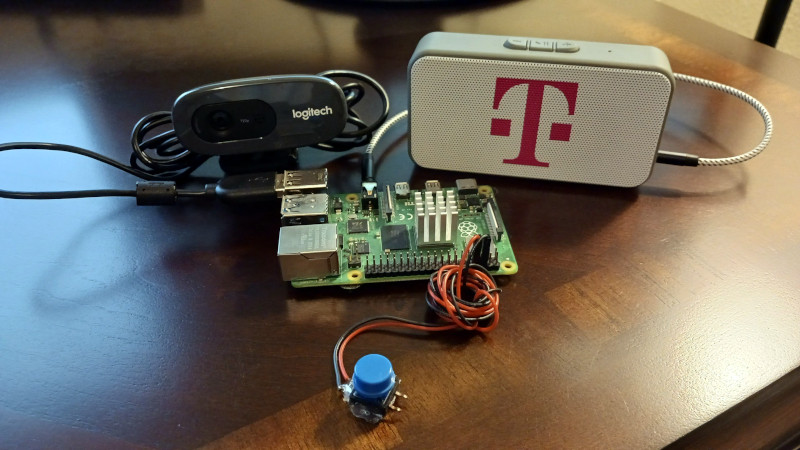

With AI being all the rage at the moment, it’s been somewhat annoying that using a large language model (LLM) without significant amounts of computing power meant surrendering to an online service run by a large company. But as happens with every technological innovation, the state of the art has moved on, now to such an extent that a computer as small as a Raspberry Pi can join the fun. [Nick Bild] has one running on a Pi 4, and he’s gone further than just a chatbot by making it into a voice assistant.

The brains of the operation is a TinyLlama LLM, packaged as a llamafile, which is to say an executable that provides about as easy a one-step access to a local LLM as it’s currently possible to get. The Whisper voice recognition system provides a text transcript of the input prompt, while the eSpeak speech synthesizer creates a voice output for the result. There’s a brief demo video we’ve placed below the break, which shows it working, albeit slowly.
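For a feel of how the three stages chain together, here is a minimal Python sketch of one listen, think, speak turn. It is not [Nick Bild]'s code: the function names are made up for illustration, the `transcribe` step is left as a callable you would wire up to Whisper yourself, and the URL assumes the llamafile has been started in server mode with an OpenAI-style chat endpoint on port 8080, which may not match your setup.

```python
import json
import subprocess
import urllib.request
from typing import Callable

# Assumption: a llamafile running in server mode answers OpenAI-style chat
# requests at this address. Change it to match your own installation.
LLM_URL = "http://localhost:8080/v1/chat/completions"


def ask_llm(prompt: str, url: str = LLM_URL) -> str:
    """Send the transcribed prompt to the local LLM and return its reply."""
    payload = {
        "model": "local",  # placeholder name, assumed to be ignored by a single-model server
        "messages": [{"role": "user", "content": prompt}],
    }
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"].strip()


def speak(text: str) -> None:
    """Read the reply aloud with the eSpeak command-line tool."""
    subprocess.run(["espeak", text], check=True)


def run_turn(
    audio_path: str,
    transcribe: Callable[[str], str],  # hypothetical hook: audio file path -> text
    generate: Callable[[str], str] = ask_llm,
    say: Callable[[str], None] = speak,
) -> str:
    """One assistant turn: speech to text, text to reply, reply to speech."""
    prompt = transcribe(audio_path).strip()
    if not prompt:
        # Nothing was recognised, so skip the slow LLM call entirely.
        return ""
    reply = generate(prompt)
    say(reply)
    return reply
```

Keeping each stage as a plain function means any one of them can be swapped out, for instance replacing `say` with `print` while debugging on a machine with no speaker attached.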

Perhaps the most important part of this project is that it’s easy to install and he’s provided full instructions in a GitHub repository. We know that the quality and speed of these models on commodity single board computers will only increase with time, so we’d rate this as an important step towards really good and cheap local LLMs. It may however be a while before it can help you make breakfast.

Needs some blinken lights!!

I like the non-cloud based functions.. internet seems to be a Warzone and services change hands too frequently

Great voice quality and, as you suggest, with time, advances in hosting hardware and AI models will surely improve response time. My “home automation” activities revolve less around pure automation and more around voice commands with the occasional ad-lib query, thus my current reliance on Alexa.

From benchmarks I can find, the rpi5 is about 4x faster than the rpi4 for llm work, which means a 9-10 second delay would be about 2-3, so it definitely seems like it’s worth the upgrade. I need to get a decent mic for my pi5 so I can play with these.

What is the RPI 5 comparable to in x86 land?

Are those benchmarks for the kinds of work done by LLMs though? They’re quite specific and not quite the same as for general computing.