The holidays are rapidly approaching, and you probably already have a topic or two to argue with your family about. But what about with your hacker friends? We came upon an old favorite the other day: whether it “counts” as retrocomputing if you’re running a simulated version of the system or if it “needs” to run on old iron.

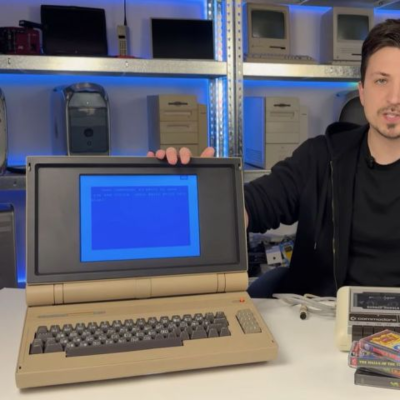

This lovely C64esque laptop sparked the controversy. It’s an absolute looker, with a custom keyboard and a retro-reimagining-period-correct flaptop design, but the beauty is only skin deep: the guts are a Raspberry Pi 5 running VICE. An emulator! Horrors!

We’ll admit to being entirely torn. There’s something about the old computers that’s very nice to lay hands on, and we just don’t get the same feels from an emulator running on our desktop. But a physical reproduction, like many of the modern C64 recreations or [Oscar Vermeulen]’s PiDP-8/I, really floats our boat in a way that an in-the-browser emulation experience simply doesn’t.

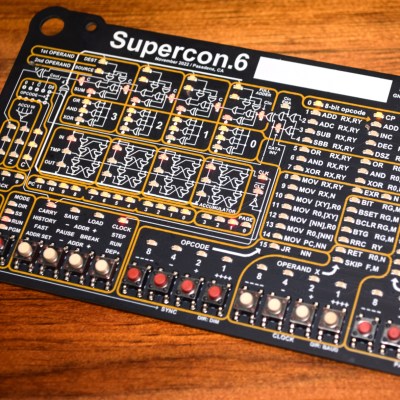

Another example was the Voja 4, the Supercon 2022 badge based on a CPU that never existed. It’s not literally retro, because [Voja Antonic] designed it during the COVID quarantines, so there’s no “old iron” at all. Worse, it’s emulated; the whole thing exists as a virtual machine inside the onboard PIC.

But we’d argue that this badge brought more people something very much like the authentic PDP-8 experience, or whatever. We saw people teaching themselves to do something functional in an imaginary 4-bit machine language over a weekend, and we know folks who’ve kept at it in the intervening years. Part of the appeal was that it reflected nearly everything about the machine state in myriad blinking lights. Or rather, it reflected the VM running on the PIC, because remember, it’s all just a trick.
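To make the “it’s all just a trick” point concrete, here is a minimal sketch of what a VM like that does: an interpreter loop over a made-up 4-bit instruction set, plus a helper that turns a register into a row of LEDs. The opcodes (`MOVI`, `ADD`, `JNC`) and the `run`/`leds` names are hypothetical; this is not the Voja 4’s real instruction set or firmware, only the general shape of a CPU implemented in software.

```python
# A toy 4-bit CPU interpreted in software. The instruction set is
# hypothetical and is NOT the Voja 4's; it only shows the general idea.

MASK = 0xF  # each register holds a single 4-bit nibble


def run(program, max_steps=100):
    """Run a list of (opcode, a, b) tuples; return (registers, carry)."""
    regs = [0] * 16
    carry = 0
    pc = 0
    for _ in range(max_steps):
        if pc >= len(program):
            break  # fell off the end of the program: halt
        op, a, b = program[pc]
        pc += 1
        if op == "MOVI":  # regs[a] <- immediate value b
            regs[a] = b & MASK
        elif op == "ADD":  # regs[a] <- regs[a] + regs[b], carry out of bit 3
            total = regs[a] + regs[b]
            carry = total >> 4
            regs[a] = total & MASK
        elif op == "JNC":  # jump to address a if the carry flag is clear
            if not carry:
                pc = a
        else:
            raise ValueError(f"unknown opcode {op!r}")
    return regs, carry


def leds(nibble):
    """Show a nibble as four 'LEDs', most significant bit first."""
    return "".join("*" if nibble & (1 << bit) else "." for bit in range(3, -1, -1))


# Add 3 to r0 until the 4-bit register overflows and sets carry.
program = [
    ("MOVI", 0, 0),
    ("MOVI", 1, 3),
    ("ADD", 0, 1),
    ("JNC", 2, 0),
]
regs, carry = run(program)
print(leds(regs[0]), carry)  # ..*. 1
```

In a design like this, the blinkenlights are just a view of the interpreter’s variables, so exposing nearly all of the machine state costs very little.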

So we’ll fittingly close this newsletter with a holiday message of peace to the two retrocomputing camps: Maybe you’re both right. Maybe the physical device and its human interfaces do matter – emulation sucks – but maybe it’s not entirely relevant what’s on the inside of the box if the outside is convincing enough. After all, if we hadn’t done [Kevin Noki] dirty by showing the insides of his C64 laptop, maybe nobody would ever have known.

Maybe someone will do a tube computer as a badge?

I don’t know about a full-tube computer, but a badge with a few sub-miniature tubes as a proof of concept should be doable. My biggest worry would be power. Maybe instead of a “tube badge,” create a “tube vest” that is just something very simple, like a comparator or accumulator, to make das blinkenlights do something pretty in a “programmed” way. The nice thing about a vest is you can put some beefy batteries in the pockets.

This 2016 paper (doi) had some ideas for not-necessarily-vacuum but through-the-air-electron-flow technology that overcomes some of the limitations of the silicon tech of the last 75 years. I haven’t seen anything come out of it though, so maybe it flopped.

It would certainly keep you nice and warm. Wouldn’t want to go out in the rain wearing it, though.

You could make an x-ray tube badge and walk around giving everyone radiation poisoning. Bonus points if you hide it inside a backpack and use it to irradiate commuters on a bus.

Sounds like a great way to spend the rest of your life in a secret/black site prison. As in “even Amnesty International does not know this place exists” prison. If you think the brutality in known prisons is bad…

What if you made a vacuum circuit inside a glass hip flask?

Something simple might be possible. After all, battery-powered tube radios were a real thing for a while.

A Nuvistor tube is cute and tiny, and its filament takes just one watt, and I’ll bet you can still make it do something useful and interesting on half that.

Or even more interesting: use a vacuum fluorescent display tube as a computing element. With the right sort of multiplexing you could get 8 or more separate “devices” in a display, and the blinkenlights would be actually meaningful.

If you can integrate the cathodes into the tube housing, you may be able to use a candle instead of battery power for heating.

There weren’t only the ordinary battery tubes, about the size of a lighter.

There were also miniature tubes about as small as a 24 V halogen lamp or a CR2032 coin cell.

They had wires coming out instead of relying on a socket.

I’ve been building a mashup of a system with a STDBus card cage (two 3-slot cages actually) in a 3d printed luggable case and custom boards to simulate Acorn System 1/2/3/4/5, EuroBeeb & EuroCube, as well as Z80 CP/M systems. Video, Audio, and most other I/O is handled by a pair of 20K LUT GoWin FPGAs, with the keyboard connected to the Teensy 4.1 that is also doing in-circuit emulation of the 6502, 6809, or Z80 CPUs.

The 16-bit address and 8-bit data buses are real 5 V logic between the STDBus cards, and most 8-bit STDBus cards from the 1981 Pro-Log catalog will work in the luggable.

Running the CPUs in software on the Teensy has allowed breakpoint debugging, dynamic loading of the MOS ROMs for the various systems, and dynamic remapping of memory & peripherals. When talking to peripherals, everything slows down to match the speed the peripheral can respond at, but when executing code from internal SRAM or external PSRAM (gotta have those sideways RAM/ROM slots!) the simulated CPU can zip along unrestricted.
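A rough model of that “slow down for peripherals, zip along in RAM” behavior: an address map where each region carries a cost per access, plus a way to move regions around at run time. This is a hypothetical Python sketch, not the commenter’s Teensy firmware; the `Bus` and `Region` names, the address windows, and the wait-state counts are all made up for illustration, and a real in-circuit emulator stalls on the physical bus rather than counting cycles.

```python
from dataclasses import dataclass


@dataclass
class Region:
    start: int        # first address in the window (inclusive)
    end: int          # last address in the window (inclusive)
    name: str
    wait_states: int  # extra cycles charged per access (made-up numbers)


class Bus:
    """Toy 16-bit bus: fast local memory, slow memory-mapped peripherals."""

    def __init__(self, regions):
        self.regions = list(regions)   # earlier entries win where windows overlap
        self.mem = bytearray(0x10000)  # stand-in backing store for the 64 KiB map
        self.cycles = 0                # running count of simulated bus cycles

    def _region(self, addr):
        for r in self.regions:
            if r.start <= addr <= r.end:
                return r
        raise ValueError(f"unmapped address {addr:#06x}")

    def read(self, addr):
        self.cycles += 1 + self._region(addr).wait_states
        return self.mem[addr]

    def write(self, addr, value):
        self.cycles += 1 + self._region(addr).wait_states
        self.mem[addr] = value & 0xFF

    def remap(self, name, start, end):
        """Move a named region to a new address window at run time."""
        for r in self.regions:
            if r.name == name:
                r.start, r.end = start, end
                return
        raise KeyError(name)


bus = Bus([
    Region(0x0000, 0x7FFF, "sram", 0),
    Region(0x8000, 0x8FFF, "stdbus_io", 7),
    Region(0x9000, 0xFFFF, "rom", 0),
])
bus.write(0x0100, 0x42)  # local SRAM: 1 cycle
bus.read(0x8000)         # slow peripheral window: 1 + 7 cycles
print(bus.cycles)        # 9
```

Keeping the map as a list that can be edited at run time is what makes the “dynamic remapping” cheap in a software CPU: a call like `bus.remap("stdbus_io", 0xC000, 0xC0FF)` moves the I/O window without touching any hardware.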

I can see the appeal of the C64 laptop for Commodore fans, but also understand why purists get mad about modern recreations / continuations of the line. (I hear arguments all the time about the CX16 and F256 computers.)

Nice, especially the Gowin cheapo FPGA part. Got a link?

I’ve not posted any project page yet for my build, but the two FPGA modules I’m using are the Tang Nano 20K and Tang Primer 20K from Sipeed. The Nano is nice for its built-in SDRAM, but the trade-off is fewer I/O pins exposed. The Primer has plenty of I/O available, but you’re stuck with DDR3L, which can be tricky to use.

I’m inclined to think that it’s OK to emulate, but it’s increasingly less impressive the greater the host:target CPU performance ratio. Emulating a 68K Mac at (mid-range) 68030 speeds on a PowerPC at 66 MHz is impressive. Emulating a PDP-8/e (effectively 0.3 MHz) on a quad-core Pi running at 2-3 GHz per core? Not so much.
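A back-of-the-envelope version of that comparison, using clock speed alone. The 25 MHz figure for a “mid-range” 68030 and the 2.4 GHz Pi clock are assumptions picked from within the ranges in the comment, and a clock ratio ignores instructions per clock, caches, and JIT tricks, so treat the results as orders of magnitude only.

```python
def clock_headroom(host_mhz, target_mhz, host_cores=1):
    """Host:target clock ratio; a crude proxy that ignores IPC, caches and JITs."""
    return host_mhz * host_cores / target_mhz


# 68030 Mac at an assumed 25 MHz, emulated on a 66 MHz PowerPC
print(f"{clock_headroom(66, 25):.1f}x")      # 2.6x
# PDP-8/e (~0.3 MHz effective) on one assumed 2.4 GHz Pi core
print(f"{clock_headroom(2400, 0.3):,.0f}x")  # 8,000x
```

Even counting a single core, the Pi case has roughly 3,000 times the headroom of the PowerPC case, which is the gap the comment is pointing at.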

Running Apple’s file system and network stack on the emulated 68k for years was ‘impressive!’

Going to say it… For the most part, leave the ‘real’ hardware to museums and just use simulators going forward. For example, I’ve found it a lot of fun to just use DOS-X for retro-computing. Running Turbo Pascal and C, plus TASM, has been a blast, all on a RPI-5 500+. I am thinking of turning one of my Lego PCs into a retro PC now. The PiDP line is another example: you get to experience DEC platforms through small consoles that can sit on a shelf, rather than a large building with big power requirements… If you’re like me, you don’t have a lot of room to store a collection of ‘retro’-sized computers.

With 3D printing and easy PCB fabrication, it sure makes it ‘easier’ to simulate the old hardware, if desired, without touching the originals. Nothing wrong with bringing the old hardware to life either, for those so inclined.

The problem is that we’re increasingly losing ownership of our hardware; it’s getting more and more expensive and harder to find. How long until we can’t buy a computer and can only stream one? How long until someone decides that letting us run emulators on it doesn’t make enough money? Hardware is getting shitty, software is getting shitty (apart from Linux, for now), so I’m happy to keep collecting old computers, as I might find solace in these old machines from the psychotic needs of the tech bros and their campaign of ruining all the fun we used to have.

If computing 40 years ago was “speak to the AI to make a program” and locked down to the point it can’t actually be considered a computer anymore, I would’ve lost interest right away. It would’ve been just another toy with flashing lights to chuck into the closet and forget about with no depth or fun of exploring. I’m afraid this is the kind of future that is being forced upon the world.

It makes me appreciate the big 3-ring binders of program listings and the limited-resource BASIC I had in the 80s all the more.

Ok, boomer. :)

Most of us already did this 20-30 years ago when the emulator scene was young.

We played classic games on emulators such as Nesticle, Personal C64, UAE, ZSNES, or Genecyst.

DOS was still our second home; it provided unrestricted power to emulators.

The cool kids in town played PS1 titles on PC via Bleem! or on Mac via Connectix VGS.

In the early 2000s, we ran our beloved DOS games on the then-new Windows XP using the VDMSound project, until DOSBox had matured enough, beginning with 0.63 or so.

And then most of us re-discovered/remembered how awesome real hardware is/was.

We still used the emulators, which were great when “on the go” or when playing on a big screen, but we also started to enjoy and value retro computing more and more.

PS: The gurus among us had used PC emulators such as PC-Ditto way back in the 1980s. :)

Later, in the Windows 3.1 days (early 90s), most users knew about AppleWin, a somewhat popular Apple II emulator.

So emulation isn’t exactly a new concept. Terminal emulators are ancient, for example.

I both collect old systems and get them running again, and emulate. I have a bunch of emulated things running all the time (a Pi 4 running about 10 systems: PDP-11, VAX, S/370, dps8m; also one each of Oscar’s PDP kits; finally a little PC for Alpha emulation), but I only fire up the real iron on special occasions, because (for the computers and workstations, not so much the videogame consoles) it’s hot, it’s noisy, and (for everything) every time I feed it power I’m running the risk that this is the time something difficult to fix blows.

There’s no ‘real deal’. No matter what you’re doing, the modern ecosystem means you do it much differently than you would have 30+ years ago.

For example, my friend gave me an HP Omnibook 486 (it looks like an HP 95LX scaled up about twice in every dimension). When it was current, i never could have afforded it, of course. I wanted to know a little about its context so i found an old Computer Shopper magazine that someone had scanned. Which is to say, i downloaded a giant PDF in half a second and paged through it as fast as i could push the buttons; that’s not period correct! From that same issue, i found an ad for a PCMCIA SSD for it, and since the HDD it came with was suffering constant faults, i decided i wanted an SSD for it. To my surprise, i found basically the same SSD from the ad on ebay, but again, i was able to afford it, which is fantastically anachronistic. And to get it booting, I copied a bunch of files over from some modern supercomputer (budget laptop) running Linux.

Same friend left a VIA C3-based thin client with me. I found a plausible use case for it 6 months ago, so i downloaded a period-appropriate Debian install, which took a severely inappropriate number of seconds to download. I brought it up under QEMU so i would have a good platform for cross-compiling a kernel for it. I compiled a custom kernel in a totally wrong 45 seconds. To find stuff in the kernel source, i used ‘find . -type f | xargs grep blah’, which was not a plausible way to work with source in 2003. I was overall really pleased with how much easier it is to do 2003 tasks in 2025 than it was at the time. I had actually played with this same device many years ago and it was much easier this time.

Everything we do with old computers is only really plausible because new computers are so great, IMO. Every tool we use to bring back an old computer is a new tool. There’s surely some weirdo out there using old tools on old computers but fundamentally you can’t isolate yourself from the modern supply chain. We just don’t do it like we used to.

Honestly, it does seem like you’re dancing around the actual question because it’s difficult to justify not respecting history.

“to justify not respecting history”

ain’t nobody making that point.

idk, for me it’s the input/output: the keyboards, the screens (CRTs, although at some point emulating a CRT on an LCD should be fairly convincing, but then there was gas plasma!). The “feels” from a C64 are in no small part because of the keyboard. And using the Model M for vintage DOS: that’s respecting history to me. Also, the point of the PiDPs, to me at least, is the physical console, the switches. HP Omnibook 486: I’d be fine with replacing the entire interior, but the keyboard and screen, even the weight, that’s the experience! And using SSDs misses that point.

not really. The Model M is a good example: it’s not about the processing power in the keyboard, it IS about buckling spring key design. You might know of Unicomp and revival of Models F and M. Also, with 8-bit micros, one can have a fairly good understanding of ALL of it. That can’t be re-created with newer computers. FINALLY, these old computers couldn’t scrape user data. Sites like http://www.pcjs.org, at least theoretically, certainly can. Modern tech is increasingly dystopian, and vintage stuff IS QUITE NECESSARY for privacy these days. I like vintage laptops that are pre-TPM, for example.

That reminds me of the storyline of Snatcher,

a Japanese adventure game/visual novel from the late 80s that takes place in the mid-21st century.

In it, the character Gibson had stored his entire investigation report on a 5.25″ floppy using his vintage computer, to make sure it was safe.

https://en.wikipedia.org/wiki/Snatcher_(video_game)

Funny, I read old Byte Magazines at the local public library no problem.

I even borrowed some issues in the 2010s and scanned the interesting articles at home (topics such as OS/2 betas, OS/2 vs. NT, WfW, Mac emulation, etc.).

In that library they have an archive that you can order books/magazines from.

The media you can’t take home can be read at the library.

You can also bring your laptop and do your study at the library.

Really, you guys are strange.

You think that the internet is everything and that we “owe” it something.

But that’s not the case. If you’re willing to get your a** up, you can get information the classic way.

At least here in parts of Europe, where we still have real books and libraries.

By the way, real MS-DOS PCs are still being made.

There are various homebrew projects that involve XT level hardware.

Such as NuXT/NuXT 2.0, Micro8088, Xi 8088 or homebrew8088’s PC motherboard.

So you can use real 8088/V20/V30/40 CPUs and don’t have to rely on emulation (no FPGA or Pi needed).

Here’s an older HaD article:

https://hackaday.com/2022/05/21/building-your-own-8088-xt-motherboard/

Here’s a 1:1 IBM Model 5150 motherboard replica:

https://www.mtmscientific.com/pc-retro.html

Anyway, just saying. I don’t mean to advertise these, they’re just examples.

My point simply is that emulation is optional, that real hardware continues to exist.

In the future, I hope decent CRT simulators will be available, so that the image on an LCD looks the same as it already does when we film a CRT screen and then watch the recording on an LCD.

On that note, since tabletop VFD games are being emulated in MAME, I would like to see filters that can recreate the actual look of the VFD. As in partially diffused lighting, being able to see parts of the fine hexagonal grid over the lit segments, and even the faintly red glowing filament wires crossing over the display.

As of now, I really appreciate that the emulation of these games even exists, but things still seem a bit flat compared to an actual VFD.

Is Google AI “modern software”, Big Tech’s attempt to promote 1960s software technologies into gigabyte/terabyte-memory, low-watt nanocomputer software technologies?

LLVM/Clang, frameworks, toolchains: another Big Tech BAD IDEA?

FreeDOS has a 64-bit memory manager now. If only they could do a GPU driver. A multithreaded software renderer in DOS might do the trick, though. Might be too much to ask of such a diminutive OS.

i like neoretros better as you can screw around without breaking a priceless artifact (read: something you can sell on ebay for a few bucks).

Nothing inherently wrong with emulation as long as the emulation is provably correct, and even then only if the emulation is actively being promoted in an academic or authoritative sense. Otherwise, “good enough” can be good enough. That said, people settling for a “good enough” solution really need to keep themselves in check and not speak on things authoritatively.

The emulation of Taito’s Bubble Bobble was “good enough”, right up until someone worked out how to dump the protected 68705 microcontroller which, it turned out, had a rather significant effect on gameplay: The timing of when “extend” bubbles spawn, other item spawning, and more.

The emulation of Taito’s Operation Wolf was “good enough”, right up until the copy-protection chip was delayered and dumped as well. Then it turned out that using the best-guess simulation of the copy-protection chip, the levels were in the wrong order, bosses had the wrong amount of health, enemy patterns were wrong, and to a great extent, it wasn’t even like playing the same game.

The emulation of Capcom’s 1942 was “good enough”, then it turned out that there’s a box that displays an expanding-and-contracting animation behind the Capcom logo on the title and ending screens, which was previously shown in all emulators as two separate boxes moving back and forth. The hardware only has enough time to analyze 24 sprites per scanline, but RAM for 32 sprites: It always analyzes the first 16 sprites, but then analyzes the next or last 8 depending on how far down the screen it is. Each box was in a different group of 8 sprites, so they would be cut off at the mid-point of the screen, producing the expanding-and-contracting animation instead.
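The sprite-selection rule described above can be modeled in a few lines. This is a hypothetical sketch, not code from MAME or any other emulator: it assumes the extra group of 8 is slots 16-23 on the upper half of the screen and slots 24-31 on the lower half, and it uses a made-up 256-line screen split at line 128. A simplified emulator that scans all 32 slots draws both boxes whole; under the hardware rule, each one is cut off at the midpoint.

```python
# Hypothetical model of per-scanline sprite selection as described in the
# comment: 32 sprite slots in RAM, but only 24 examined per scanline.
SCREEN_HEIGHT = 256  # assumed; not the real board's visible height
MIDPOINT = SCREEN_HEIGHT // 2


def scanned_slots(scanline):
    """Slots the modeled hardware examines on a given scanline."""
    always = range(0, 16)  # the first 16 slots are always analyzed
    # Assumption: upper half gets slots 16-23, lower half gets 24-31.
    extra = range(16, 24) if scanline < MIDPOINT else range(24, 32)
    return set(always) | set(extra)


def drawn_rows(slot, top, height, accurate=True):
    """Scanlines on which a sprite stored in `slot` is actually drawn.

    accurate=False mimics a simplified emulator that scans all 32 slots.
    """
    rows = range(top, min(top + height, SCREEN_HEIGHT))
    if not accurate:
        return list(rows)
    return [y for y in rows if slot in scanned_slots(y)]


# Two boxes covering the same lines (100-159) but stored in different groups.
upper_box = drawn_rows(slot=20, top=100, height=60)
lower_box = drawn_rows(slot=28, top=100, height=60)
print(upper_box[0], upper_box[-1])  # 100 127
print(lower_box[0], lower_box[-1])  # 128 159
print(len(drawn_rows(20, 100, 60, accurate=False)))  # 60
```

Animating the boxes’ positions frame by frame under this rule is what turns two sliding boxes into the expanding-and-contracting shape; with `accurate=False`, you get the two separate boxes the older emulators showed.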

That last one may seem somewhat innocuous, but here’s the thing: Decades later, when a company went about writing a recreation of 1942 for one of those TV plug-and-play handheld things, the developers must have been using a flawed emulator as a reference, rather than a real arcade board, as the TV plug-and-play thing recreates the behavior of the flawed emulation, not the behavior of an actual arcade board.

Thus my point about not treating “good enough” as authoritative: There is literally the potential for a mistake in that “good enough” implementation to be mistaken for authoritative, perpetuating errors in what is tantamount to a rewriting of history.

Something like VICE, which is as close as possible to 100% hardware-accurate, with its results verified on real hardware? Something like WinUAE, which is the nearest thing to VICE but which covers the Amiga? Those are fine, as they are both intended to be, and verified to be, an accurate model of vintage hardware.

Sounds like an argument against DRM since the effect is “good enough” results.

Take your medication.

I emulate older machines on old machines already, so I’m fighting with myself here. Sometimes there isn’t an easy bridge to make things happen on the grumpier hardware, so emulation is the only way to get from here to there. I think that the retro computing hobby is essentially a bubbling stew pot of old and new (unless you’re rich or nuts and have one of everything).