As the first part of a series, [George Emad] takes us through a few examples of the Linux operating system being used in spacecraft. These range from SpaceX’s Dragon capsule to everyone’s favorite Martian helicopter. An interesting aspect is that the freshest Linux kernel isn’t necessarily onboard, as stability is far more important than having the latest whizzbang features. This is why SpaceX uses Linux kernel 3.2 (with real-time patches) on the primary flight computers of both Dragon and its rockets (Falcon 9 and Starship).

SpaceX’s flight computers use the typical triple redundancy setup, with three independent dual-core processors running the exact same calculations and a different Linux instance on each of its cores, and the result being compared afterwards. If any result doesn’t match that of the others, it is dropped. This approach also allows SpaceX to use fairly off-the-shelf (OTS) x86 computing hardware, with the flight software written in C++.

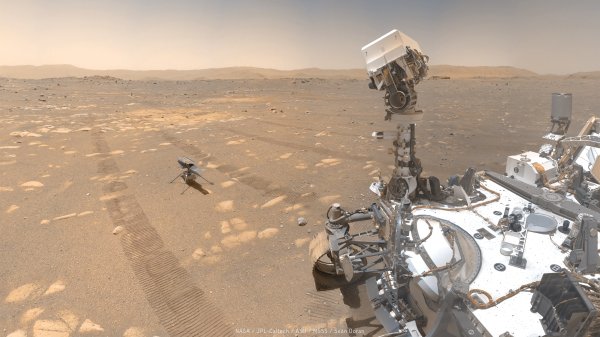

NASA’s efforts are similar, with Ingenuity in particular heavily using OTS parts, along with NASA’s open source, C++-based F’ (F Prime) framework. The chopper also uses some version of the Linux kernel on a Snapdragon 801 SoC, which as we have seen over the past 72 flights works very well.

Which is not to say using Linux is a no-brainer when it comes to use in avionics and similar critical applications. There is a lot of code in the monolithic Linux kernel that requires you to customize it for a specific task, especially if it’s on a resource-constrained platform. Linux isn’t particularly good at hard real-time applications either, but using it does provide access to a wealth of software and documentation — something that needs to be weighed up against the project’s needs.