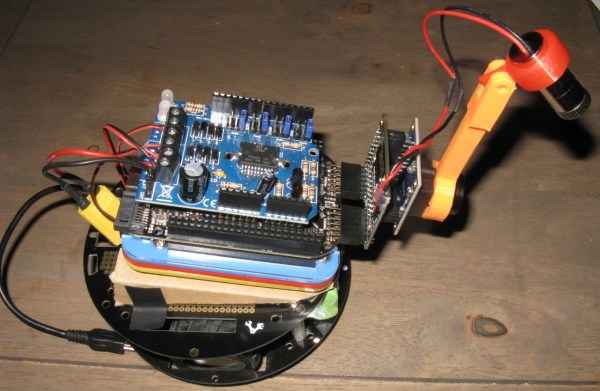

There comes a wonderful “MacGyver moment” in many hackers’ lives when we find ourselves with just the right assortment of scrap parts to build something awesome. That’s exactly what [dragonator] did with his gifted tech box from Instructables. He’s combined RGB LEDs, a Trinket, and a Hall effect sensor to paint a semicircular rainbow pattern on his bike wheel during night rides.

The theory behind the hack is well-known: given the time between pings from a Hall effect sensor responding to the magnet on a bike wheel, an embedded system can estimate the wheel rpm and predict when to light a particular color on the LEDs. [dragonator] uses the measured wheel speed to choose which LED pattern to display: either a slow breathing pulse or a half-circle rainbow that appears on the lower half of the bike rim. He lays out the needed equations and required components in a well-documented Instructable, so it’s easy to follow his trail.
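For a feel of the timing math, here’s a minimal Python sketch of the idea. It is not [dragonator]’s code. Every function name, the 1-second threshold, the choice to show the breathing pulse at low speed, and the convention that 0° is the top of the wheel (so the lower half spans 90° to 270°) are assumptions made for illustration. It also assumes the wheel speed stays constant between two pings.

```python
import colorsys

def wheel_rpm(period_s):
    """Wheel speed in rpm from the time between two sensor pings."""
    return 60.0 / period_s

def wheel_angle(now_s, last_ping_s, period_s):
    """Estimated rotation in degrees since the last ping.

    Assumes the wheel keeps the speed measured over the previous turn.
    """
    return ((now_s - last_ping_s) / period_s * 360.0) % 360.0

def choose_pattern(period_s, slow_threshold_s=1.0):
    """Pick a pattern from wheel speed (threshold and mapping are assumed)."""
    if period_s is None or period_s > slow_threshold_s:
        return "breathing"
    return "rainbow"

def led_color(led_offset_deg, angle_deg, arc_start=90.0, arc_end=270.0):
    """RGB color for one LED, or black when it is outside the lower arc.

    led_offset_deg is where the LED sits on the rim relative to the
    position the wheel is in at the moment of a ping.
    """
    position = (led_offset_deg + angle_deg) % 360.0
    if not (arc_start <= position < arc_end):
        return (0, 0, 0)
    # Sweep the hue across the arc so the half circle shows a full rainbow.
    hue = (position - arc_start) / (arc_end - arc_start)
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, 1.0)
    return (round(r * 255), round(g * 255), round(b * 255))
```

With a ping every half second, `wheel_rpm(0.5)` gives 120 rpm, and a quarter second after a ping the wheel has turned an estimated 180°. On a real microcontroller the same arithmetic would run on timer ticks rather than floating-point seconds.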

Persistence of Vision (POV) is a nice extension from blinking your first (or first hundred) LED(s). It’s just enough math to get the casual onlooker to cry “magic” and just enough embedded electronics to get those seasoned double-Es to nod their heads. If you’re new to the POV crowd, [dragonator’s] Instructable may be a great start.
