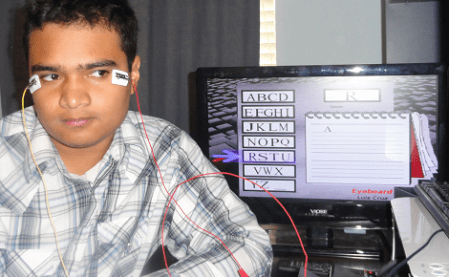

[Luis Cruz], a high school student in Honduras, built an amazing electrooculography system, and his writeup (PDF warning) of the project is one of the best we’ve seen.

[Luis] goes through the theory of the electrooculogram. The human eye is polarized from front to back, with the cornea positive relative to the retina because of a negative charge in the retina’s nerve endings. This small potential difference moves with the eye, so electrodes attached to the skin around the eye pick up voltage changes as the gaze shifts, which is enough to track where the user is looking. After feeding the electrode signals through op-amps to a microcontroller, [Luis] read the data into a Python script and wrote an “eyeboard” application that enables text input using only eye movement. The original goal of the project was to build an interface for people with severe disabilities, but [Luis] also sees applications in sleep research and in gathering marketing data.
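To give a feel for how raw electrode readings can become a gaze direction, here is a minimal Python sketch. It is not [Luis]’ code: it assumes the microcontroller streams pairs of ADC readings (horizontal, vertical) from two electrode pairs, and the function names `calibrate` and `classify_gaze`, the sign conventions, and the threshold value are all hypothetical. The idea is that rotating the eye shifts each electrode pair’s voltage away from its resting value, so comparing each reading against a baseline recorded while the user looks straight ahead yields a coarse direction.

```python
from statistics import fmean

def calibrate(center_samples):
    """Average (horizontal, vertical) ADC readings taken while the user
    looks straight ahead; the result serves as the resting baseline."""
    hs, vs = zip(*center_samples)
    return fmean(hs), fmean(vs)

def classify_gaze(sample, baseline, threshold):
    """Map one (horizontal, vertical) reading to a coarse gaze direction.

    Assumed (hypothetical) sign conventions: a positive horizontal
    deflection means the eye turned right, a positive vertical
    deflection means it turned up. `threshold` is in ADC counts.
    """
    h = sample[0] - baseline[0]
    v = sample[1] - baseline[1]
    # Small deflections are treated as looking straight ahead.
    if max(abs(h), abs(v)) < threshold:
        return "center"
    # Otherwise the channel with the larger deflection wins.
    if abs(h) >= abs(v):
        return "right" if h > 0 else "left"
    return "up" if v > 0 else "down"

if __name__ == "__main__":
    baseline = calibrate([(512, 500), (514, 502), (510, 498)])
    print(classify_gaze((600, 505), baseline, threshold=40))  # right
```

An eyeboard-style interface could then move a selection cursor one step per detected direction. A practical setup would also need to handle the slow baseline drift that EOG electrodes exhibit, for example by recalibrating periodically.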

We covered [Luis]’ homebrew 8-bit console last year, and he’s now controlling his Pong clone with his eye-tracking device. We’re reminded of a similar system developed by Atari, but [Luis]’ system uses a method that won’t give the user a headache after 15 minutes.

Check out [Luis] going through the capabilities of his interface after the break.