There’s a lot going on in our wireless world, and the number of packets whizzing back and forth between our devices is staggering. All this information can be a rich vein to mine for IoT hackers, but how do you zero in on the information that matters? That depends, of course, but if your application involves Bluetooth, you might be able to snoop in on the conversation relatively easily.

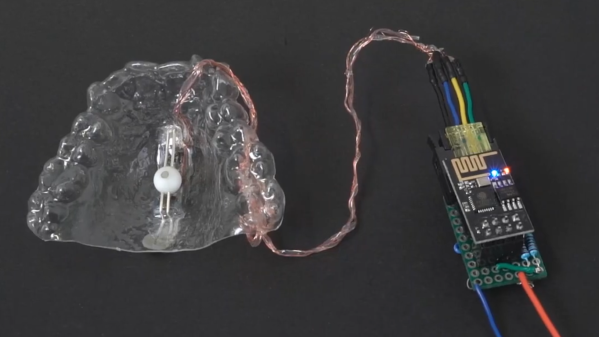

By way of explanation, we turn to [Mark Hughes] and his Boondock Echo, a device we’ve featured in these pages before. [Mark] needed to know how long the Echo would operate when powered by a battery bank, as well as specifics about the power draw over time. He had one of those Fnirsi USB power meter dongles, the kind that talks to a smartphone app over Bluetooth. To tap into the conversation, he enabled Host Control Interface logging on his phone and let the dongle and the app talk for a bit. The captured log file was then filtered through WireShark, leaving behind a list of all the Bluetooth packets to and from the dongle’s address.

That’s when the fun began. Using a little wetware pattern recognition, [Mark] was able to figure out the basic structure of each frame. Knowing the voltage range of USB power delivery helped him find the bytes representing voltage and current, which allowed him to throw together a Python program to talk to the dongle in real-time and get the critical numbers.

It’s not likely that all BLE-connected devices will be as amenable to reverse engineering as this dongle was, but this is still a great technique to keep in mind. We’ve got a couple of applications for this in mind already, in fact.

Continue reading “Bluetooth Dongle Gives Up Its Secrets With Quick Snooping Hack”

A Human-Computer Interface (or HCI) is what we use to control computers and what they use to

A Human-Computer Interface (or HCI) is what we use to control computers and what they use to