It’s that time of year again when the senior design projects come rolling in. [Ben], along with his partners [Cameron], [Carlton] and [Chris], has been working on something very ambitious since September: a robotic arm and hand, controlled by a Kinect, that copies the user’s movements.

The arm is a Lynxmotion AL5D, but instead of using the included software suite, the guys rolled their own control system built around an Arduino. The Kinect captures the position of the user’s arm, and that data is turned into commands for the arm’s servos.
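The write-up doesn’t say exactly how the Kinect data becomes servo commands, but one common approach is to compute each joint angle from three tracked skeleton points and map that angle onto a servo pulse width. Here’s a minimal sketch under those assumptions; the joint coordinates and the 500 to 2500 µs pulse range are hypothetical, not the team’s values.

```python
import math

def joint_angle(a, b, c):
    """Angle in degrees at joint b formed by points a-b-c, each an (x, y, z) tuple."""
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    norm = math.sqrt(sum(v * v for v in ba)) * math.sqrt(sum(v * v for v in bc))
    if norm == 0:
        raise ValueError("two joints share the same position")
    dot = sum(ba[i] * bc[i] for i in range(3))
    # Clamp to [-1, 1] so floating-point drift can't push acos out of its domain
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))

def angle_to_pulse_us(angle_deg, min_us=500, max_us=2500):
    """Linearly map 0-180 degrees onto a servo pulse width (assumed 500-2500 us range)."""
    angle_deg = max(0.0, min(180.0, angle_deg))
    return round(min_us + (max_us - min_us) * angle_deg / 180.0)

# Hypothetical skeleton points in meters: upper arm along x, forearm along y
shoulder = (0.0, 0.0, 2.0)
elbow = (0.3, 0.0, 2.0)
wrist = (0.3, 0.3, 2.0)
angle = joint_angle(shoulder, elbow, wrist)
print(round(angle, 1), angle_to_pulse_us(angle))
```

A straight arm gives 180 degrees at the elbow, so in practice the result would likely need an offset or inversion to match how the servo horn is mounted.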

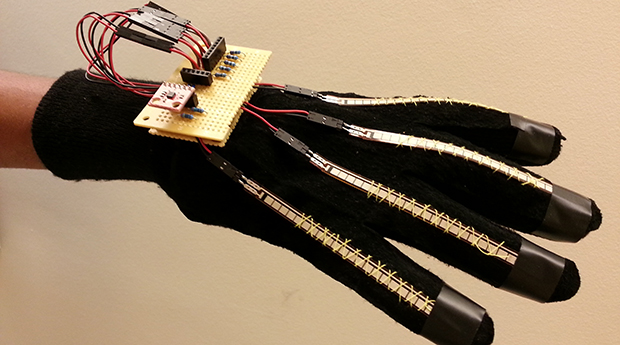

A Kinect’s resolution is limited, of course, so for everything beyond the wrist the team turned to another technology: flex resistors. A glove fitted with these flex resistors and an accelerometer provides the data needed to track the orientation of the hand and the bend of each finger.
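A flex resistor is usually read through a voltage divider on an analog pin, and an accelerometer at rest gives wrist tilt from the direction of gravity. The sketch below shows both conversions; the 10-bit ADC, the 47 kΩ fixed resistor, and the flat/bent calibration resistances are all assumptions rather than the team’s numbers.

```python
import math

ADC_MAX = 1023      # assumed 10-bit ADC, as on an Arduino Uno
R_FIXED = 47_000    # hypothetical fixed divider resistor, ohms

def flex_resistance(adc_reading):
    """Flex resistance for a divider wired VCC -> R_flex -> analog pin -> R_FIXED -> GND.

    The reading is ratiometric (Vout / VCC = adc / ADC_MAX), so VCC cancels out.
    """
    if adc_reading <= 0:
        raise ValueError("a reading of 0 implies an open circuit")
    return R_FIXED * (ADC_MAX / adc_reading - 1)

def bend_angle(r_flex, r_flat=25_000, r_bent=100_000):
    """Interpolate a 0-90 degree bend between two hypothetical calibration resistances."""
    fraction = (r_flex - r_flat) / (r_bent - r_flat)
    return 90.0 * max(0.0, min(1.0, fraction))

def wrist_tilt(ax, ay, az):
    """Pitch and roll in degrees from a static accelerometer reading (in g).

    One common convention; the signs depend on how the sensor sits on the glove.
    """
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll

# An ADC reading of 341 means Vout = VCC / 3, i.e. R_flex = 2 * R_FIXED
print(bend_angle(flex_resistance(341)))
print(wrist_tilt(0.0, 0.0, 1.0))  # glove lying flat
```

The tilt formulas only hold while the hand is roughly still; during fast motion the accelerometer also picks up the hand’s own acceleration.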

This data is sent to a second Arduino on the build, which handles the wrist and fingers of the robotic arm. As shown in the videos below, the arm performs remarkably well, rivaling the best Waldos you’ve ever seen.
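The post doesn’t describe the link between the two Arduinos. If it were a plain serial connection, one simple option is a fixed-length frame with a start byte and a checksum, so the receiver can resync after a dropped byte. The frame layout below (a 0xFF start byte, one byte per servo angle, and a sum-mod-255 checksum) is entirely hypothetical.

```python
START = 0xFF  # hypothetical frame marker; servo angles never reach 255

def encode_frame(angles):
    """Pack servo angles into START + one byte per angle (clamped to 0-180) + checksum."""
    data = bytes(max(0, min(180, int(a))) for a in angles)
    checksum = sum(data) % 255  # always below 0xFF, so it can't be mistaken for START
    return bytes([START]) + data + bytes([checksum])

def decode_frames(stream, n_channels):
    """Yield each valid frame's angles from a raw byte stream, skipping noise and corrupt frames."""
    frame_len = n_channels + 2
    i = 0
    while i + frame_len <= len(stream):
        if stream[i] != START:
            i += 1  # not a frame boundary; keep scanning
            continue
        data = stream[i + 1 : i + 1 + n_channels]
        checksum = stream[i + 1 + n_channels]
        if sum(data) % 255 == checksum:
            yield list(data)
            i += frame_len
        else:
            i += 1  # corrupt frame: resync on the next start byte

# Five fingers plus wrist pitch and roll, preceded by some line noise
frame = encode_frame([10, 20, 30, 40, 50, 90, 90])
print(list(decode_frames(b"\x00\x07" + frame + frame, 7)))
```

Because every angle is capped at 180 and the checksum is taken mod 255, no payload byte can ever equal 0xFF, which is what lets the decoder treat 0xFF as an unambiguous frame boundary.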

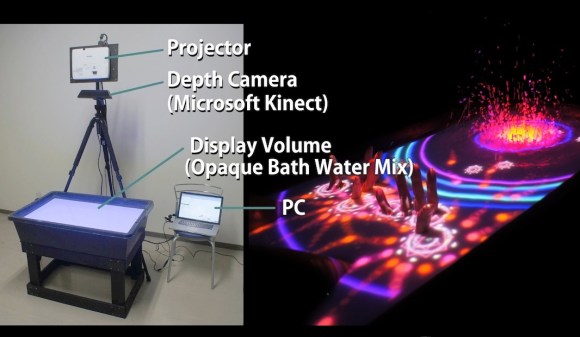
