There’s an old saying (paraphrasing a quote attributed to Hoare): “I don’t know what language scientists will use in the future, but I know it will be called Fortran.” The truth is, there is a ton of very sophisticated code in Fortran, and if you want to do something more modern, it is often easier to borrow it than to reinvent the wheel. When [Valgriz] picked up a textbook on aircraft simulation, he noted that it had an F-16 simulation in it. In Fortran. The challenge? Port it to Unity3D.

If you have a gamepad, you can try the result. However, the real payoff is the blog posts describing what he did. They go back to 2021, although the most recent was a few months ago, and they cover the entire process in great detail. You can also find the code on GitHub. If you are interested in flight simulation, flying, Fortran, or Unity3D, you’ll want to settle in and read all four posts. That will take some time.


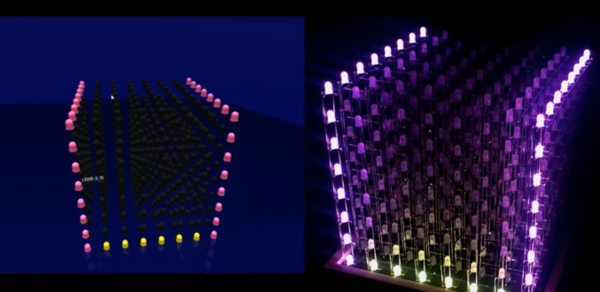
