The word supercomputer gets thrown around quite a bit. The original Cray-1, for example, operated at about 150 MIPS and had about eight megabytes of memory. A modern Intel i7 CPU can hit almost 250,000 MIPS and is unlikely to have less than eight gigabytes of memory, and probably has quite a bit more. Sure, MIPS isn’t a great performance number, but clearly, a top-end PC is way more powerful than the old Cray. The problem is, it’s never enough.

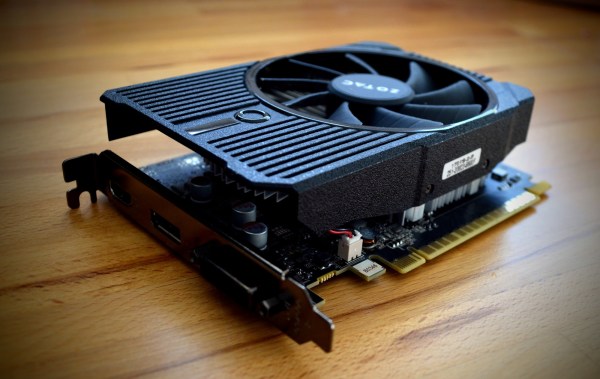

Today’s computers have to processes huge numbers of pixels, video data, audio data, neural networks, and long key encryption. Because of this, video cards have become what in the old days would have been called vector processors. That is, they are optimized to do operations on multiple data items in parallel. There are a few standards for using the video card processing for computation and today I’m going to show you how simple it is to use CUDA — the NVIDIA proprietary library for this task. You can also use OpenCL which works with many different kinds of hardware, but I’ll show you that it is a bit more verbose.

Today’s computers have to processes huge numbers of pixels, video data, audio data, neural networks, and long key encryption. Because of this, video cards have become what in the old days would have been called vector processors. That is, they are optimized to do operations on multiple data items in parallel. There are a few standards for using the video card processing for computation and today I’m going to show you how simple it is to use CUDA — the NVIDIA proprietary library for this task. You can also use OpenCL which works with many different kinds of hardware, but I’ll show you that it is a bit more verbose.

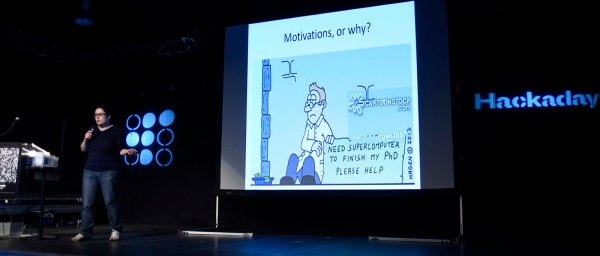

Continue reading “CUDA Is Like Owning A Supercomputer”