[Paul Stoffregen], creator of the Teensy series of microcontroller dev boards, noticed a lot of projects driving huge LED arrays recently and decided to look into how fast microcontroller dev boards can receive data from a computer. More bits per second means more glowey LEDs, of course, so his benchmarking efforts are sure to be a hit with anyone planning some large-scale microcontroller projects.

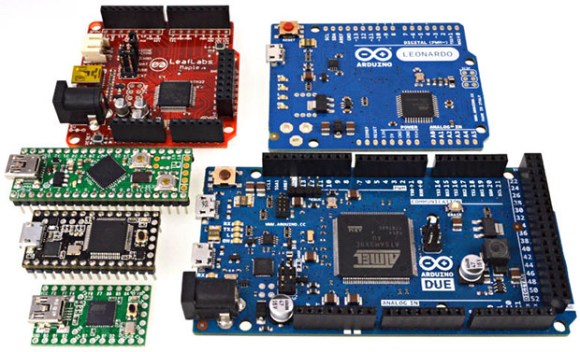

The microcontrollers [Paul] tested included the Teensy 2.0, Teensy 3.0, the Leonardo and Due Arduinos, and the Fubarino Mini and Leaflabs Maple. These were tested in Linux (Ubuntu 12.04 live CD), OS X Lion, and Windows 7, all running on a 2012 MacBook Pro. When not considering the Teensy 2.0 and 3.0, the results of the tests were what you would expect: faster devices were able to receive more bytes per second. When the Teensys were thrown into the mix, though, the results changed drastically. The Teensy 2.0, with the same microcontroller as the Arduino Leonardo, was able to outperform every board except for the Teensy 3.0.

[Paul] also took the effort to benchmark the different operating systems he used. Bottom line, if you’re transferring a lot of bytes at once, it really doesn’t matter which OS you’re using. For transferring small amounts of data, you may want to go with OS X. Windows is terrible for transferring single bytes; at one byte per transfer, Windows only manages 4kBps. With the same task, Linux and OS X manage about 53 and 860 (!) kBps, respectively.

So there you go. If you’re building a huge LED array, use a Teensy 3.0 with a MacBook. Of course [Paul] made all the code for his benchmarks open source, so feel free to replicate this experiment.

Totally awesome! I am just upgrading a project to an ATMega32U4 and wondered this same thing.

With native USB support I am not surprised the Teensy kicks so much ass. Everything else is capped by having to do TTL serial->USB and presumably stuck at 115200 bit rate. I do loves my Teensies.

The STM32 on the Maple at least has native USB, it’s just being set up as a virtual serial by libMaple and wirish. I’m sure if he used the USB capabilities directly, it would be faster.

Actually, it looks like all of those have USB directly to the MCU.

I don’t want to call out “that’s bullshit” but… you know.

If you got an MCU with native hardware and you’re still using USB-CDC to transfer data it’s pretty much solid proof you’re dumb.

Using a custom USB class (YES, that *includes* reading about and understanding USB and brings your fancy LED cube project from soldering two thousand LEDs together to a project where you need to think) you can achieve much higher transfer speeds with LESS code on the MCU.

For reference: I managed to get close to 3 MB/s via bulk transfers on a XMega. Of course without any of that Arduino stuff.

Do you mean 3 megabytes/second or 3 megabits/second?

3 megabytes/second would be pretty unbelievable, since the XMega chips have 12 Mbit/sec USB which has a raw wire speed of only 1.5 megabytes/sec and a maximum theoretical transfer rate of 1.216 megabytes/second with USB bulk protocol overhead (not including the extra data-dependent overhead from the bit stuffing algorithm).

3 megabits/second would be 375000 bytes/sec. That’s pretty good, roughly in the middle of the range of the 6 boards I tested.
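For anyone who wants to check those figures, here is a minimal Python sketch of the arithmetic. The function and constant names are made up for illustration. The figure of 19 bulk packets per frame is the commonly quoted maximum for 64-byte bulk transactions in one 1 ms full-speed frame, and it is what produces the 1.216 megabytes/second number. Bit stuffing is ignored, as in the comment above.

```python
# Full-speed USB arithmetic (illustrative names, not from any library).
FRAMES_PER_SEC = 1000          # full-speed USB uses 1 ms frames
BULK_PACKET_BYTES = 64         # largest bulk packet at full speed
BULK_PACKETS_PER_FRAME = 19    # commonly quoted maximum per frame


def raw_bytes_per_sec(bits_per_sec: int) -> int:
    """Convert a wire speed in bits/sec to bytes/sec (8 bits per byte)."""
    return bits_per_sec // 8


def max_bulk_bytes_per_sec() -> int:
    """Best-case bulk payload rate on a full-speed bus."""
    return BULK_PACKETS_PER_FRAME * BULK_PACKET_BYTES * FRAMES_PER_SEC


if __name__ == "__main__":
    print("raw wire speed :", raw_bytes_per_sec(12_000_000), "bytes/sec")
    print("bulk maximum   :", max_bulk_bytes_per_sec(), "bytes/sec")
    print("3 megabits/sec :", raw_bytes_per_sec(3_000_000), "bytes/sec")
```

Anything claimed above the raw wire speed on a 12 Mbit/sec device cannot be payload throughput, which is why the megabytes-versus-megabits question matters.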

FWIW with my own stack (IMO LUFA is a mess and ASF is even worse), I was getting a hair under 10Mbps (~1.1MB/sec) with bulk reads from the Xmega, multiple outstanding queries on the host side, but no ping-pong on the MCU (empty packets, so no significant loss).

A big advantage of USB CDC is the ease of use. Except for Win 8, it requires minimal fiddling with drivers, is supported by almost any programming language and has great tools to inspect, log and manipulate the data.

Once you have your library set up (using lufa this is pretty easy), it is trivial to use and done correctly supports speeds which should be sufficient for most applications.

Sure, if you have a large project with custom support software on the host, large amounts of data and speed constraints, go for a custom device, but as far as I am concerned a serial interface is still the lingua franca of the home electronics world.

Never underestimate usefulness of readily available drivers. CDC-ACM can be had in both windows and linux, it is not speed limited, and rather simple.

So – yes, I use CDC-ACM, and I wrote code for CDC-EEM (ethernet emulation model). Both are simple, both have readily available drivers and can be controlled by standard tools (a big plus in my opinion). I do not trust my ability to write good low-level code, so I leave writing drivers and support stuff to those who do it, and would rather finish the task in hand than debug driver/connectivity utility code for ages.

Ignoring an existing standard USB class that has drivers is dumb, in my opinion. IMO, going for a non-standard solution in the case of protocols should be a matter of last resort, and not the result of “not invented here, so it is dumb” syndrome.

Can you share the CDC-EEM code?

I would be interested in the CDC-EEM code as well. Can you share it?

If the Stellaris only transfers at 11k, why does it connect at 1500000 in minicom through the debug port?

minicom -D/dev/ttyACM0 -b1500000

It connects and transfers just fine even though I didn’t do any actual benchmarking.

You have to set the port uart speed in your code too.

UARTStdioInitExpClk(0,1500000);

Why don’t you do some actual benchmarking? I published all the code, so you can do it pretty easily. The debug port on that Launchpad board appears to be connected through a hardware UART, so 1500000 baud ought to give 150000 bytes/sec speed, which would at least put it faster than the 2 slowest boards in the test.

So I just compiled and ran your program and I got.

port /dev/ttyACM0 opened

ignoring startup buffering…

ignoring startup buffering…

ignoring startup buffering…

ignoring startup buffering…

ignoring startup buffering…

Bytes per second = 11516

Bytes per second = 11516

Bytes per second = 11516

Bytes per second = 11516

Bytes per second = 11527

Bytes per second = 11516

Bytes per second = 11516

Bytes per second = 11516

Bytes per second = 11516

Bytes per second = 11527

Bytes per second = 11515

Bytes per second = 11516

Bytes per second = 11516

Bytes per second = 11516

Bytes per second = 11527

Average bytes per second = 11518

Is this all the speed I’m getting?

I changed

#define BAUD 115200

to

#define BAUD 1500000

and ran make

then I got

Average bytes per second = 64101
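A quick way to put those two results in context is to compare them with the theoretical limit of the serial link. This is a minimal Python sketch that assumes the usual 8N1 framing (1 start bit, 8 data bits, 1 stop bit, so 10 bits on the wire per byte). The function names are invented for illustration.

```python
# Compare measured throughput against the UART limit (8N1 framing assumed).
BITS_PER_BYTE_8N1 = 10  # 1 start + 8 data + 1 stop bit


def uart_bytes_per_sec(baud: int) -> float:
    """Theoretical maximum payload rate for a given baud rate."""
    return baud / BITS_PER_BYTE_8N1


def efficiency(measured_bytes_per_sec: float, baud: int) -> float:
    """Fraction of the theoretical UART rate that was actually achieved."""
    return measured_bytes_per_sec / uart_bytes_per_sec(baud)


if __name__ == "__main__":
    for baud, measured in ((115200, 11518), (1500000, 64101)):
        print(baud, "baud:", uart_bytes_per_sec(baud), "bytes/sec max,",
              f"{efficiency(measured, baud):.1%} achieved")
```

Under that assumption, the 115200 baud run is essentially saturating the link, while the 1500000 baud run reaches a bit under half of what the baud rate would allow, so something other than the baud rate is limiting it.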

If you’re testing Stellaris or any other board that involves a hardware serial link between 2 chips, please measure the frequency on pin 2 during the test. If you get a significantly different measurement on pin 2 (indicating the received kbytes/sec rate) than the speed reported on your computer (indicating the transmitted kbytes/sec rate), then the serial buffer is being overrun. Ordinary hardware serial does not necessarily have end-to-end flow control like USB, so it really is important to measure the speed on both sides. I put that code into the benchmark to toggle pin 2 for exactly this reason, so the speed on both sides of the link could be verified.

Perhaps I’m not understanding the main point of this in relation to driving large LED arrays. Why would I ever want to do that via USB? For small displays, sure no problem. For larger displays I’d probably go with an FPGA to convert the digital video output from a PC/Mac to control the LED array. Large format displays would likely require multiple independent refresh zones to maintain video update rates. Maybe I’m just thinking about a different design goal than the original posting.

maybe you wouldn’t want to use usb for large arrays, but others might. going from usb to fpga is a big step up, one that has a steep learning curve.

The ChipKit Max32 can support usb speeds up to 3Mbs, I use this to drive a LED strip of 2800 RGB leds

I’d say the HaD headline is a little misleading as the benchmarking is clearly only for USB CDC (virtual serial) which is not designed for high-speed bulk data transfer (and the Host OS comparisons are also serial only rather than USB in general). USB supports other protocols (many of which are supported by the LUFA USB library) which offer much better transfer rates. If you are wanting to control a big LED matrix, using CDC is a poor implementation choice to begin with IMHO. Kudos to Paul for publishing the tests and results, within the context of Virtual Serial over USB they are very interesting.

CDC serial does use USB bulk transfer protocol. In fact, after some setup with control transfers for serial parameters, it pretty much just maps I/O operations to USB bulk transfer operations. On Macintosh, the driver is obviously doing some really crafty things to combine small writes together. Linux appears to just pass your reads and writes through as bulk protocol transfers to the host controller chip. Who knows what Windows is doing to be so slow?

I would not necessarily assume LUFA is fast. Until good measurements are made, nobody really knows. LUFA certainly does have a LOT of really complex code, which might be carefully constructed to enhance its speed, or might be syntactic sugar that’s optimized away by the compiler, or might incur a lot of overhead.

You make some valid points Paul. Although I would still argue that CDC is not the way to go (for applications like LED matrix driving) since you can overcome both host and device overhead by optimising both sides to the size of data you are transferring. With CDC you are relying on the host OS to guess the right block size for you (which probably explains the big difference in speeds based on how much data you transfer). CDC also means you are running through the serial port interface code of the OS which may or may not be optimised for the higher throughput available via USB and adds more code in the way of the IO. I’d be interested in seeing a LUFA CDC benchmark though.

Your point is valid, that CDC does rely on the host driver. That’s why I went to the trouble to test the 3 different hosts at 3 different write sizes. Certainly you could do better by crafting a completely custom solution on both the host and device, which requires a LOT of work. But look at how well the Mac driver deals with small writes! If your data is naturally a large number of small messages, I believe you’d have to work very, very hard to achieve something that works as well as CDC with Apple’s driver with a Teensy3 and extremely easy programming in Arduino. Then again, if you’re targeting Windows, the driver is so bad that you really must go to a lot of extra work to either use large writes or develop a completely custom solution.
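The "use large writes" option can be approximated in user space without touching the driver. The following is only a sketch of that idea in Python, not anything from the published benchmark code: `CoalescingWriter` is a hypothetical helper that collects small messages and hands them to a sink in fixed-size chunks. The sink is any callable that takes bytes, for example the `write` method of whatever object represents your open serial port.

```python
# Coalesce many small writes into fewer large ones (hypothetical helper).
from typing import Callable


class CoalescingWriter:
    """Buffer small writes and pass them on in chunk_size pieces."""

    def __init__(self, sink: Callable[[bytes], object],
                 chunk_size: int = 4096) -> None:
        if chunk_size <= 0:
            raise ValueError("chunk_size must be positive")
        self._sink = sink
        self._chunk_size = chunk_size
        self._pending = bytearray()

    def write(self, data: bytes) -> None:
        """Queue data; emit full chunks as soon as they are available."""
        self._pending += data
        while len(self._pending) >= self._chunk_size:
            self._sink(bytes(self._pending[:self._chunk_size]))
            del self._pending[:self._chunk_size]

    def flush(self) -> None:
        """Send whatever is left, even if it is smaller than a chunk."""
        if self._pending:
            self._sink(bytes(self._pending))
            self._pending.clear()


if __name__ == "__main__":
    sent = []
    writer = CoalescingWriter(sent.append, chunk_size=4)
    for value in b"abcdefghij":
        writer.write(bytes([value]))   # ten one-byte writes
    writer.flush()
    print(sent)                        # three writes reach the sink
```

The trade-off is latency: data sits in the buffer until a chunk fills or `flush()` is called, so anything time-critical needs an explicit flush at the end of each frame or message.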

I too would like to see someone run this benchmark on LUFA. Obviously LUFA can’t run my Arduino sketches, but maybe someone could port my code to LUFA and give it a try with the host-side code I published.

Teensy is just amazing!

Using the same chip as Arduino DUE (Atmel SAM3U) on a custom pcb, I reached 30MB/s (yes megabytes) using CDC ACM Linux on the other side. I am pretty sure I can get 30 to 50% extra throughput, optimizing both ends of the usb link.

This ATMEL chip has (a fantastic) 2.0 HS (480Mb) usb, whereas most others only have 2.0 FS (12Mb) usb, this is where the difference lies.

I can second this statement as I have run into similar bitrates with my ATMEL boards (most microcontrollers I have tested state USB 2.0 compliance but only implement Full-Speed 12Mbit). Usually one has to get a separate USB chip like a Cypress EZ-USB FX2 or FTDI FT2232H to get those sweet High-Speed USB datarates.

That being said I really like the virtual COM port implementations as they are integrated on most operating systems and thus require no special drivers and can be instantiated in virtually any software project that does not really need those high datarates.

We at my former employer (HCC-Embedded) were able to get ~20-25 MiB/s using mass-storage connected to a ramdrive on high-speed capable micro-controllers.

Similar speed can be achieved with CDC, though Windows (XP and Win7) inserted big gaps between transfers, mainly because of the size of the kernel side buffers (64KiB for mass-storage and ~16 KiB for CDC).

Mass-storage was tested using dd (cygwin) with 2M block-size. CDC was tested with copy (copy data.bin COMx).

Some of the Cypress micros have a USB peripheral which can handle at least 24MByte/s. Saleae have built a logic analyser (24Msps, 8 channels) around this chip. These chips + pcb + case + usb connector + 74HCxx buffer sell for less than USD10 on ebay / aliexpress.

http://sigrok.org/wiki/File:Mcu123_saleae_logic_clone_pcb_top.jpg

At the moment I’m still struggling a bit to get sigrok working.

That Cypress chip is indeed impressive. In fact, there are lots of amazing USB chips on the market. But can they run Arduino sketches and Arduino libraries, and this benchmark which shows the speed you get when using easy Arduino-style programming?

“That cypress chip” is the fx2 series – and as far as being Arduino-like? HELL NO.

They are a triple-headed beast of complexity, with one head being the configurable USB 2.0 interface, the other being a programmable state machine that can actually get to the external world at up to 48 MHz, 16 bit (96 MB/s!), and the third? An “enhanced”, von-neumanized, 8051 microcontroller, which it is necessary to program to then set up the other two “heads”.

Just to confuse matters (a LOT!) these chips have pre-programmed firmware built-in. Some of this firmware handles part of the usb request protocol, and it’s quite easy to think that you’ve got full control over it, whilst actually some of the original code is still running… However, this feature also makes these chips “unbrickable” since you can always temporarily disconnect or short out the eeprom chip whilst plugging in, to force the original firmware condition.

There is a version of this chip that can talk lower voltages (down to ~1.8V) which removes this feature, but it’s only available in 56-pin BGA. (also watch out – the ifclk pin will always run at 3.3v if set to be a clock output – I killed my PapilioOne that way).

By far the cleanest development packages for this thing are GPL’d by ztex.de – they sell some boards with an fx2 + fpga and ram.

(the ram is quite a bit more necessary than you’d think, if you want sustained bandwidth.)

The best you’ll actually be able to get (assuming you implement a huge fifo in the dram) is about 30 to 40 MB/s sustained. Also assuming you’re using one of the better intel root hubs, under linux, with the “fx2pipe” program that Wolfgang Wieser wrote (see triplespark.net ). The ztex SDK is implemented mostly in java, and it uses libusb to talk, which isn’t quite as fast as going straight to linux kernel like fx2pipe does.

BTW, sustained BW is hard to manage – libusb quite routinely drops the ball for 4 milliseconds or more. USB is host-controlled: the host talks, and devices speak only when spoken to. This means that if the host OS couldn’t be bothered right at the moment, data goes nowhere. A 4KB fifo runs out real quick at ~40 MB/s.
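To put rough numbers on "real quick", here is a small Python sketch of the buffering arithmetic. It assumes 4KB means 4096 bytes and 40 MB/s means 40,000,000 bytes per second. The function names are illustrative only.

```python
# How long a FIFO lasts, and how much buffer a host stall needs.

def fifo_drain_time_us(fifo_bytes: int, rate_bytes_per_sec: int) -> float:
    """Microseconds until a full FIFO is empty (or an empty one is full)."""
    return fifo_bytes * 1_000_000 / rate_bytes_per_sec


def buffer_needed_bytes(stall_ms: int, rate_bytes_per_sec: int) -> int:
    """Bytes that pile up while the host ignores the device for stall_ms."""
    return stall_ms * rate_bytes_per_sec // 1000


if __name__ == "__main__":
    rate = 40_000_000  # ~40 MB/s, as quoted above
    print("4KB fifo lasts", fifo_drain_time_us(4096, rate), "microseconds")
    print("a 4 ms stall needs", buffer_needed_bytes(4, rate), "bytes")
```

Under those assumptions the on-chip fifo covers roughly a tenth of a millisecond, while a single 4 ms stall needs about 160 KB, which is why the external DRAM comes up.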

Cheap cy7c68013a boards can be had from ebay for about $10ea, however note that depending on the chip you get, you may find that the GPIF master input pins seem mysteriously disconnected, and just act like they’re always high. Counterfeits perhaps? Fortunately you will need at least an fpga to drive the dram necessary to cover for the PC’s time off at lunch, and slave-fifo mode still works perfectly.

Unfortunately fx2pipe isn’t quite compatible with ztex boards (yet…) because the fx2 is also responsible for resetting and configuring the fpga, and fx2pipe expects to be able to just upload its own firmware to the fx2 whenever it starts….

That said, the ztex boards are so cheap (compared to finding another fx2 + fpga + dram board) that there’s not much point rolling your own. I really wish I’d run across them earlier – they’re by far the easiest way to easily get heaps of bandwidth. (at least up to the ~30 MB/s mark).

Mind you, I’m talking most host -> PC bandwidth, but the opposite direction is going to run into similar issues.

Cypress also make a USB 3.0 “FX3” which will be interesting. Easiest way into one of those would presently be the BladeRF kickstarter. The FX3 is quite different – it’s a fully-fledged ARM SoC, and is only available in BGA package, and has a linux-compatible SDK.

(PS, freeSoC has a fx2 as well, but it’s wired as only a usb->serial converter, and the high bandwidth pins aren’t connected at all ):

Hi Remy,

thanks for the overview of the learning curve for these cypress chips. Another important issue would be the availability of an affordable / free C compiler for these things.

And when performance of my avr projects becomes an issue, boards like the Raspi or Beaglebone Black are far more likely for me to switch over to than the cypress controllers. But those 4000+ pages of a datasheet for those processors are a bitch to get through… Probably be better off reading a book about embedded linux/kernel.

For those wondering why OSX outperforms Linux, apparently the kernel devs are looking into it.

https://plus.google.com/111049168280159033135/posts/iGEcktD99NS

It’s because Mac does buffering to send your small requests out as a larger packet. It plays merry hell with latency for anything trying to pretend it’s realtime though.

You’re right about the buffering.

However, regarding latency, I’ve done quite a bit of benchmarking there too. Those results show OS-X also achieves the lowest latencies. Some of the early benchmarking was published at neophob.com about 2 years ago and mentioned here on HaD. More recent tests have been discussed on the pjrc forums. I’ll probably redo those tests on my growing collection of boards and publish another page in a month or two.

I know that seems contradictory, excellent throughput with buffering and also the lowest latency. The only explanation I can come up with is Apple must have some really smart developers who care deeply about good results.

I think it’s just because Apple are really a computer engineering company, at heart. They must use oscilloscopes to measure performance, and they do care very deeply about performance in that way specific to engineers…

It’s only surprising if you think the slick presentation is all there is to Apple.

Well, here you have some independently verified experimental proof.

(disclaimer: I don’t work for Apple, but am a long time user, and clearly could be described somewhat as a fanboi. However, apart from my phone, I have only one Macbook. I have, at this time, 9 separate running Linux systems, mostly Ubuntu with a dash of Mint.)

I’ve played with Teensy 2.0. You can do USB bulk transfers about as fast as the USB full-speed bus can handle, if you’re willing to deal directly with USB, maybe write some assembly code, and leave little time for actual computation.

With the new Teensyduino 1.14 release, you can also have nearly that speed using only Serial.readBytes(). No difficult programming required.

Seems like the natural USB interface for driving a bunch of LEDs would be HID.

A cube is someplace between the half dozen or so LEDs typical on a game controller and the matrix of values on an LCD.

Using HID would mean not having to do an OS driver.

How does HID compare for speed to CDC?

Are there some limitations that mean it doesn’t work for large numbers of LEDs?

HID works great for a small number of LEDs, like the 3 LEDs on your keyboard. It might work well for small to mid-size LED cubes. But HID is not suited for moving large amounts of data, like displaying 30 or 60 frames/sec video or data derived from video (eg, Ambilight) on large LED installations.
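To get a feel for what "large amounts of data" means here, this Python sketch estimates the raw pixel bandwidth of an LED installation. It assumes 3 bytes per RGB LED (8 bits per colour channel) and no protocol overhead. The function names are made up, and the 2800-LED strip and 3 megabit/sec link are simply the example figures mentioned elsewhere in this thread.

```python
# Rough bandwidth estimate for streaming frames to RGB LEDs.

def bytes_per_sec_needed(num_leds: int, fps: int,
                         bytes_per_led: int = 3) -> int:
    """Payload rate needed to refresh every LED fps times per second."""
    return num_leds * bytes_per_led * fps


def max_fps(link_bytes_per_sec: int, num_leds: int,
            bytes_per_led: int = 3) -> float:
    """Highest frame rate a link of the given speed could sustain."""
    return link_bytes_per_sec / (num_leds * bytes_per_led)


if __name__ == "__main__":
    for fps in (30, 60):
        print(f"2800 LEDs at {fps} frames/sec:",
              bytes_per_sec_needed(2800, fps), "bytes/sec")
    print("frames/sec possible at 375000 bytes/sec:",
          round(max_fps(375_000, 2800), 1))
```

Even a few thousand LEDs at video frame rates need hundreds of kilobytes per second, which is comfortably inside what the faster boards in the benchmark manage over CDC.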

USB has 4 transfer types, each with different bandwidth allocation strategies by the host controller chip (in your PC or Mac). HID protocol uses the USB “interrupt” transfer type, which has guaranteed latency, but very limited bandwidth. You can read more about the 4 transfer types in the USB specification, or any number of articles people have written that are based on the spec with less technical explanation.

If you use “feature reports”, then HID traffic goes over the control channel. This one is slower than bulk, but much faster than interrupt. On a full speed bus bulk is able to do ~19 transactions per frame which is about 1.2 MiB/sec. The control endpoint has an additional setup and handshake phase, which – depending on the host controller type – might go into a dedicated frame. Thus you have a 2 ms increase in transfer time compared to bulk. This is not so bad. On the other hand, drivers above bulk are optimized differently: they have a larger kernel side buffer and somewhat different control logic, so the gap between bulk and control increases. On high-speed devices the gap is far bigger because bulk uses 512 byte long packets, and control only 64.

A useful test. With the Arduino Due I got the same bad performance.

Changing the sketch a bit makes a big difference, though.

With the code below and using 4k blocks I got a throughput of 2.0MBytes/sec. More than 15 times faster.

#define USBSERIAL SerialUSB // Arduino Due, Maple

void setup() {

USBSERIAL.begin(115200);

}

void loop() {

char buf[4096]; // must be at least as large as the length passed to USBD_Recv

while (USBD_Available(CDC_RX)) {

USBD_Recv(CDC_RX, &buf, 4096);

}

udd_ack_fifocon(CDC_RX);

}

For real USB 2.0 Hi Speed, you need three chips: A HiSpeed capable USB- FIFO chip, an FPGA and a DRAM.

Easy way: Get a DSLogic when they become available.

If you want one now, go get a usb-fpga board from ztex.de

Use the DRAM as a very deep fifo. (ring buffer, you’ll have to implement the controller for it in the FPGA).

No USB 2.0-fifo chip exists with sufficient buffer to actually maintain continuous transfer under anything but a real-time OS set up to never miss polling the USB bus.

Even linux will drop the ball by default, but it’s still many orders of magnitude better than windows:

Linux is like a guy who is off sick for a day about once or twice a year, Windows is like a guy who is just awol randomly for about 6 months at a time without warning. The situation is as if management doesn’t notice, because the windows guy still gets some work done over each year, and what he gets done is all that’s expected of anyone. Replace *year* in this example with “~8 ms interval” and it’s the situation with USB 2.0 HiSpeed in a nutshell. The practical upshot is that you need at least 8MB or so of buffer at an absolute minimum, if you’re running linux.

Implementing about 2 seconds of buffer will get you to 240 Mbps sustained rates, rock solid until your disk is full. (If you’re saving it – I tested this until an 11 TB RAID array was full – used XFS. Took about 101 Hrs I think.) You will actually manage a little more – maybe 256 or so, but it won’t be steady. You need the excess BW so that the buffer will empty out between OS glitches.
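Those figures hang together if you run the numbers. This is a minimal Python sketch of the check. It assumes 11 TB means 11 × 10^12 bytes and takes the "about 101 Hrs" at face value. The function names are illustrative.

```python
# Sanity-check the sustained rate and the buffer size quoted above.

def average_mbps(total_bytes: int, hours: float) -> float:
    """Average rate in megabits/sec for total_bytes moved in hours."""
    return total_bytes * 8 / (hours * 3600) / 1_000_000


def buffer_bytes(rate_mbps: int, seconds: int) -> int:
    """Bytes of buffer needed to hold `seconds` of data at rate_mbps."""
    return rate_mbps * 1_000_000 // 8 * seconds


if __name__ == "__main__":
    print("average rate:", round(average_mbps(11 * 10**12, 101)), "Mbps")
    print("2 s of buffer at 240 Mbps:", buffer_bytes(240, 2), "bytes")
```

Filling 11 TB in roughly 101 hours works out to a little over 240 Mbps, and two seconds of buffer at that rate is about 60 MB, far more than any FIFO built into a USB interface chip.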

Oh, you will need to be using an intel root hub – there might be another root hub that can keep up, but most that I’ve tested just don’t. ( If you’re curious, a ras-pi manages about 140 Mbps, but only into memory…). I haven’t tested USB 3.0 root hubs yet….

Missing the multiple-MB buffer, you will be able to sustain maybe 20 MB/s for a couple seconds at a time, if you’re lucky…

But seriously, if you’re even slightly interested in pushing or pulling data over USB 2.0 to your project, go support the DSLogic kickstarter.

Keep in mind you really only need one twisted pair to push data between FPGAs at much higher rates, and there are two pairs on an expansion header on the DSLogic.

If you’re looking for an FPGA development board specifically to do high speed transfers like this, then the ztex boards are your friend. If you don’t need quite so much speed, but still want an FPGA, then the xess XuLA boards are my pick.

Good Luck!

I just tested the send speed (on a Linux Ubuntu laptop) over USB 3.0, and got over 1.2MiB/sec! Nice! I intend to use this to generate true random data at reasonably high speed.

I once did 5 megabits/s on a PIC16F1938 overclocked at 64 MHz. I could transfer floppy disk MFM timing data at about 250KByte/s without problems. I used a PL-2303 serial to USB chip :) The PL-2303 can reach 12Mbit according to the datasheet but the driver is limited to 6Mbit unfortunately. I’m interested in how the PIC32MZ high speed usb performs.