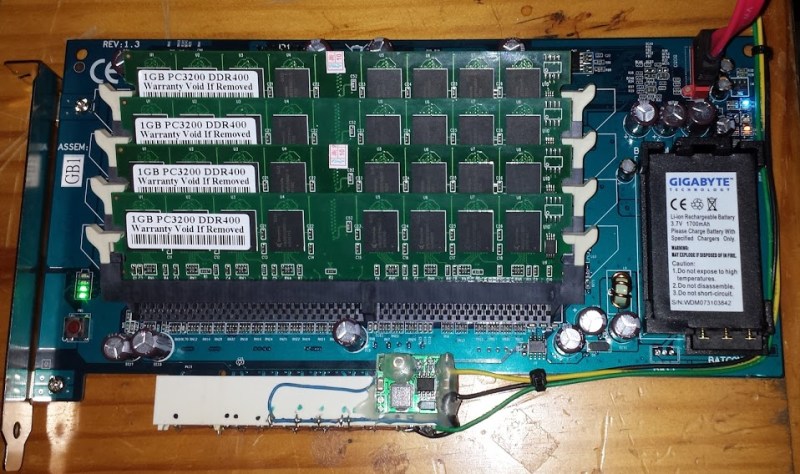

[Gnif] had a recent hard drive failure in his home server. When rebuilding his RAID array, he decided to switch to the ZFS file system. While researching ZFS, [Gnif] learned that the file system can use a small USB cache disk to greatly improve disk performance. Since USB is rather slow, [Gnif] had the idea of using an old i-RAM PCI card instead.

The problem was that he didn’t have any free PCI slots left in his home server. It didn’t take long for [Gnif] to realize that the PCI card was only using the PCI slot for power. All of the data transfer is actually done via a SATA cable. [Gnif] decided that he could likely get by without an actual PCI slot with just a bit of hacking.

[Gnif] desoldered a PCI socket from an old faulty motherboard, losing half of the pins in the process. Luckily, the pins he needed still remained. [Gnif] knew that DDR memory can be very power-hungry, so he couldn’t just solder one wire each to the 3.3V, 5V, 12V, and ground pins. He had to connect all of them in order to share the current load. All in all, this came to about 20 pins. He later measured the current draw and found it reached as high as 1.2 amps, confirming his earlier decision. Finally, the reset pin needed to be pulled up to 3.3V to make the disk accessible.

All of the wires from his adapter run to Molex connectors, which lets [Gnif] power the device from a computer power supply. All of the connections were covered in hot glue to keep them from wriggling loose.

Why oh why can’t someone come out with a device that fits in a half-height 5.25″ drive bay, lets you stuff it with a dozen old DDR2 sticks, and hooks up via SATA? The things are cheap as hell right now, and everyone has some lying around. Every time one of these comes around, it’s expensive, has extremely limited slots, and vanishes within two months.

Does it have supercapacitor backup with optional solar recharge? If so, sign me up for a TB.

Wouldn’t it be easier to just use the memory on the motherboard?

This.

No, seriously: file system drivers and operating systems are *clever*. When possible, they cache things in RAM, transparently, while dynamically giving the cache space back when the RAM is needed for application memory.

DDR3 is dirt cheap, so just don’t go for less than 16GB of it; your operating system will make the most of it.

While we’re at it: there’s a reason we don’t see huge DDR-based cache devices on the market, and it’s probability. Even with an extremely low probability of error per bit per hour, several gigabytes of storage running continuously will, at some point, show bit errors. This is not a problem if you add enough redundancy: you can do that in a controller by adding more “cheap” RAM, which is slower, or you can do it on-module with expensive ECC RAM; that’s server-style hardware.
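The probability argument can be made concrete with a little arithmetic. The Python sketch below assumes bit errors are independent and occur at a constant per-bit, per-hour rate; the rate used is a hypothetical placeholder, not a measured DRAM figure. The expected number of errors grows linearly with both capacity and running time, so even a tiny rate eventually makes at least one error likely.

```python
import math


def prob_at_least_one_error(capacity_bytes: float,
                            error_rate_per_bit_hour: float,
                            hours: float) -> float:
    """Probability of at least one bit error, assuming independent errors.

    The error count is modelled as Poisson with mean bits * rate * hours,
    so P(at least one) = 1 - exp(-mean).
    """
    expected_errors = capacity_bytes * 8 * error_rate_per_bit_hour * hours
    # -expm1(-x) equals 1 - exp(-x) but stays accurate for very small x.
    return -math.expm1(-expected_errors)


# HYPOTHETICAL error rate, chosen only to illustrate the scaling.
ASSUMED_RATE = 1e-15  # errors per bit per hour (not a measured value)

for hours in (1, 24, 24 * 365):
    p = prob_at_least_one_error(4e9, ASSUMED_RATE, hours)
    print(f"4 GB for {hours} h: P(at least one error) = {p:.4%}")
```

Doubling either the capacity or the running time doubles the expected error count, which is why always-on multi-gigabyte storage needs ECC or some other form of redundancy.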

The same goes for hard drives, by the way; in the age of 5ct/GB hard drives, using them with parity/redundancy seems a no-brainer; yet, no one does this…
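The parity idea is simple enough to show directly. This Python sketch illustrates single XOR parity, the principle behind RAID 5-style redundancy: one extra block lets you rebuild any one lost data block. Real arrays add stripe layouts, checksums, and rotating parity that this toy ignores, and the function names are illustrative only.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equally sized blocks."""
    if len({len(b) for b in blocks}) != 1:
        raise ValueError("blocks must be non-empty and equally sized")
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_parity(data_blocks: list[bytes]) -> bytes:
    # The parity block is the XOR of every data block.
    return xor_blocks(data_blocks)


def rebuild_missing(surviving_blocks: list[bytes], parity: bytes) -> bytes:
    # XOR-ing the parity with all surviving blocks cancels them out,
    # leaving exactly the missing block.
    return xor_blocks(surviving_blocks + [parity])


disks = [b"ABCD", b"EFGH", b"IJKL"]
parity = make_parity(disks)
lost = disks.pop(1)  # simulate losing the middle disk
assert rebuild_missing(disks, parity) == lost
```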

Read up on the ZFS ZIL: it is used in case of a power failure, when your volatile DDR cache is lost. For the ZIL, IOPS matter far more than total transfer rate, and if it is fast enough you can set the ZFS pool to always use sync writes, guaranteeing you never lose much data in a power failure; the fast ZIL storage prevents the performance loss that forcing sync writes would otherwise cause. Read up on it before you throw this idea out.

Yes, but in case of a power failure, your DDR-based buffer will still be wiped, so this has no advantage in practice.

If you look in the bottom left of the picture you can see the battery backup for the DRAM.

Of course, it’s anyone’s guess how long that will last, especially as it ages, but it should cover a short outage.

Ever heard of the RamSan?

Assuming a 64-bit operating system, sure, your OS will make the most of it. With a 32-bit OS, however… you’re just wasting 12-ish gigs of RAM…

This depends on your system board. I have an SE7501WV motherboard with two 32-bit Xeon CPUs and 12 GB of RAM. All of it is accessible via PAE and usable by Linux. The limiting factor is how much any single process can allocate; the disk cache is free to use as much or as little as necessary.
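The numbers in this exchange come from address widths. A 32-bit physical address reaches 2^32 bytes (4 GiB), so on a 16 GB machine roughly 12 GB is out of reach; PAE widens physical addresses (to 36 bits on Xeons of that era), while each process still has a 32-bit virtual address space. The Python sketch below just does that arithmetic and, as a simplification, ignores the memory-mapped I/O hole that makes the real usable figure somewhat lower.

```python
GIB = 2**30


def addressable_gib(address_bits: int) -> float:
    """Physical memory reachable with the given address width, in GiB."""
    return 2**address_bits / GIB


def unreachable_gib(installed_gib: float, address_bits: int) -> float:
    """Installed RAM beyond the address limit (ignores MMIO holes)."""
    return max(0.0, installed_gib - addressable_gib(address_bits))


print(addressable_gib(32))      # 4.0  (plain 32-bit)
print(addressable_gib(36))      # 64.0 (36-bit PAE)
print(unreachable_gib(16, 32))  # 12.0 -> the "12-ish gigs" above
print(unreachable_gib(12, 36))  # 0.0  -> the 12 GB PAE system
```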

There was; it was called the Gigabyte i-RAM Box.

…like the ACard ANS-9010 from 2009.

Addressing, refreshing, and powering RAM isn’t as easy as you seem to think. And it’s not worth the effort to develop something when DDR2 is dead and actually not that cheap at all. A real SSD is much cheaper per GB than your whole setup.

My whole setup was extremely cheap: I already had the RAM, and the board was US$60. This gives me a device with a near-infinite life expectancy that can perform in excess of 20,000 IOPS. I challenge you to find a $60 SSD that can offer that kind of performance for years on end.

Why? If you have the RAM density, you can just ramdisk the crap you want on it…

I’ve run all kinds of shit from a ramdisk, and I can tell you right now that your idea is about as useless as tits on a boar pig when up against an SSD.

There’s little point in making a RAM disk with a modern OS. If you have the RAM, the OS will automatically cache your disk accesses, with similar performance as a RAM disk, but with automatic backup to a real disk.

Read up on ZFS, you clearly do not understand the reason why you would want a cache like this. If used as a ZIL drive for the array it will prevent data loss if there is a power outage when data is cached in RAM but has not yet been written to disk. This can be done because we can tell ZFS to enforce synchronous file operations, which normally would cause a major slowdown, but if ZFS has a reliable place to write the data that is very fast (ZIL), it will perform like an asynchronous file system.
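The mechanism described here can be illustrated with a toy model. The Python class below is a hypothetical sketch, not ZFS code: a sync write is acknowledged once it is appended to a fast durable log (such as a battery-backed i-RAM), the data is later written to the slow pool in bulk, and after a power failure the log is replayed so no acknowledged write is lost.

```python
class ToyIntentLogPool:
    """Toy sync-write path with a separate intent log (illustrative only)."""

    def __init__(self):
        self.pool = {}     # slow, durable main storage (the HDD array)
        self.log = []      # fast, durable intent log
        self.pending = {}  # dirty data held in volatile RAM

    def sync_write(self, key, value):
        self.log.append((key, value))  # make the write durable first...
        self.pending[key] = value      # ...then buffer it for the bulk write
        return "ack"                   # caller can continue immediately

    def flush(self):
        """Periodic bulk write to the pool; the log entries are then obsolete."""
        self.pool.update(self.pending)
        self.pending.clear()
        self.log.clear()

    def power_failure(self):
        self.pending.clear()  # RAM contents vanish; pool and log survive

    def replay(self):
        """On restart, re-apply every logged write that never reached the pool."""
        for key, value in self.log:
            self.pool[key] = value
        self.log.clear()

    def read(self, key):
        return self.pending.get(key, self.pool.get(key))


pool = ToyIntentLogPool()
pool.sync_write("a", 1)
pool.flush()             # "a" reaches the main pool
pool.sync_write("b", 2)  # acknowledged, but only in the log and RAM
pool.power_failure()     # RAM contents are lost
pool.replay()            # the log restores "b"
assert pool.read("a") == 1 and pool.read("b") == 2
```

Without the log, "b" would have been acknowledged and then silently lost; with a slow log device, every sync write would instead wait on the HDDs.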

ZFS still keeps files in RAM, just like any other file system on any modern operating system. If you want to keep more in fast cache, an SSD array is often used as an L2ARC, allowing hundreds of GB of data to sit in fast cache backed by a slower multi-TB array, which provides extreme performance at a reduced price.
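The tiering idea can be sketched as a read cache with two levels in front of a slow pool. This Python sketch uses plain LRU at both levels for simplicity; ZFS’s ARC uses a more elaborate adaptive replacement policy, so treat the class and its parameters as a hypothetical illustration of RAM spilling into a larger SSD tier, not of ZFS’s actual algorithm.

```python
from collections import OrderedDict


class TwoTierCache:
    """Simplified RAM + SSD read cache in front of a slow pool (plain LRU)."""

    def __init__(self, backing, ram_slots, ssd_slots):
        self.backing = backing  # dict standing in for the HDD pool
        self.ram = OrderedDict()
        self.ssd = OrderedDict()
        self.ram_slots = ram_slots
        self.ssd_slots = ssd_slots
        self.hits = {"ram": 0, "ssd": 0, "disk": 0}

    def _put_ram(self, key, value):
        self.ram[key] = value
        self.ram.move_to_end(key)
        if len(self.ram) > self.ram_slots:
            old_key, old_value = self.ram.popitem(last=False)
            # Entries evicted from RAM spill into the larger SSD tier.
            self.ssd[old_key] = old_value
            self.ssd.move_to_end(old_key)
            if len(self.ssd) > self.ssd_slots:
                self.ssd.popitem(last=False)  # oldest SSD entry is dropped

    def read(self, key):
        if key in self.ram:
            self.hits["ram"] += 1
            self.ram.move_to_end(key)
            return self.ram[key]
        if key in self.ssd:
            self.hits["ssd"] += 1
            value = self.ssd.pop(key)  # promote back to RAM
        else:
            self.hits["disk"] += 1
            value = self.backing[key]
        self._put_ram(key, value)
        return value


pool = {i: f"block{i}" for i in range(10)}
cache = TwoTierCache(pool, ram_slots=2, ssd_slots=4)
for key in (0, 1, 2, 3, 0, 0):
    cache.read(key)
print(cache.hits)  # {'ram': 1, 'ssd': 1, 'disk': 4}
```

The second read of block 0 comes from the SSD tier rather than the disks, which is the whole point of an L2ARC: a working set larger than RAM still avoids the slow array.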

We weren’t talking about ZFS, but about a RAM disk.

Creating a ramdisk can be useful for files you never want to put on actual disks, for example temporary files when compiling, database query logs, and so on. The number of IOPS a ramdisk can handle is enormous, whereas the OS cache will typically write those files to disk every so often, using up IOPS and wearing out SSDs.

BTW: tmpfs on Linux is implemented using this caching facility, but without a backing store, so the cache can never be flushed to disk.
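The difference between a disk-backed cache and tmpfs can be shown by counting writes that reach the device. In this hypothetical Python model, a write-back cache coalesces repeated writes to the same block within a flush interval but still writes every dirty block out at each flush, while a cache with no backing store (tmpfs-like) never writes to a device. Swapping, which tmpfs can do under memory pressure, is not modelled.

```python
class ToyWriteBackCache:
    """Counts how many block writes reach the backing device."""

    def __init__(self, has_backing_store: bool = True):
        self.has_backing_store = has_backing_store
        self.dirty: set[int] = set()
        self.device_writes = 0

    def write(self, block: int) -> None:
        # A write only marks the block dirty, so repeated writes coalesce.
        self.dirty.add(block)

    def flush(self) -> None:
        if not self.has_backing_store:
            return  # tmpfs-like: nowhere to write, data simply stays in RAM
        self.device_writes += len(self.dirty)
        self.dirty.clear()


def simulate(cache: ToyWriteBackCache, intervals: int = 100,
             blocks_per_interval: int = 50) -> int:
    """Temp-file style workload: the same blocks rewritten every interval."""
    for _ in range(intervals):
        for block in range(blocks_per_interval):
            cache.write(block)
            cache.write(block)  # rewritten before the flush: coalesced
        cache.flush()           # periodic writeback
    return cache.device_writes


print(simulate(ToyWriteBackCache(has_backing_store=True)))   # 5000
print(simulate(ToyWriteBackCache(has_backing_store=False)))  # 0
```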

Hyper OS makes a RAM drive like that.

If you were using 2 GB chips (or larger), could you set up RAID across them (with software support, I presume)?

The i-RAM only supported 1 GB memory sticks, so it maxed out at 4 GB. They talked about making a newer version, but by that point SSDs had taken off and it wasn’t worthwhile. You’d need another entire i-RAM card with 4 GB worth of RAM to do RAID-0.
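RAID-0 across two such cards would simply stripe blocks between them, giving 8 GB from two 4 GB cards with no redundancy. This Python sketch shows the usual striping address calculation; the stripe size and function name are assumptions for illustration, and a real software RAID layer adds metadata and alignment details not shown here.

```python
def raid0_map(logical_block: int, num_devices: int,
              stripe_blocks: int) -> tuple[int, int]:
    """Map a logical block to (device index, block offset on that device)."""
    stripe_index, offset_in_stripe = divmod(logical_block, stripe_blocks)
    device = stripe_index % num_devices  # stripes rotate across devices
    row = stripe_index // num_devices    # full rows of stripes before this one
    return device, row * stripe_blocks + offset_in_stripe


# Two hypothetical cards with an assumed 4-block stripe:
for lb in (0, 3, 4, 8, 9):
    print(lb, raid0_map(lb, num_devices=2, stripe_blocks=4))
```

Consecutive stripes alternate between cards, so large sequential transfers keep both SATA links busy at once.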

It’s also slower than a modern SSD, since it’s limited by SATA 1.

Slower bulk throughput, yes, but if the drive is used as a ZIL drive, it is more than fast enough, as IOPS matter here, not bulk throughput. Since the ZIL is relied on when a power outage strikes before data in system RAM has been written to disk, it MUST be reliable, and SSD wear is a major concern due to the sheer number of writes performed on a ZIL drive.
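Why IOPS matter more than bandwidth here is easy to see with arithmetic. Taking the ~20,000 IOPS figure [Gnif] quotes and assuming 4 KiB sync writes (the real ZIL record size varies with the workload), the sketch below computes the resulting throughput and average time per operation; the helper names are illustrative.

```python
def throughput_mb_s(iops: float, io_size_bytes: int) -> float:
    """Data rate implied by an IOPS figure at a fixed I/O size, in MB/s."""
    return iops * io_size_bytes / 1e6


def time_per_op_us(iops: float) -> float:
    """Average time per operation at that rate, in microseconds."""
    return 1e6 / iops


print(throughput_mb_s(20_000, 4096))  # 81.92 MB/s, under SATA 1's ~150 MB/s
print(time_per_op_us(20_000))         # 50.0 microseconds per sync write
```

Small sync writes never come close to saturating the link; what limits them is how quickly each one can be made durable.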

A note about the editor’s comment on ZFS using USB flash drives: that’s not really recommended. The ZFS L2ARC is recommended to be on a fast MLC SSD. You can also keep the write intent log on an SLC SSD mainly for its higher write durability.

Yeah, I was looking for this. Who on earth would cache a ZFS array on a USB drive??

Since I am new to ZFS, I initially used this as an L2ARC drive, but after doing some more research I found it is best suited as a ZIL drive. The insane IOPS this device can handle make it more than enough for the general small office server, and the ZIL drive barely needs more than a few hundred MB of space. I have heard of people using a USB stick for the ZIL rather than the L2ARC, which makes more sense, but I still would not trust a USB stick with data that is meant to be used in case of a failure.

Because I have a ZIL device, I have told ZFS to treat every file operation as synchronous. ZFS returns almost instantly because it can write the data to the ZIL and then write it to disk asynchronously, allowing high performance even with synchronous file operations enforced.

I remember wanting the i-RAM when it first came out, but I didn’t have the money back then. By the time they were getting cheap, SATA2 had already taken off and a decent hard drive could go faster than the DDR-based drive could. SSDs came out as well, and now a decent $100 256 GB SSD can nearly saturate SATA 6G, so unless someone comes up with a DDR5-based PCI drive card that costs less than a comparable SSD, DRAM-based drives have probably been left behind in the dust.

If an SSD is faster than DDR, why the heck would we have system RAM at all? I think you need to learn how fast system RAM is before you jump to conclusions like this. DDR2-800 is capable of 12.8 GB/s; the fastest SSD on the market at present, the Samsung 850 Pro, is capable of 0.5 GB/s.

Correction: 12.8 GB/s for dual channel, 6.4 GB/s for single channel.
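Both figures follow from the standard peak-bandwidth formula: transfers per second times bus width in bytes times number of channels. DDR2-800 performs 800 million transfers per second on a 64-bit (8-byte) bus. The sketch ignores real-world efficiency losses, so these are theoretical peaks.

```python
def ddr_peak_gb_s(mega_transfers_per_s: float, bus_width_bits: int = 64,
                  channels: int = 1) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (10**9 bytes per second)."""
    bytes_per_transfer = bus_width_bits // 8
    return mega_transfers_per_s * 1e6 * bytes_per_transfer * channels / 1e9


print(ddr_peak_gb_s(800))              # 6.4  (single channel)
print(ddr_peak_gb_s(800, channels=2))  # 12.8 (dual channel)
```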

I think perhaps it is you who needs to learn how fast modern SSDs are. The card used in this build (Gigabyte i-RAM) benchmarks at about 25,000 IOPS (4K), and its sequential write speed is limited by the legacy SATA connection to about 1.5 Gb/s. Even a low-end SSD blows this out of the water; for example, the Samsung 840 EVO (<$100 for the 120 GB version) benchmarks at about 75,000 IOPS (4K) and nearly 4 Gb/s sequential write speed.
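SATA speeds are quoted as line rates in gigabits per second, and SATA revisions 1 through 3 use 8b/10b encoding, so only 8 of every 10 bits on the wire carry data. The sketch below converts line rate to payload bandwidth before protocol overhead, which is why SATA 1’s 1.5 Gb/s works out to about 150 MB/s.

```python
def sata_payload_mb_s(line_rate_gbit_s: float) -> float:
    """Payload bandwidth in MB/s for a SATA link using 8b/10b encoding.

    Protocol overhead (framing, FIS headers) is ignored, so real drives
    land somewhat below these figures.
    """
    data_bits_per_s = line_rate_gbit_s * 1e9 * 8 / 10  # strip 8b/10b overhead
    return data_bits_per_s / 8 / 1e6                    # bits -> bytes -> MB


for rev, rate in (("SATA 1", 1.5), ("SATA 2", 3.0), ("SATA 3", 6.0)):
    print(rev, sata_payload_mb_s(rate))
```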

Sure, RAM is fast, but you need a fast connection to your processor to take advantage of it. Once you put a 10-year-old ASIC between the RAM and your processor, you may as well be communicating with smoke signals. There is a reason hardware RAID cards put a piece of battery-backed RAM directly on the card for the cache.

I know well how fast they are; this was a response to the guy who claimed that SSDs are faster than system RAM, not a comparison of the i-RAM to SSDs.

It is very obvious that the SATA link is a bottleneck here, but that doesn’t mean this solution wasn’t beneficial for a mechanical HDD setup. All that matters here is that the i-RAM is faster than the three mechanical disks in a RAID-Z array.

Yeah, the ACard’s ANS-9010 was a beast. I always thought it was a shame they never updated it to SATA 2 and DDR3.

And way back in the day, I used to have a megaarray that ran 133 MHz RAM. I had 32 × 128 MB in it; at the time it was a super-fast RAM disk, and I bloody loved it.

Long live Amiga RAMdisk

“This meant that he couldn’t only solder one wire for each of the 3v, 5v, 12v, and ground pins. He had to connect all of them in order to share the current load. All in all, this ended up being about 20 pins… He later tested the current draw and found it reached as high as 1.2 amps, confirming his earlier decision.”

IIRC, the max current draw per PCI pin is about 1 A. What isn’t stated is which supply rail that 1.2 A is drawn from, or whether that’s a combined figure, but chances are the majority of the power is drawn from a single rail. Hooking up all 20 power pins was a foolproof way to ensure it had enough power when the requirements weren’t known in advance. But for someone looking to replicate this build, it should be possible to make it work with only 6, maybe 5 if the 12V rail isn’t actually used.
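The estimate above can be checked with a quick calculation. This Python sketch takes the per-pin limit as a parameter (the ~1 A figure is the commenter’s recollection, not a checked spec value), assumes each supply pin and each ground pin carries at most that much, and requires at least one pin for every rail actually drawing current. The per-rail split in the example is hypothetical, since the article only reports a 1.2 A peak.

```python
import math


def minimum_pins(rail_currents_a: dict[str, float],
                 max_per_pin_a: float = 1.0) -> tuple[dict[str, int], int]:
    """Minimum supply pins per rail, plus ground pins, for the given draws.

    Ground pins must return the combined current of all rails.
    No safety margin is applied; lower max_per_pin_a to add one.
    """
    supply = {rail: math.ceil(current / max_per_pin_a)
              for rail, current in rail_currents_a.items() if current > 0}
    ground = math.ceil(sum(rail_currents_a.values()) / max_per_pin_a)
    return supply, ground


# Hypothetical split: all 1.2 A on the 5 V rail, with and without a 2x margin.
print(minimum_pins({"5V": 1.2}))
print(minimum_pins({"5V": 1.2}, max_per_pin_a=0.5))
```

Even with a generous margin, the count stays far below 20.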

This. The current capacity per wire/per pin is higher than you’d expect.

As part of the DDR4 specification, there are now NVDIMMs. These are RDIMMs (with ECC for bit errors) carrying DDR4 DRAM plus SLC NAND on the same DIMM, backed up by supercapacitors. During a power loss event, the DRAM automagically backs up to the NAND. Supermicro has motherboards that support this, and Intel supports it in its Haswell-E (Xeon) platform.

Just set up a RAMDisk in software, bingo bango, done. Problem has been identified and a solution has been standardized. If you’re going to go with a PCIe card, you’re better off using a PCIe SSD (which are available in 2.5″ now).

Can I just point out the elephant in the room? This is a hack, and its usefulness and efficiency are minimal for 99% of users. But it was useful and made sense to the original poster. While the defense of its utility is weak, we wouldn’t say that about other useless hacks. Case in point: an entire subdomain of this site is dedicated to getting computational jalopies onto the internet to load a useless page that says “we did it”. Lighten up, guys.