The folks at [Design I/O] have come up with a way for you to play the world’s tiniest violin by rubbing your fingers together and actually have it play a violin sound. For those who don’t know, when you want to express mock sympathy for someone’s complaints you can rub your thumb and index finger together and say “You hear that? It’s the world’s smallest violin and it’s playing just for you”, except that now they can actually hear the violin, while your gestures control the volume and playback.

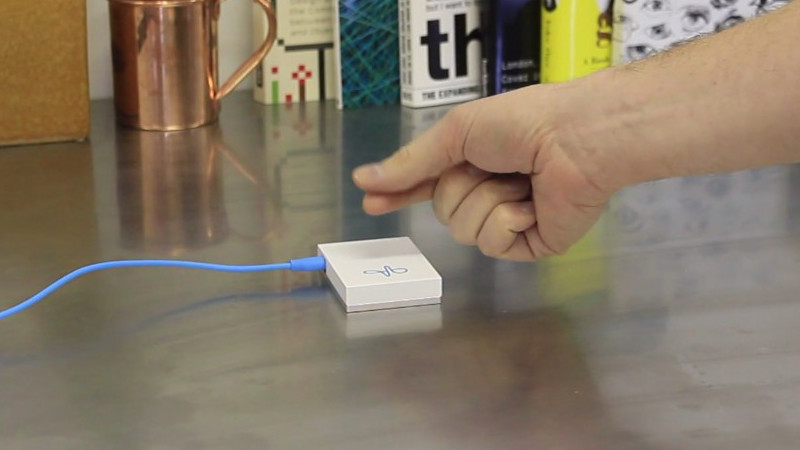

[Design I/O] combined a few technologies to accomplish this. The first is Google’s Project Soli, a tiny radar on a chip. Project Soli’s goal is to do away with physical controls by using a miniature radar for doing touchless gesture interactions. Sliding your thumb across the side of your outstretched index finger, for example, can be interpreted as moving a slider to change the numerical value of something, perhaps turning up the air conditioner in your car. Check out Google’s cool demo video of their radar and gestures below.

Project Soli’s radar is the input side for the other intriguing technology here: the Wekinator, free, open source machine learning software intended for artists and musicians. The examples on its website paint an exciting picture. You give Wekinator example inputs paired with the outputs you want, and then tell it to train its model.

The output side in this case is violin music. The input is whatever the radar detects. Wekinator does the heavy lifting for you: just give it input such as radar-monitored finger movements, and it’ll learn your chosen gestures and produce the output it was trained to associate with them.

[Design I/O] is likely doing more than just using Wekinator’s front end, as they’re also using openFrameworks, an open source C++ toolkit. Also interesting is Wekinator’s use of the Open Sound Control (OSC) protocol, which lets it receive its inputs and send its outputs over the network. You can see [Design I/O]’s end result demonstrated in the video below.
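To give a feel for what feeding Wekinator over OSC involves, here is a minimal sketch in Python using only the standard library. It is not [Design I/O]’s code. It assumes Wekinator is running with its usual defaults (listening on UDP port 6448 for messages addressed to `/wek/inputs`), and the two feature values are made-up stand-ins for whatever the radar would actually report.

```python
import socket
import struct
from typing import Sequence


def osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    padded_len = (len(data) // 4 + 1) * 4
    return data.ljust(padded_len, b"\x00")


def osc_message(address: str, values: Sequence[float]) -> bytes:
    """Build an OSC message carrying only 32-bit big-endian float arguments."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)
    return (
        osc_pad(address.encode("ascii"))
        + osc_pad(type_tags.encode("ascii"))
        + payload
    )


def send_features(values: Sequence[float],
                  host: str = "127.0.0.1",
                  port: int = 6448) -> int:
    """Send one feature vector to Wekinator; returns the packet size in bytes.

    Assumption: host, port, and the /wek/inputs address match Wekinator's
    default settings. Adjust them if your project is configured differently.
    """
    packet = osc_message("/wek/inputs", values)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
    return len(packet)


if __name__ == "__main__":
    # Hypothetical features, e.g. finger distance and rubbing speed.
    send_features([0.5, 1.0])
```

The number of floats sent has to match the number of inputs configured in the Wekinator project. Once trained, Wekinator sends its outputs back out over OSC in the same way, so the sound-producing side only needs to listen for them.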

And if you instead want an actual miniature instrument to play, then we suggest this mini electric ukulele which, while fitting in the palm of your hand, includes a microcontroller, speaker, and batteries in its miniature laser-cut plywood frame.

[via GIZMODO]

A great technological achievement. Really, this is high tech at its finest. And as an engineer I can see the beauty of it.

But… it somehow scares me if I look at where we are going regarding input devices.

Because in the past a button had one function and was always in the same place. If we saw a device for the first time we’d look at the button and we could figure out how to operate it… most of the time no manual needed.

Currently we are using “buttons” on a touchscreen (so buttons that could be anywhere and have any function… still getting used to it, because with every new version of the OS on my phone the buttons seem to have changed or moved, slightly but enough to be confusing at first).

And then in the future we will have “buttons” you cannot see, which could be anywhere in close proximity to the device being controlled (and perhaps later even further away). How can this be intuitive or be considered “better”?!?

I imagine myself as an old man with such a device trying to operate it for the first time… changing all sorts of settings except the settings I want to change. It is already difficult for old people to keep up with technology. How will they be able to keep up with things they cannot see?

As an engineer I embrace technological progress, but I sometimes long for the times when life was much simpler (though much harder in many ways). So I comfort myself with the thought that some things will never change. For example, the elevator never replaced the stairs, computers never replaced paper, and speech recognition still hasn’t replaced the keyboard.

I can envision some sort of feedback, even if it’s just an LED that turns on or something audible when you put your hand in position to begin the gesture. That would give you the chance to cancel it before beginning.

I can also see a lot of times you accidentally put your hand in place and then quickly move it away when the LED or audible indicator happens.

Cool tech though.

Also annoying are touchscreen / web app UIs that eliminate any vestige of meaningful symbology. “Menu” as a word has been replaced with the symbolic [three horizontal lines]. If I were picking up a device for the first time I’d have no idea that symbol was even clickable. Squares, circles, dots, triangles… what do they all mean?!

And yes, I love my buttons and knobs. Can manipulate them without looking once you know the device (thinking of test equipment in particular)

It’s just the evolution of UI. The only reason to argue about what was versus what is would be if you feared one of these devices slipping through a wormhole that sends it back to the 1920s. Everyone would ignore it. The current touchscreen direction is just part of that evolution. The next step will be a combination of AR with a simulated keyboard that is functional through either radar or Leap. We have projected keyboards already, but that’s something that won’t be adopted beyond the concept of a few proven interfaces. The creator of Fantastic Contraption has some amazing input on these types of issues.

Also, physical controls have the advantage that they can be touched. You can find and identify a control by touch alone, in the dark or when you cannot look at it (e.g. when driving, on a remote control, etc.). Until technology gets there, with recent ultrasonic technology or whatnot, I prefer actual “knobs” in some places.

I had a rental car with a touchscreen a few months ago. It was horrible. All controls (heat, radio, etc) on the touchscreen, with menus, making it unsafe, and nearly impossible given the location of the display, to even set the radio volume while driving, much less adjust the heat or turn on the defog. It was fun trying to pull over to the side so I could find defog, when I couldn’t see out the windows.

So this machine can detect when I touch myself?

And plays an excerpt from Bach’s violin concerto accordingly.

Lol!

I bet that someone could find an animated gif for that. ;)

There must be examples of detectable strokes somewhere.

Or, in case of failure, canned laughter.

lol

I have always been suspicious about Soli. I see all the researchers in the face-to-face interviews wearing wedding rings and other hand jewelry. Yet none of the hands in the operation demos have any. I’m guessing a ring would scatter microwaves enough to cause noise in the return. Has anyone here tried it? With rings on?

It would be much more interesting if the pitch depended on how close or far the fingers are from the sensor, and the speed depended on how slow or fast the fingers are “rubbed” together.

So, a theremin basically?

Next iteration: Interpret the rubbing as “kind of” playing-style and use a VSTi to play the music instead of some samples in HipHop-manner

Would have been nicer if it had played in tempo with the finger movements. Even if it was just two notes alternating for back and forth to match finger movement, or descending/ascending scales. Because as demoed, there’s little to show it’s successfully performing gesture recognition. Could just as easily be a 1D rangefinder, programmed to respond if it sees a repeated back and forth change in the distance.

Sounds like it could make an interesting key logger or MIDI instrument interface, or serve as part of a small-scale 3D scanner.

Rather than rubbing fingers together, I was thinking of it as something to replace an electronic pick-up on string instruments and the like.