Why would anyone bother to create new content for a console system that’s staring down its 40th birthday? Perhaps just for the challenge of fitting a game into 40 kilobytes of storage.

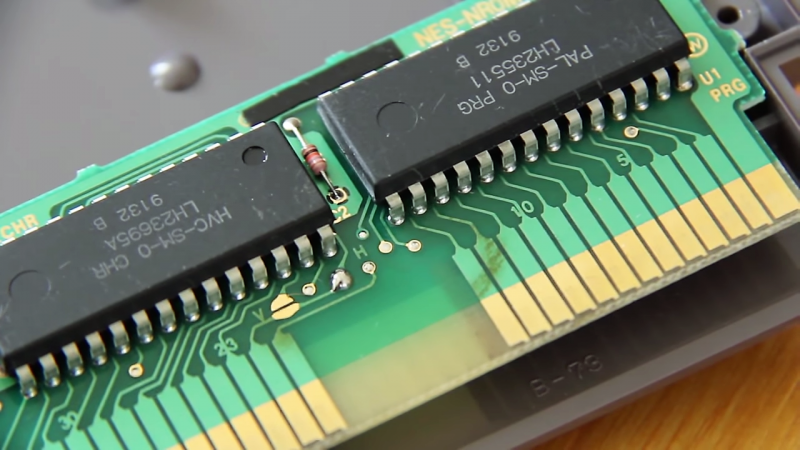

That at least seems to be the motivation behind [Morphcat Games]’ pending release of Micro Mages, a new game for the Nintendo Entertainment System console that takes its inspiration from Super Mario Bros. The interesting bit here is how they managed to stuff so much content into so little space. The video below goes into great detail on that, and it’s a fascinating lesson in optimization. The game logic itself is coded in assembler, which of course is far more efficient than higher-level languages. Even so, that took 32 kB of ROM, leaving a mere 8 kB for background elements and foreground sprites.

Through a combination of limited sprite size, tiling of smaller sprites to make larger characters, and reusing tiles by flipping them horizontally or vertically, an impressively complete palette of animated characters was developed. Background elements were similarly deconstructed and reused. The result is a palette of tiles, used to generate all of the game’s maps, that takes up just 60 bytes. Turning those into playable levels involves more mirroring and some horizontal shifting of tiles, and the result looks like quite an engaging playfield.
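Micro Mages itself is written in 6502 assembly, but the flipping trick is easy to show in C. The sketch below makes two assumptions. It uses the NES sprite attribute layout, where bit 6 of an OAM entry’s attribute byte flips the tile horizontally and bit 7 flips it vertically. It also assumes a symmetric 16×16 object. The `OamEntry` struct and `build_mirrored_metasprite` function are hypothetical names for illustration, not code from the game. One 8×8 tile (16 bytes of CHR data at 2 bits per pixel) ends up covering all four quadrants.

```c
#include <assert.h>
#include <stdint.h>

/* NES sprite attribute bits (byte 2 of each 4-byte OAM entry). */
#define OAM_FLIP_H 0x40u /* bit 6: mirror the tile left to right */
#define OAM_FLIP_V 0x80u /* bit 7: mirror the tile top to bottom */

/* One hardware sprite, in the byte order OAM uses. */
typedef struct {
    uint8_t y;    /* top edge (the PPU draws sprites one scanline lower) */
    uint8_t tile; /* index into the sprite pattern table */
    uint8_t attr; /* palette (bits 0-1), priority (bit 5), flips (bits 6-7) */
    uint8_t x;    /* left edge */
} OamEntry;

/* Hypothetical helper: describe a 16x16 object whose four quadrants all
   reuse one 8x8 tile, mirrored so the result is symmetric on both axes. */
void build_mirrored_metasprite(OamEntry out[4], uint8_t tile,
                               uint8_t palette, uint8_t x, uint8_t y)
{
    static const struct {
        uint8_t dx, dy, flips;
    } quad[4] = {
        {0, 0, 0},                       /* top-left: tile as stored */
        {8, 0, OAM_FLIP_H},              /* top-right: mirrored horizontally */
        {0, 8, OAM_FLIP_V},              /* bottom-left: mirrored vertically */
        {8, 8, OAM_FLIP_H | OAM_FLIP_V}, /* bottom-right: both */
    };

    assert(palette < 4); /* sprites have four palettes */
    for (int i = 0; i < 4; i++) {
        out[i].y = (uint8_t)(y + quad[i].dy);
        out[i].tile = tile;
        out[i].attr = (uint8_t)(palette | quad[i].flips);
        out[i].x = (uint8_t)(x + quad[i].dx);
    }
}
```

The PPU applies the flips at draw time, so only one quadrant’s pattern has to be stored. The other three entries differ only in their attribute bits and screen offsets.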

Yes, there’s a Kickstarter for the game, but we’re mainly intrigued by what it takes to cram a playable game into so little space. Don’t get us wrong – we love the RetroPie builds too, but seeing the tricks that early game developers relied upon to make things work really gets the creative juices flowing.

[Keith O] dug up this gem for us. Thanks!

“The game logic itself is coded in assembler, which of course is far more efficient than higher level languages.”

Hmm… a good compiler with the correct settings, plus a programmer who knows what he or she is doing, is very likely to output the same code these days. You could argue that it would take some extra effort, but coding in assembly takes effort too. Honestly, though, I sometimes prefer assembly just because it’s so much fun. It can be fun to write super-optimized code with all sorts of trickery just to shave one CPU cycle off a loop. But those pieces of code are exceptions.

Depends on what you are doing. In C/C++ there are a lot of “rules” the compiler has to enforce, even when your code doesn’t depend on them. In assembly, you know which details matter and which don’t.

Just a random example: what if you need 24-bit math on an 8-bit CPU? In C/C++ you have to do 32-bit math; in assembly you can actually do 24-bit math.

C doesn’t have a 24-bit wide primitive type, but then again *neither does assembly*. So there’s nothing stopping you performing 24-bit math in C the same way you did it in assembly, by combining operations on multiple smaller values.
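Here is a minimal sketch of that point. It assumes a 24-bit unsigned value kept as three bytes, least significant first; the `U24` type and `u24_add` helper are hypothetical. With that layout, the add-with-carry chain can be written directly in C:

```c
#include <stdint.h>

/* A 24-bit unsigned value stored as three bytes, least significant first,
   the same layout an 8-bit CPU would use in memory. */
typedef struct {
    uint8_t b[3];
} U24;

/* Hypothetical helper: add two 24-bit values byte by byte, passing the carry
   from each byte to the next as an ADC chain would.
   Returns the carry out of the top byte (0 or 1). */
unsigned u24_add(U24 *sum, const U24 *a, const U24 *b)
{
    unsigned carry = 0;
    for (int i = 0; i < 3; i++) {
        unsigned t = (unsigned)a->b[i] + b->b[i] + carry; /* at most 511 */
        sum->b[i] = (uint8_t)t;                            /* low 8 bits */
        carry = t >> 8;                                     /* 0 or 1 */
    }
    return carry;
}
```

Whether a particular 6502 C compiler turns this loop into a tight chain of `ADC` instructions is a separate question. That question is the crux of the disagreement in this thread.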

In Microchip C, a short long is 24 bits.

How do you access the carry flag in C? Add with carry (or subtract with borrow) are key to doing math on values larger than the native word size of the CPU.
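Standard C gives no access to the flags. However, the carry out of an unsigned addition can be recovered portably, because unsigned arithmetic wraps: if the result is smaller than an operand, the addition carried. The sketch below uses that idiom for a multi-word add on 32-bit words; `mp_add` is a hypothetical name. GCC and Clang also provide `__builtin_add_overflow`, which reports the same condition for unsigned types, but that builtin is compiler-specific.

```c
#include <stddef.h>
#include <stdint.h>

/* Add two n-word unsigned numbers (least significant word first) without
   reading the carry flag: uint32_t arithmetic wraps modulo 2^32, so a sum
   smaller than what was just added means a carry occurred.
   Returns the carry out of the top word (0 or 1). */
uint32_t mp_add(uint32_t *sum, const uint32_t *a, const uint32_t *b, size_t n)
{
    uint32_t carry = 0;
    for (size_t i = 0; i < n; i++) {
        uint32_t s = a[i] + carry;
        uint32_t c1 = s < carry; /* wrapped while adding the incoming carry */
        s += b[i];
        uint32_t c2 = s < b[i];  /* wrapped while adding b[i] */
        sum[i] = s;
        carry = c1 | c2;         /* at most one of the two can be set */
    }
    return carry;
}
```

Some optimizing compilers recognize this pattern and emit an add-with-carry instruction, but nothing in the language guarantees it.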

There are macros for such things.

Also, if you need to access the carry flag in C, then you are using the wrong concept and need to learn to design proper architecture/algorithms.

No, there are not. There’s no portable way to access the carry flag in C, and many (most?) compilers have no way to do so.

And your comment about “doing it wrong” is seriously insane. The carry bit is an architectural feature. There are tons of ways to use it, and not all are covered efficiently by the compiler.

Example: you have 2 arrays of 8 bytes, and you want to store which bytes are bigger in each into a bit array for later use. Most architectures set carry on a compare, and have a rotate which pulls in carry. So you should be able to just compare and rotate, but C won’t let you do that and compilers aren’t usually smart enough.

“The carry bit is an architectural feature.”

“no portable way to access the carry flag in C”

There is your contradiction.

That example depends heavily on the compiler and architecture in question. The C language has been ported to numerous computer architectures, many of which don’t have the 8/16/32/64-bit integer sizes commonly used nowadays. That’s why the standard doesn’t fix the exact bit widths of char/short/int/long, only their minimum ranges (at least 8, 16, 16 and 32 bits respectively). Likewise, float and double only have minimum precision and range requirements; the familiar 32 and 64 bits come from IEEE 754, which most platforms use.

What if the computer you’re programming for has 12-bit chars and 18-bit ints? The language itself supports that, though porting code to such a machine will be more difficult, natch.
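The sketch below reports how wide the integer types are on whatever implementation compiles it. The helper `value_bits` is hypothetical. It counts the bits in a type’s maximum value from `<limits.h>`, which leaves out any padding bits. On a machine with 12-bit chars, `CHAR_BIT` would be 12. The only portable expectations are the minimums the standard sets: 8 bits for char, 16 for short and int, and 32 for long.

```c
#include <limits.h>
#include <stdio.h>

/* Count the value bits in an unsigned maximum such as UINT_MAX
   (padding bits, if the implementation has any, are not counted). */
int value_bits(unsigned long long max)
{
    int bits = 0;
    while (max != 0) {
        bits++;
        max >>= 1;
    }
    return bits;
}

/* Print the widths this particular implementation uses. */
void print_int_widths(void)
{
    printf("char:  %d bits\n", CHAR_BIT);
    printf("short: %d bits\n", value_bits(USHRT_MAX));
    printf("int:   %d bits\n", value_bits(UINT_MAX));
    printf("long:  %d bits\n", value_bits(ULONG_MAX));
}
```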

In the case of the 6502, as used in the NES, I doubt that common C compilers use 32-bit math by default.

That may be true for modern CPUs, but the 6502 has pretty mediocre options for C. In fact, being memory-based rather than register-based, the architecture doesn’t seem very well suited to C. So unless someone makes a much more efficient C compiler for the 6502, it’s better to code in assembler.

You could use BCPL, which was available on the 6502-based BBC Micro.

Real geeks use assembly (or butterflies sometimes).

Or rollercoaster game programming.

Sure, ASM is fun and games, but try implementing a whole WiFi stack, TCP stack, a simple HTTP server, UART routines, and 16-bit TFT and touch libraries in Xtensa ASM first! Then debug it :-)

Squeezing 30 kB of ASM into these tiny cartridges is the way to go. Filling the 4 MB of an ESP’s flash with ASM is pretty much a lifetime task…

I feel like there is a story here… Details?

Exactly! I could not agree more.

In other words, use the right tool for the job. And if some very specific part needs to be done in assembly, the C compiler should let you do that within the C code itself. A special directive tells the compiler that the part is to be treated as assembly code.

I beg to differ. On embedded platforms like AVR and ARM, GCC is pretty bad. On anything other than trivial code, I can usually cut the code size down by 20-30%.

For x86 it’s a bit better, and it has only gotten close since LTO was introduced. I still don’t think you’ll see the day when a 64K demo written in C competes with hand-optimized asm.

For small embedded work, I generally prefer asm. For bigger programs (over 1k lines), I’ll use more C/C++, but if I had a high-level assembler that took care of register allocation, I’d use it for even larger programs.

Could you even program something like this outside of assembly, even with modern software development platforms?

They had to take so many shortcuts and “compress” almost everything to make it both fit and work. Not sure you could get this to work any other way.

It probably helps that the scale and scope of the entire project is digestible due to it being so small. Relatively speaking of course. Still took them several years though.

On x86 you are completely right. But this is 6502, do you know how good the optimizers are on this CPU?

I’ll give better examples then: in C, there’s no way to get the state of the zero and carry flags, so you can’t do tricks that the architecture supports, like comparing and then shifting the result of the compare into another register using the rotate instructions.

But register allocation is the bigger deal: compilers stick to rigid register calling conventions to allow for portability, and that’s where you can save a lot of time/space between the two languages.

Spot on. A lot of CPUs have an ‘alternate’ register set, which compilers reserve for interrupt handling and don’t use during normal program flow. But when writing assembler, I can use some of those alternate registers because I know my interrupt routines don’t use them.

I’ve recreated a couple of ZX Spectrum classics and learned a lot about optimizing the limited memory. The 16Kb (!) game Tranz-Am, for instance, plays on a map of 6192 by 3112 pixels, i.e. almost 600Kb. I’ve written a program that reduces that huge tilemap to an array that takes up less than 2048 bytes.
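That program isn’t shown, so this is only a sketch of one common first step in this kind of reduction. It assumes the map has already been cut into 8×8, 1-bit-per-pixel tiles (8 bytes each, as on the Spectrum). Each distinct tile is kept once, and the map is replaced with one index byte per cell. `dedupe_tiles` and `TILE_BYTES` are hypothetical names. Getting a map under 2 KB would likely need further steps, such as grouping tiles into larger blocks or run-length encoding, which are not shown.

```c
#include <stdint.h>
#include <string.h>

#define TILE_BYTES 8 /* one 8x8 tile at 1 bit per pixel */

/* Hypothetical helper: reduce a map of n raw tiles to a table of unique tiles
   plus one index byte per map cell. Returns the number of unique tiles, or -1
   if more than max_unique (or 256, since indices are bytes) distinct tiles appear. */
int dedupe_tiles(const uint8_t tiles[][TILE_BYTES], int n,
                 uint8_t unique[][TILE_BYTES], int max_unique,
                 uint8_t *index_map)
{
    int count = 0;
    for (int i = 0; i < n; i++) {
        int found = -1;
        /* Linear search keeps the sketch short; a hash table would scale better. */
        for (int u = 0; u < count; u++) {
            if (memcmp(tiles[i], unique[u], TILE_BYTES) == 0) {
                found = u;
                break;
            }
        }
        if (found < 0) {
            if (count == max_unique || count == 256)
                return -1;
            memcpy(unique[count], tiles[i], TILE_BYTES);
            found = count++;
        }
        index_map[i] = (uint8_t)found;
    }
    return count;
}
```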

That’s a nice reduction.

But the original Excitebike was less than 32k.

And Galaxian is 8k. This game likely has a lot more packed into it than those two, though.

Since the Kickstarter is finished, if you want to buy the game it is available on Indiegogo InDemand: https://www.indiegogo.com/projects/micro-mages-a-new-game-for-the-nes#/

6502 assembler is very well known, and there are plenty of people out there who simply breathe it, what with the C64 demo scene. For a NES game it is simply the best option. You could probably get away with C for higher-level game logic, of course.

256 4-colour 8×8 bitmaps take 4KB of memory; the big memory issue is level layouts. 60 bytes per game screen (as I interpret this article) is not too bad at all, considering the NES has 960 tiles to display. I think the game uses 2×2 tiles for map elements, but that’s still ~240 bytes per screen (I guess a HUD takes up some of that space). From the Indiegogo page I can see a screenshot that appears ‘mirrored’, so that halves the data again, and maybe there’s some form of compression in play too (RLE?). Or maybe there are ‘supertiles’ made up of these 2×2 blocks.
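The arithmetic in that comment checks out. 256 tiles × 64 pixels × 2 bits = 32,768 bits = 4 KB. The 32×30 = 960-tile nametable becomes a 16×15 grid of 2×2 metatiles, or 240 bytes at one byte each. The sketch below shows the mirroring idea. It assumes each row stores only its left 8 metatiles and that the right half is the same indices in reverse order, which would take a screen from 240 to 120 bytes. `expand_mirrored_row` is a hypothetical helper, not the game’s decoder. NES background tiles can’t be flipped by hardware, so a real decoder would also need to swap any asymmetric metatile for a pre-mirrored variant.

```c
#include <stdint.h>

#define ROW_METATILES 16                /* 256 px screen width / 16 px metatiles */
#define HALF_ROW (ROW_METATILES / 2)    /* metatiles actually stored per row */

/* Hypothetical decoder: rebuild a full row of metatile indices from its
   stored left half, writing the right half as a mirror image. */
void expand_mirrored_row(const uint8_t half[HALF_ROW], uint8_t row[ROW_METATILES])
{
    for (int i = 0; i < HALF_ROW; i++) {
        row[i] = half[i];                     /* left half as stored */
        row[ROW_METATILES - 1 - i] = half[i]; /* right half, mirrored */
    }
}
```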

Secondly, I’m still sick of videos. Whatever happened to readable articles with screenshots I can read in a couple of minutes?