NVIDIA kicked off their line of GPU-accelerated single board computers back in 2014 with the Jetson TK1, a $200 USD development system for those looking to get involved with the burgeoning world of so-called “edge computing”. It was designed to put high performance computing in a package small and energy efficient enough that it could be integrated directly into products, rather than connecting to a data center halfway across the world.

The TK1 was an impressive piece of hardware, but not something the hacker and maker community was necessarily interested in. For one thing, it was fairly expensive. But perhaps more importantly, it was clearly geared more towards industry types than consumers. We did see the occasional project using the TK1 and the subsequent TX1 and TX2 boards, but they were few and far between.

Then came the Jetson Nano. Its 128-core Maxwell GPU still packed plenty of power and was fully compatible with NVIDIA’s CUDA architecture, but its smaller size and $99 price tag made it far more attractive for hobbyists. According to the company’s own figures, the number of active Jetson developers has more than tripled since the Nano’s introduction in March of 2019. With the platform accessible to a larger and more diverse group of users, new and innovative applications for machine learning started pouring in.

Cutting the price of the entry level Jetson hardware in half was clearly a step in the right direction, but NVIDIA wanted to bring even more developers into the fray. So why not see if lightning can strike twice? Today they’ve officially announced that the new Jetson Nano 2GB will go on sale later this month for just $59. Let’s take a close look at this new iteration of the Nano to see what’s changed (and what hasn’t) from last year’s model.

Trimming the Fat

To be clear, the new Jetson Nano 2GB is not a new device; it’s essentially just a cost-optimized version of the hardware that was released back in 2019. It’s still the same size, draws the same amount of power, and has the exact same Maxwell GPU. In broad terms, it’s a drop-in replacement for the more expensive Nano. In fact, it’s so similar that you might not even be able to tell the two models apart at first, especially since the biggest change isn’t visible: as the name implies, the new model has only two gigabytes of RAM compared to four in the original Nano.

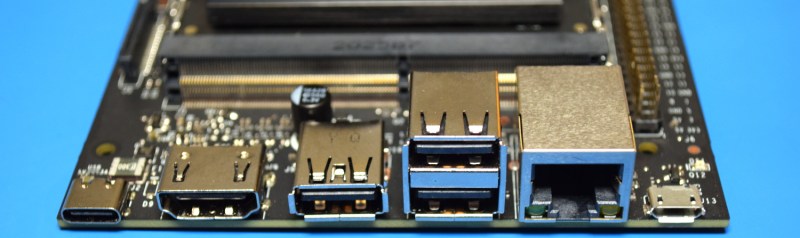

The board has lost a few ports as part of the effort to get it down to half the original price, however. The Nano 2GB drops the DisplayPort for HDMI (the previous version had both), deletes the second CSI camera connector, does away with the M.2 slot, and reduces the number of USB ports from four to three. Losing a USB port probably isn’t a deal breaker for most applications, but if you need high-speed data, it’s worth noting that only one of them is USB 3.0. Overall, it seems clear that NVIDIA took a close look at the sort of devices that folks were connecting to their Nano and adjusted the type and number of ports accordingly.

Of course, the 40-pin header on the side remains unchanged, so the new board should remain pin-compatible with anything you’ve already built. The Gigabit Ethernet port is still there, but unfortunately wireless still didn’t make the cut this time around. So if you need WiFi for your project, count on one of those USB ports being permanently taken up with a dongle.

It’s not just slimmed down, but updated as well. The 2GB removes the old school DC barrel jack and replaces it with a USB-C port. On the original Nano you could run it off the micro USB port for most tasks, but a laptop-style power supply was recommended if you were going to be pushing the hardware. Now you can just use a 15 W USB-C power supply and be covered in all situations.

A Tight Squeeze

Since the hardware is nearly identical between the two versions of the Nano, there’s really no point running any new benchmarks on it. If your software worked on the $99 Nano, it will run just as well on the $59 one. Or at least, that’s the idea. In reality, having only half the available RAM might be a problem for some applications.

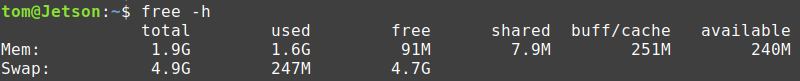

NVIDIA sent me a review unit, so as a simple test, I ran the detectnet.py script that makes up part of NVIDIA’s AI training course on live video from a Logitech C270 camera. While the Nano maintained a respectable 22 to 24 frames per second, the system ran out of RAM almost immediately and had to dip into swap to keep up. Naturally this is pretty problematic on an SD card, and certainly not something you’d want to do for any extended period of time unless you happen to own SanDisk stock.

To help combat this, NVIDIA recommends disabling the GUI on the Nano 2GB and running headless if you’re planning on doing any computationally intensive tasks. That should save you 200 to 300 MB of memory, but obviously isn’t going to work in all situations. It’s also a bit counter-intuitive considering the default Ubuntu 18.04 system image boots directly into a graphical environment. It’ll be interesting to see if some lightweight operating system choices are offered down the line to help address this issue.
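If you want to see how close a workload like detectnet.py is pushing the board to swap, or how much the headless setup actually frees up, the kernel already exposes the numbers in /proc/meminfo. The following is a minimal sketch, assuming a standard Linux /proc/meminfo; `parse_meminfo` and `memory_summary` are hypothetical helpers written for this example, not part of JetPack.

```python
"""Rough memory-headroom check for a Linux board such as the Jetson Nano 2GB.

A minimal sketch: it only parses the kernel's /proc/meminfo and is not an
NVIDIA/JetPack tool. parse_meminfo and memory_summary are hypothetical helpers.
"""
import os
from typing import Dict


def parse_meminfo(text: str) -> Dict[str, int]:
    """Return /proc/meminfo fields as integers (most values are in kB)."""
    fields = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        key, rest = line.split(":", 1)
        parts = rest.split()
        if parts:
            fields[key.strip()] = int(parts[0])
    return fields


def memory_summary(fields: Dict[str, int]) -> Dict[str, int]:
    """Summarize total RAM, available RAM and swap in use, in MiB."""
    swap_used_kb = fields.get("SwapTotal", 0) - fields.get("SwapFree", 0)
    return {
        "total_mib": fields["MemTotal"] // 1024,
        "available_mib": fields["MemAvailable"] // 1024,
        "swap_used_mib": swap_used_kb // 1024,
    }


if __name__ == "__main__" and os.path.exists("/proc/meminfo"):
    with open("/proc/meminfo") as f:
        summary = memory_summary(parse_meminfo(f.read()))
    print(summary)
    if summary["swap_used_mib"] > 0:
        print("Some pages are in swap; keep an eye on this while the workload runs.")
```

Running it once from the desktop and once after booting to a console gives a rough measure of the savings. On a systemd-based image like Ubuntu 18.04, `sudo systemctl set-default multi-user.target` is the usual way to boot without the GUI, though NVIDIA’s own instructions may describe a different method.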

Rise of the Machines

It’s probably not fair to call the Jetson Nano 2GB a direct competitor to the Raspberry Pi, but clearly NVIDIA wants to close the gap. While the lack of built-in WiFi and Bluetooth will likely give many makers pause, there’s no question that the Nano will run circles around the Pi 4 if you’re looking to experiment with things like computer vision. At $99 that might not have mattered for budget-conscious hardware hackers, but now that the Nano is essentially the same price as the mid-range Pi 4, it’s going to be a harder decision to make.

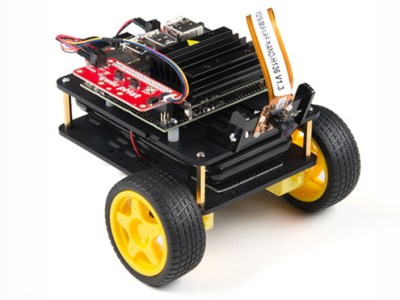

Especially since NVIDIA is using the release of the new board to help kick off the Jetson AI Certification Program. This free, self-paced course consists of tutorials and video walkthroughs that cover everything from the fundamentals of training to practical applications like collision avoidance and object following. To complete the Jetson AI Specialist course and earn the certification, applicants will have to submit an open source project to NVIDIA’s Community Projects forum for review and approval.

If you wanted to get your feet wet with AI and machine learning, picking up a Jetson Nano at $99 was a great choice. Now that there’s a $59 version that includes access to a training and certification program, there’s barely even a choice left to make. Which, in the end, is exactly what NVIDIA wants.

I admit, the initial price of the Jetson kept me from even considering the purchase of one.

Time will tell if one is in my future.

“According to the company’s own figures, the number of active Jetson developers has more than tripped since the Nano’s”

Trippin’ out, man!

B^)

Would really like to see a simple cluster board for the Jetson Nano sometime soon. I would love to have a Jetson Nano cluster running k8s, with all those compute cores readily accessible in my closet for any software project needs.

This would be something interesting to benchmark.

This was done with 64 Jetson Nanos a year ago.

See:

https://forums.developer.nvidia.com/t/the-tale-of-the-bee-saving-christmas-tree/109482

Broadcom sells PCIe switches that support host-to-host communication and sharing of peripherals. Given that the module has a four-lane PCIe Gen 2 port, it would be cool to see a bunch of these in a cluster, tied together locally with that fabric and talking to other cluster boards over 10GbE.
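A quick back-of-the-envelope check shows why that kind of local fabric is attractive next to 10GbE. The sketch below uses the standard PCIe Gen 2 figures (5 GT/s per lane with 8b/10b encoding) and the nominal 10 Gbit/s data rate of 10 Gigabit Ethernet. It ignores packet and protocol overhead, so real throughput is lower, and whether the module’s lanes can actually be routed to such a switch on a cluster board is an assumption, not something established here. `pcie_usable_gbps` is a hypothetical helper.

```python
"""Back-of-the-envelope link bandwidth comparison (illustrative only)."""


def pcie_usable_gbps(lanes: int, gt_per_s: float, payload_bits: int, line_bits: int) -> float:
    """Raw transfer rate times line-encoding efficiency, per direction, in Gbit/s.

    Ignores packet/protocol overhead (TLP headers, flow control, etc.),
    so achievable throughput is lower than this figure.
    """
    return lanes * gt_per_s * payload_bits / line_bits


# PCIe Gen 2: 5 GT/s per lane with 8b/10b encoding (8 payload bits per 10 line bits).
gen2_x4 = pcie_usable_gbps(lanes=4, gt_per_s=5.0, payload_bits=8, line_bits=10)
ten_gbe = 10.0  # nominal 10 Gigabit Ethernet data rate, Gbit/s

print("PCIe Gen 2 x4: {:.0f} Gbit/s ({:.0f} GB/s) per direction".format(gen2_x4, gen2_x4 / 8))
print("10GbE:         {:.0f} Gbit/s".format(ten_gbe))
```

On paper a Gen 2 x4 link carries about 16 Gbit/s in each direction, roughly 1.6 times a 10GbE port, which is why keeping traffic between neighbouring boards on PCIe and only going out over Ethernet between cluster boards makes sense.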

Docker on Tegra is not very mature. Wait until JetPack 4.5.

If only it weren’t such a closed mess :(

This is why I’ll never consider nvidia for anything, no matter how cheap they make it.

I absolutely refuse.

I mean, if you demand fully open source, you’re out of the current SBC market until RISC-V starts hitting shelves. Raspberry Pi, BeagleBone, UDOO, Pine64, Orange Pi: every SBC has closed-source portions.

There’s *quite a lot of room* between “fully open source” and “nightmare collection of undocumented binary blobs”, it’s not a binary choice. Nvidia is well and truly off the wrong end.

Nvidia has been notorious for not playing nicely with Linux. They are so paranoid that the source for their firmware and drivers will reveal something that could help competitors, or even give some smart software engineers a lead into hacking the cards to do what they weren’t sold to do. For instance, Nvidia sells professional and consumer graphics cards, but they use the same GPU die on a slightly different PCB. It comes down to RAM, really. It’s been shown that resistor-hacking the consumer cards and flashing the pro firmware to the BIOS can trick the drivers into believing that you have a Quadro, which then gives you unrestricted access to GPU VM passthrough and full multi-monitor support on cards that lock the monitor outputs to three. The limitation is in the RAM, since Quadro cards come loaded with it… but guess what? RAM chips aren’t that expensive compared to buying a pro card, and it’s a matter of soldering them onto the empty pads. It is difficult, though, if you don’t own a decent reflow setup, as they are BGA nowadays.

The point I’m getting at is that it could be a simple driver-based modification to enable professional features like multiple encoding streams and so on. Your consumer GPU can already do it, but they artificially locked you out in order to inflate the prices of their pro-line cards, which are the same damn cards aside from more RAM!

Literally every arm board is a closed mess. At least within NVidia you get updates and source is available for most stuff.

Still better than x86 with Intel’s ME. For fck sake it even has its own co-processor!

Horse pucky, with TI I get excellent documentation, and when something didn’t compile right in GCC it took less than 4 hours to get free forum support from one of their engineers who provided the corrected file!

And their drivers are BSD-style open source and work on any ARM board.

Perhaps the reason that you think ARM boards are a closed mess is only because you’ve been using nvidia?

Honestly out of the loop here: what’s closed nowadays on the Tegra line of parts? I remember the rage over Tegra2 years ago having everything under NDA and nVidia in a constant war with hackers over the bootloader on tablets.

Has that changed? What’s still a blob or precompiled library? Are docs public or under lock and key?

Are there any better options? RPi has improved since the early days, and scalar performance is now better than the Jetson Nano, but its GPGPU is still nowhere near anything nVidia sells and isn’t a platform that is scalable to mass production.

In principle, the Orange Pi can work without any blob. Even the boot ROM source of the ASIC is available (although I don’t know under which license, as it is in Chinese). In any case, a boot ROM is something you don’t compile yourself, as you can’t modify the mask ROM anyway.

There is a power management OpenRISC CPU with a binary blob, but the thing works without it, using just Linux power management, except for wakeup from standby. Making your own blob that makes this work should not be that difficult. But it seems most people don’t use standby mode.

Docs and downloads are sometimes behind a login wall. That’s positively obnoxious. I don’t think anything requires an NDA anymore. Most sources are available except for the drivers themselves.

If you complain enough on the Nvidia forum, they will open source stuff. The nice thing is that their developers are really responsive, so if you do have an issue and you submit feedback, they will tend to it.

It still uses a signed binary blob to boot. The device tree is a huge pain to deal with if you need to tweak any hardware settings.

Does the blob hold the dtb, or is it possible but tedious to change devicetree settings?

Is there a more open alternative for embedded that actually works without endless headaches and has real documentation? Nvidia is pretty good at providing documentation and learning resources. I’d say that more than offsets whatever closedness there is in CUDA. It’s bad enough having to deal with compatibility issues between TensorFlow versions without also having to spend time getting it to work with whatever GPU you have.

Pretty much everything except the drivers is open source, although some stuff that is open source is under a proprietary license.

The Nvidia website says this has ac WiFi, with an asterisk: “initially not available in all regions”?

They actually give you a USB dongle for the $59 price, which is a nice bonus but obviously not the same as having it internal since you’ll be giving up one of the already reduced number of USB ports. Trying to pass it off as the same as having integrated wireless seems a little disingenuous to me, frankly.

As for the other regions, we were told that NVIDIA can only distribute the WiFi adapter in Europe and North America, so other countries won’t get it until they can figure out the logistics.

Also, while swapping on an SD card is obviously not recommended, for $5 you can get a USB 3.x thumb drive that’s a lot faster (or USB 2, if you’ve used the USB 3.0 port for something that needs the speed more).
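Before moving swap to a thumb drive, it helps to check where swap currently lives. The sketch below only reads the standard Linux /proc/swaps table and changes nothing; the device-name prefixes it uses are heuristics (an assumption, since naming varies between systems), and `parse_swaps` and `classify` are hypothetical helpers. Actually setting up swap on the new drive is done with the usual util-linux tools, `mkswap` and `swapon`.

```python
"""List swap areas from /proc/swaps and guess what backs each one (sketch).

Assumptions: a Linux kernel exposing /proc/swaps; the device-name prefixes
below are heuristics, not a guarantee. parse_swaps/classify are hypothetical.
"""
import os
from typing import List, Tuple

# Common Linux block-device name prefixes (heuristic; naming varies by system).
KIND_BY_PREFIX = [
    ("/dev/zram", "compressed RAM (zram)"),
    ("/dev/mmcblk", "SD card or eMMC"),
    ("/dev/sd", "USB or SATA drive"),
    ("/dev/nvme", "NVMe drive"),
]


def parse_swaps(text: str) -> List[Tuple[str, str, int, int]]:
    """Return (filename, type, size_kib, used_kib) for each swap area."""
    entries = []
    for line in text.splitlines()[1:]:  # skip the column-header line
        parts = line.split()
        if len(parts) >= 4:
            entries.append((parts[0], parts[1], int(parts[2]), int(parts[3])))
    return entries


def classify(filename: str, swap_type: str) -> str:
    """Guess the backing storage for one swap area."""
    if swap_type == "file":
        return "swap file on whichever filesystem holds that path"
    for prefix, kind in KIND_BY_PREFIX:
        if filename.startswith(prefix):
            return kind
    return "other block device"


if __name__ == "__main__" and os.path.exists("/proc/swaps"):
    with open("/proc/swaps") as f:
        for name, swap_type, size_kib, used_kib in parse_swaps(f.read()):
            print("{}: {} ({} of {} MiB used)".format(
                name, classify(name, swap_type), used_kib // 1024, size_kib // 1024))
```

A swap file reported here still needs one more step to locate: check which mount point its path sits under to see whether it is on the SD card or the USB drive.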

Nvidia if you’re reading this keep doing what you’re doing. The machine vision community doesn’t need more script kiddies.

Please make a sample project in Visual Studio, though; no one likes Eclipse. A friend of mine got a project up and running in Visual Studio with VisualGDB, and it works quite nicely. Give people options. I don’t know a single dev who is stoked about Eclipse. Please don’t force it on people.

Nobody is forced to use Eclipse. Their samples are IDE agnostic.

Well, some people have to, but that’s just because they’re into BDSM.

While I personally don’t like it either, I know for a fact that a ton of people like Eclipse, and you shouldn’t shove it aside so easily based solely on “I don’t like it, so therefore nobody likes it.” Besides, it’s actually pretty close to the Visual Studio IDE if you’d just give it a chance.

Once again developers will have to be resourceful and frugal with memory… ah the good old days

If you turn off X, you get about the same free RAM as on the 4GB Nano. Also, you can still use graphical applications without X11; for example, various GStreamer sinks will still work.

Huh? I’m not great at math, but it doesn’t seem like there’s anything you could do to make 2 = 4. Without X you could probably get the system down to 200 MB used at idle, which gives you 1.8 GB free versus 3.8 GB.

Even if you left X enabled on the 4 GB, you still would have more free RAM than the new version starts with.

Of course NVIDIA wants to lock developers and engineers into the closed source CUDA ecosystem.

Of course. It’s their business model, but their business model also requires that CUDA be a good product.

Sounds like they’re putting their lack of ARM royalty payments and their soon to be incoming ARM royalty payments to good use. Or are at least betting their purchase won’t get blocked so they can subsidize this board from funds received from other vendors.

I wonder if it has hardware CAN bus support. They don’t advertise it, so it’s possible the answer is no. That’s too bad; CAN is incredibly useful in robotics applications, and SPI-to-CAN adapters have various problems. I used hardware CAN on the Jetson TX2 and it worked great.

And that makes it the 3rd NVIDIA product released in the last two weeks that I’m NOT interested in…

I pre-ordered mine! Can’t wait!

Are these Jetsons super fast for vision data processing? If I could get more than 100 fps for depth calculations, I could use it for a project I have in mind…

Jetsons have support for adding an Intel WiFi card, which is accessible if you remove the main accelerator from the board (just remove two screws and pull it out).

While it’s a bit of work and requires an additional part costing $15 or so, it doesn’t take up a USB slot.

Raspberry Pi is turning away from makers with stupid specs aimed at hybrid desktop usage; any truly maker-oriented board is welcome.

Most makers may complain about RPi and its shortcomings but in the end will not use anything else.

I’ve never even used it. If I needed HDMI out, I always used a BeagleBone, because it is based on a TI processor that I could buy separately and use myself, since it is fully documented and the libraries support GCC.

“The board has lost a few ports as part of the effort to get it down to half the original price…”

The marketing “make the price end in a 9 to confuse people” strikes again. :-)

If we call the $99 board $100 and the $59 board $60, it’s easier to see that the price has only been reduced by 40%, not 50%. (This isn’t just semantics or rounding errors; a 10-percentage-point difference is a lot, in my opinion.)
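The rounding in that comment barely changes the answer, which is easy to confirm with the unrounded prices. A minimal sketch; `percent_reduction` is a hypothetical helper, and the $49.50 line is only there to show what a true halving of the $99 price would have looked like.

```python
def percent_reduction(old: float, new: float) -> float:
    """Percentage drop going from the old price to the new one."""
    return (old - new) / old * 100


for old, new in [(99, 59), (100, 60), (99, 49.5)]:
    print("${} -> ${}: {:.1f}% cheaper".format(old, new, percent_reduction(old, new)))
```

With the real prices the cut works out to about 40.4%, so the $59 board is roughly 40% cheaper rather than half price.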

I guess NVIDIA is sitting on a giant pile of old Maxwell chips they’re desperate to get rid of?

They are also sitting on a bunch of new “broken” (128 instead of 256 CUDA cores) Maxwell chips for the Nintendo Switch Lite at 16nm. I’m pretty sure this Jetson Nano is 16nm.

So what can you do with it besides video image processing? Folding@Home?

Run a media server for your TV? Mine Bitcoins?