The rumor mill has recently been buzzing about Nintendo’s plans to introduce a new version of their extremely popular Switch console in time for the holidays. A faster CPU, more RAM, and an improved OLED display are all pretty much a given, as you’d expect for a mid-generation refresh. Those upgraded specifications will almost certainly come with an inflated price tag as well, but given the incredible demand for the current Switch, a $50 or even $100 bump is unlikely to dissuade many prospective buyers.

But according to a report from Bloomberg, the new Switch might have a bit more going on under the hood than you’d expect from the technologically conservative Nintendo. Their sources claim the new system will utilize an NVIDIA chipset capable of Deep Learning Super Sampling (DLSS), a feature which is currently only available on high-end GeForce RTX 20 and GeForce RTX 30 series GPUs. The technology, which has already been employed by several notable PC games over the last few years, uses machine learning to upscale rendered images in real-time. So rather than tasking the GPU with producing a native 4K image, the engine can render the game at a lower resolution and have DLSS make up the difference.

The implications of this technology, especially on computationally limited devices, are immense. For the Switch, which doubles as a battery-powered handheld when removed from its dock, the use of DLSS could allow it to produce visuals similar to the far larger and more expensive Xbox and PlayStation systems it’s in competition with. If Nintendo and NVIDIA can prove DLSS to be viable on something as small as the Switch, we’ll likely see the technology come to future smartphones and tablets to make up for their relatively limited GPUs.

But why stop there? If artificial intelligence systems like DLSS can scale up a video game, it stands to reason the same techniques could be applied to other forms of content. Rather than saturating your Internet connection with a 16K video stream, will TVs of the future simply make the best of what they have using a machine learning algorithm trained on popular shows and movies?

How Low Can You Go?

Obviously, you don’t need machine learning to resize an image. You can take a standard resolution video and scale it up to high definition easily enough, and indeed, your TV or Blu-ray player is doing exactly that when you watch older content. But it doesn’t take a particularly keen eye to immediately tell the difference between a DVD that’s been blown up to fit an HD display and modern content actually produced at that resolution. Taking a 720 x 480 image and pushing it up to 1920 x 1080, or even 3840 x 2160 in the case of 4K, is going to lead to some pretty obvious image degradation.
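To make the distinction concrete, here is a minimal sketch of the kind of conventional (non-AI) scaling described above, using nearest-neighbour and bilinear interpolation on a tiny grayscale image stored as nested lists. The nested-list representation is an assumption for brevity; real players work on full-colour frames, usually in dedicated hardware. Every output pixel is either a copy or a weighted average of existing pixels, which is why this approach can't add detail that wasn't in the source.

```python
def upscale_nearest(img, new_w, new_h):
    """Copy the closest source pixel into each output position."""
    old_h, old_w = len(img), len(img[0])
    return [
        [img[y * old_h // new_h][x * old_w // new_w] for x in range(new_w)]
        for y in range(new_h)
    ]


def upscale_bilinear(img, new_w, new_h):
    """Blend the four nearest source pixels, weighted by distance."""
    old_h, old_w = len(img), len(img[0])
    out = []
    for y in range(new_h):
        # Map the centre of the output pixel back into source coordinates,
        # clamping so we never read past the image edge.
        sy = min(max((y + 0.5) * old_h / new_h - 0.5, 0.0), old_h - 1)
        y0 = int(sy)
        y1 = min(y0 + 1, old_h - 1)
        fy = sy - y0
        row = []
        for x in range(new_w):
            sx = min(max((x + 0.5) * old_w / new_w - 0.5, 0.0), old_w - 1)
            x0 = int(sx)
            x1 = min(x0 + 1, old_w - 1)
            fx = sx - x0
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


# A 2 x 2 "image" with hard black/white edges, scaled to 4 x 4.
tiny = [[0, 255],
        [255, 0]]
blocky = upscale_nearest(tiny, 4, 4)
smooth = upscale_bilinear(tiny, 4, 4)
for row in smooth:
    print([round(v, 1) for v in row])
```

Nearest-neighbour produces the blocky look, while bilinear softens the hard edges into blur. Neither recovers sharp detail, and that gap is exactly what AI upscalers try to fill.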

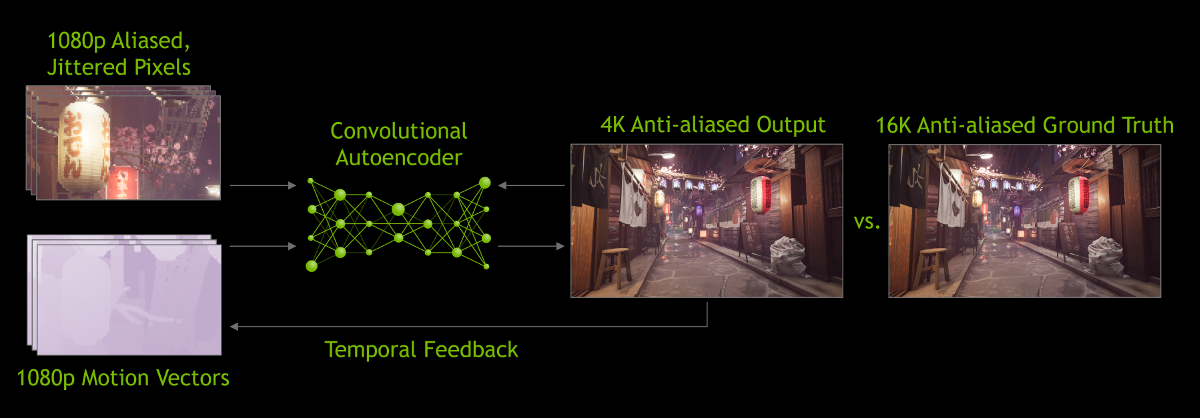

To address this fundamental issue, AI-enhanced scaling actually creates new visual data to fill in the gaps between the source and target resolutions. In the case of DLSS, NVIDIA trained their neural network by taking low and high resolution images of the same game and having their in-house supercomputer analyze the differences. To maximize the results, the high resolution images were rendered at a level of detail that would be computationally impractical or even impossible to achieve in real-time. Combined with motion vector data, the neural network was tasked not only with filling in the visual information needed to bring the low resolution image closer to the ideal target, but also with predicting what the next frame of animation might look like.
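The training idea can be illustrated with a toy example. NVIDIA rendered separate low and high resolution frames and also supplied motion vectors; this sketch instead assumes the low resolution input is produced by averaging blocks of the high resolution reference, and uses plain pixel duplication as a stand-in for the network. The function names are hypothetical. The point is the objective: the mean squared error between the upscaled output and the reference is the kind of quantity a trained model would try to drive down.

```python
def box_downsample(img, k):
    """Average each k x k block of the high-res image into one low-res pixel."""
    h, w = len(img), len(img[0])
    return [
        [
            sum(img[y * k + dy][x * k + dx] for dy in range(k) for dx in range(k)) / (k * k)
            for x in range(w // k)
        ]
        for y in range(h // k)
    ]


def duplicate_upscale(img, k):
    """Placeholder 'model': repeat every pixel k times in each direction."""
    return [[v for v in row for _ in range(k)] for row in img for _ in range(k)]


def mse(a, b):
    """Mean squared error between two equally sized images."""
    diffs = [(va - vb) ** 2 for ra, rb in zip(a, b) for va, vb in zip(ra, rb)]
    return sum(diffs) / len(diffs)


# High-res "ground truth": a 4 x 4 checkerboard, i.e. detail at the pixel level.
high = [[255 if (x + y) % 2 else 0 for x in range(4)] for y in range(4)]
low = box_downsample(high, 2)           # every 2 x 2 block averages to 127.5
prediction = duplicate_upscale(low, 2)  # flat grey: the checkerboard is gone
loss = mse(prediction, high)
print(low, loss)
```

A network trained on many such pairs can learn what kind of detail usually belongs where downsampling blurred it away; the duplicating placeholder cannot, so its loss stays high.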

While fewer than 50 PC games support the latest version of DLSS at the time of this writing, the results so far have been extremely promising. The technology should let current computers keep up with newer and more complex games for longer, and for existing titles, deliver substantially higher frame rates (FPS). In other words, if you have a computer powerful enough to run a game at 30 FPS in 1920 x 1080, the same computer could potentially reach 60 FPS if the game were rendered at 1280 x 720 and scaled up with DLSS.
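The 30 FPS to 60 FPS example can be sanity-checked with rough frame-time arithmetic. This sketch makes a strong simplifying assumption, flagged in the comments: that the whole frame time scales linearly with the number of pixels rendered. In practice CPU work, geometry processing, and the upscaling pass itself don't shrink with resolution, so treat the result as a best case.

```python
def frame_time_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


native_pixels = 1920 * 1080    # 2,073,600
internal_pixels = 1280 * 720   # 921,600
pixel_ratio = native_pixels / internal_pixels  # 2.25x fewer pixels to shade

# ASSUMPTION: frame time is proportional to pixel count (best case).
native_ms = frame_time_ms(30)                # ~33.3 ms per frame at 1080p
internal_ms = native_ms / pixel_ratio        # ~14.8 ms per frame at 720p
target_ms = frame_time_ms(60)                # ~16.7 ms allowed for 60 FPS
upscale_budget_ms = target_ms - internal_ms  # time left for the upscaler

print(f"pixel ratio: {pixel_ratio}")
print(f"left over for upscaling: {upscale_budget_ms:.2f} ms")
```

Under that assumption, reaching 60 FPS leaves roughly 1.85 ms per frame for the upscaling step, which gives a sense of how fast that step has to be.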

There’s been plenty of opportunity to benchmark the real-world performance gains of DLSS on supported titles over the last year or two, and YouTube is filled with head-to-head comparisons that show what the technology is capable of. In a particularly extreme test, 2kliksphilip ran 2019’s Control and 2020’s Death Stranding at just 427 x 240 and used DLSS to scale them up to 1280 x 720. While the results weren’t perfect, both games ended up looking far better than they had any right to considering they were being rendered at a resolution we’d more likely associate with the Nintendo 64 than a modern gaming PC.
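The 427 x 240 figure follows from a per-axis scale factor of 3 applied to 1280 x 720 (1280 / 3 is about 426.67, rounded up to 427). This small helper, with hypothetical names that are not part of any DLSS API, computes the internal render size and the resulting pixel ratio. Because the factor applies to both axes, the number of pixels actually rendered drops by roughly its square.

```python
def render_resolution(out_w, out_h, scale):
    """Internal render size for a given per-axis upscaling factor (rounded)."""
    return round(out_w / scale), round(out_h / scale)


def pixel_ratio(out_res, in_res):
    """How many output pixels exist for every rendered pixel."""
    return (out_res[0] * out_res[1]) / (in_res[0] * in_res[1])


extreme = render_resolution(1280, 720, 3)
print(extreme, round(pixel_ratio((1280, 720), extreme), 2))
```

In the extreme test, the GPU is shading roughly one pixel for every nine that end up on screen.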

AI Enhanced Entertainment

While these may be early days, it seems pretty clear that machine learning systems like Deep Learning Super Sampling hold a lot of promise for gaming. But the idea isn’t limited to just video games. There’s also a big push towards using similar algorithms to enhance older films and television shows for which no higher resolution version exists. Both proprietary and open software is now available that leverages the computational power of modern GPUs to upscale still images as well as video.

Of the open source tools in this arena, the Video2X project is well known and under active development. This Python 3 framework makes use of the waifu2x and Anime4K upscalers, which as you might have gathered from their names, have been designed to work primarily with anime. The idea is that you could take an animated film or series that was only ever released in standard definition, and by running it through a neural network specifically trained on visually similar content, bring it up to 1080 or even 4K resolution.
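Conceptually, a tool like this treats a video as a sequence of still images: decode the frames, run each through the chosen upscaler, then re-encode the results. The sketch below shows only that overall shape. It assumes frames are already decoded into small grayscale arrays, and the backend registry and function names are hypothetical, not Video2X's actual API; the pixel-duplication backend merely stands in for a neural upscaler such as waifu2x or Anime4K.

```python
from typing import Callable, Dict, Iterable, Iterator, List

Frame = List[List[int]]
Backend = Callable[[Frame, int], Frame]


def duplicate_backend(frame: Frame, scale: int) -> Frame:
    """Stand-in upscaler: repeat each pixel `scale` times in both directions."""
    return [[v for v in row for _ in range(scale)] for row in frame for _ in range(scale)]


# Hypothetical registry; a real tool would wire in neural backends here.
BACKENDS: Dict[str, Backend] = {"duplicate": duplicate_backend}


def upscale_frames(frames: Iterable[Frame], backend: str, scale: int) -> Iterator[Frame]:
    """Lazily upscale frames one at a time so the whole video never sits in memory."""
    upscale = BACKENDS[backend]
    for frame in frames:
        yield upscale(frame, scale)


# Two tiny 1 x 2 "frames" standing in for decoded video.
clip = [[[10, 20]], [[30, 40]]]
result = list(upscale_frames(clip, "duplicate", 2))
print(result)
```

Keeping the backend behind a simple callable is what makes it easy to swap upscalers; note that in this sketch each frame is processed without any knowledge of its neighbours.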


While getting the software up and running can be somewhat fiddly given the different GPU acceleration frameworks available depending on your operating system and hardware platform, this is something that anyone with a relatively modern computer is capable of doing on their own. As an example, I’ve taken a 640 x 360 frame from Big Buck Bunny and scaled it up to 1920 x 1080 using default settings on the waifu2x upscaler backend in Video2X:

When compared to the native 1920 x 1080 image, we can see some subtle differences. The shading of the rabbit’s fur is not quite as nuanced, the eyes lack a certain luster, and most notably the grass has gone from individual blades to something that looks more like an oil painting. But would you have really noticed any of that if the two images weren’t side by side?
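Side-by-side viewing is the most honest test, but differences like these are often summarised numerically with peak signal-to-noise ratio (PSNR), computed from the mean squared error against the native frame. This minimal sketch assumes 8-bit pixel values and works on tiny nested lists rather than real image files; comparing actual frames would need an imaging library to load them. PSNR measures pixel error rather than perceived quality, so it won't necessarily agree with what your eye notices, such as grass turning into brush strokes.

```python
import math


def psnr(reference, candidate, max_value=255):
    """Peak signal-to-noise ratio in dB between two equally sized 8-bit images."""
    diffs = [
        (r - c) ** 2
        for ref_row, cand_row in zip(reference, candidate)
        for r, c in zip(ref_row, cand_row)
    ]
    mse = sum(diffs) / len(diffs)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_value ** 2 / mse)


native = [[100, 100], [100, 100]]
upscaled = [[110, 90], [100, 100]]  # two pixels off by 10
print(f"{psnr(native, upscaled):.2f} dB")
```

Higher values mean the upscaled frame is numerically closer to the native one.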

Some Assembly Required

In the previous example, AI was able to triple the resolution of an image along each axis, or nine times the pixel count, with negligible graphical artifacts. But what’s perhaps more impressive is that the file size of the 640 x 360 frame is only a fifth that of the original 1920 x 1080 frame. Extrapolate that difference over the length of a feature film, and it’s clear how this technology could have a huge impact on the massive bandwidth and storage costs associated with streaming video.
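Some back-of-the-envelope arithmetic shows the scale involved. A 640 x 360 frame has one ninth the pixels of a 1920 x 1080 frame, yet its measured file size was about one fifth, so the savings in bytes are smaller than the savings in pixels. The sketch applies that one-fifth figure to a two-hour film at a hypothetical 8 Mbit/s stream; both the bitrate and the assumption that a single still frame's size ratio carries over to compressed video are illustrative only.

```python
def stream_size_gb(bitrate_mbps, duration_s):
    """Total data for a stream: megabits/s x seconds -> gigabytes (decimal)."""
    return bitrate_mbps * duration_s / 8 / 1000


pixel_ratio = (1920 * 1080) / (640 * 360)  # 9.0: pixels saved per frame
size_ratio = 5                             # measured file-size ratio from the text

film_s = 2 * 60 * 60               # a two-hour feature
full = stream_size_gb(8, film_s)   # HYPOTHETICAL 8 Mbit/s full-resolution stream
reduced = full / size_ratio        # ASSUMES the still-frame ratio holds for video

print(pixel_ratio, round(full, 2), round(reduced, 2))
```

Under these assumptions, roughly 7.2 GB per film shrinks to about 1.44 GB, before counting whatever the neural network model itself adds.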

Imagine a future where, instead of streaming an ultra-high resolution movie from the Internet, your device is given a video stream at 1/2 or even 1/3 of the target resolution, along with a neural network model that has been trained on that specific piece of content. Your AI-enabled player could then take this “dehydrated” video and scale it in real-time to whatever resolution is appropriate for your display. Rather than saturating your Internet connection, it would work a bit like the dehydrated pizza in Back to the Future Part II.

The only technical challenge standing in the way is the time it takes to perform this sort of upscaling: when running Video2X on even fairly high-end hardware, a rendering speed of 1 or 2 FPS is considered fast. It would take a huge bump in computational power to do real-time AI video scaling, but the progress NVIDIA has made with DLSS is certainly encouraging. Of course, film buffs would argue that such a reproduction may not fit with the director’s intent, but when people are watching movies 30 minutes at a time on their phones while commuting to work, it’s safe to say that ship has already sailed.
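The gap between 1 or 2 FPS and real-time playback is easy to quantify. This sketch assumes a two-hour film at 24 frames per second (a common cinema frame rate, used here as an illustrative value) and computes how long an offline upscale would take and how much faster it would need to run to keep up with playback.

```python
def upscale_hours(duration_s, content_fps, processing_fps):
    """Wall-clock hours to upscale every frame at a given processing speed."""
    total_frames = duration_s * content_fps
    return total_frames / processing_fps / 3600


FILM_S = 2 * 60 * 60   # two hours
CONTENT_FPS = 24       # ASSUMED frame rate of the source

for processing_fps in (1, 2):
    hours = upscale_hours(FILM_S, CONTENT_FPS, processing_fps)
    speedup_needed = CONTENT_FPS / processing_fps
    print(f"{processing_fps} FPS: {hours:.0f} h to process, "
          f"needs {speedup_needed:.0f}x speedup for real time")
```

At 1 FPS the film's 172,800 frames take two full days; even at 2 FPS, real-time playback would need a twelvefold speedup.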

This is how Skynet begins…

Yip… scary… upscaled pictures and fake news from your personal markov chains… all generated by F* or G* for exclusively you… welcome to YOUR new reality.

That’s always been the way of things though, hasn’t it? Your fake news was spread by ear and mouth rather than http, but it’s pretty much the same.

If Skynet begins by enhancing pictures of bunnies, we might just be OK. Either that, or the whole world will end up as some sort of demented “Who Framed Roger Rabbit” scenario.

“the use of DLSS could allow it to produce visuals similar to the far larger and more expensive Xbox and PlayStation systems”

This is false.

There’s much more than resolution that contributes to the fidelity of a game’s visuals. LODs, texture resolution up close, the complexity of particle effects, screen-space or raytraced reflections, lighting, GI, shadow quality (penumbra shadows), the polygon size of models, the number of batched/unbatched models (important for open world games especially) and a few other things aren’t really enhanced by DLSS, and are still a bit out of reach of lower powered systems. Eventually these smaller systems will adopt this kind of tech, but it won’t be through DLSS, because these improvements don’t come from simply upscaling. I’d even say the difference in resolution between 4K and 1080p is less important than the difference between having 200 objects (rocks, trees, props) rendered on screen and having 1000.

I’ve been playing Breath of the Wild lately. Having a lot of fun, and it does look amazing for a handheld! But upscaling it to 4K won’t make the world have the same visual fidelity as Far Cry 5 or Red Dead Redemption 2.

DLSS is a great tech for upscaling, and definitely worth putting money and research into. But the sentence I quoted is just plainly wrong, since it doesn’t attempt to make a distinction between resolution and the myriad of other things that add visual detail.

It isn’t “false” with the operating words “could” and “similar”.

Additionally, the article acknowledges that broadly upscaling isn’t the same and produces degraded images and goes on to explain a scenario with “a neural network model that had been trained on that specific piece of content.”

The article further qualifies and acknowledges your concerns about two-thirds of the way through:

“we can see some subtle differences. The shading of the rabbit’s fur is not quite as nuanced, the eyes lack a certain luster”.

Reading your comment, which plainly ignored the hypothetical nature of this article and suggests you did not read the entire thing, was frustrating. I’m not even entirely certain why I’m bothering to interact with it, really.

Talk about missing the point. The comparison between the Switch and other consoles is just establishing why Nintendo would be interested in the tech. It’s a throwaway line, never mentioned again.

That’s because this post isn’t even about the Switch, but the concept of AI upscaling in media. This comment is completely irrelevant, and should be deleted.

Well we now know more on what makes for a good scene aka audience participation so someone will buy that for a dollar.

It wouldn’t directly do that, but it could indirectly aid in improving all the things you are talking about. Take two games running at 1080p, one natively and the other through DLSS which is upscaling from 720p (entirely hypothetical numbers, but they sound reasonable for a portable device). With efficient upscaling, you could free up the GPU enough to handle more polygons, more complex shaders, more particles, etc. I am not an expert in this, and I am sure that it will have some downsides, but I can absolutely see DLSS or similar upscaling techniques being used in this way.

This comment is all over the place:

1. You definitely missed the point of the whole article and left a comment without getting past the intro. This is bad, stop it. This article isn’t even about the Switch; the Switch is just the first cheap device that may (it doesn’t even sound like it’s been confirmed) get this tech.

2. Even if the post WAS about the Switch, you’d still be wrong. The whole idea with DLSS is to allow the game to be rendered at a lower res, and use AI to make up the difference. By rendering the game at a lower resolution, the developers can do more with the relatively limited CPU/RAM on the Switch (which remember, would be upgraded on this version also). So that means more particles, more textures, more lighting, etc, etc. Then DLSS brings that up to 1080p or perhaps even 4K.

So yes, you would be able to achieve at least SIMILAR graphics to the more powerful machines. Obviously not the same level as Microsoft and Sony’s monster machines, but nobody claimed it would be. Nintendo is just trying to at least stay in the game visually (no pun intended) given the inherent limitations of the Switch (cost, portable mode, etc).

For video content, that’s already how compressed encoding works, in principle. This is just an evolutionary step that assumes much higher computational capacity in the decoder.

Mid-generation upgrades?

Does this mean that some existing games don’t run well on the existing switch so new hardware was needed to improve performance?

Or that new games will be coming out that don’t run well on original Switches?

Might as well just buy a PC if console versions are going to be as complicated as one.

Even launch games like breath of the wild struggled on the Switch so that’s part of it.

With the 3DS, Nintendo made a two-tier system (3, or is that 6 if you include the 2DS?) when they introduced the New 3DS line, which had games that couldn’t be played on the original lineup.

Actually, it’s a fairly checkered history of breaking generational updates when you include the DS, NDS, DSi.

Well yes, but that’s how it’s been for several years now. Microsoft and Sony already did mid-generation refreshes of their consoles, and you could probably even argue the Wii U was a refresh of the Wii. The newer machines generally play the older titles (perhaps with enhancements) and then eventually get their own exclusive titles. Then the next machine comes around, and the process repeats.

Your comparison with PC gaming is actually exactly what they’re going for. Rather than buying a console every 8 to 10 years and being stuck with a finite amount of processing power, you get a new one every 3 that takes advantage of the newest tech while still retaining backwards compatibility.

It still ends up being cheaper than trying to keep up with latest PC development, and you’re still free from the hassles of actually gaming on a computer (namely, using windows).

I think that’s specifically why they are taking this approach rather than installing a beefier GPU and then making games that support the new version of the switch (but possibly not the old). In this case, all existing games work just like they used to, all textures and geometry etc work the same, and code doesn’t need to be ported or even recompiled.

For a mid-gen refresh, this is a damn good idea. Give users of the new hardware a little something extra while not interfering with the software ecosystem.

Without real compelling content it really means nothing. Where is Metroid? Anything not Mario? The store is a cluttered mess with many subpar titles. The hardware is nice. Nintendo has really dropped the ball on games though.

DLSS for Atari 2600 when? Atari games in native resolution look really blocky on a 4K TV.

Haha, just for kicks I used the Vertexshare upscaler to get Donkey Kong 4x larger: https://i.imgur.com/aEZlqD5.jpg

+1 except for vintage arcade games! I know it’s silly, but playing an old arcade game on an HDTV with the “feel” of a CRT arcade monitor would just be sweet. The shaders in MAME are trying, but if DLSS could do it better I’d be all for it!

And here I am gaming 4bit color at 720×576@25fps. Tales of the Arabian Night is as ridiculously difficult as I remember. Gameplay over graphics.

Technically, every game is in Pi’s digits. You only need to store the position of the game in Pi’s digits. That’s far better compression than this AI stuff. Sorry, I have to leave, I need to finish finding Hamlet in Pi…

Your eyes must be a lot better than mine. I can’t detect any appreciable difference between those two Big Buck Bunny stills.

DLSS might be a way to get around the issue of cable ISPs slapping on data caps to discourage competition from video streaming. For example, Comcast has a 1TB/month cap. A family all streaming different HD content will hit that cap PDQ. But at 9:1 DLSS compression [e.g. from the YouTube example (1280 * 720) / (427 * 240) = 8.99], you get 9 times more streaming content before you hit the cap. But I’m not counting the download of the ML model data prior to viewing the content (perhaps one set per movie?). If the ML data sets are fairly small (they may be if the models are highly compressible), then it won’t matter much. But if the ML models are big and must be unique to each movie, then DLSS falls flat as a data cap mitigator.
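The trade-off this comment describes can be modelled in a few lines. Everything here is an assumption for illustration: a 1 TB (1000 GB) cap, a hypothetical 5 Mbit/s HD stream, two-hour titles, the comment's premise that a 9:1 pixel reduction translates into a 9:1 bitrate reduction, and made-up per-movie model sizes. The function name is hypothetical.

```python
def viewing_hours_under_cap(cap_gb, bitrate_mbps, reduction=1.0,
                            model_gb=0.0, title_hours=2.0):
    """Hours of video that fit under a data cap, counting one model download per title."""
    gb_per_hour = bitrate_mbps * 3600 / 8 / 1000 / reduction
    gb_per_title = gb_per_hour * title_hours + model_gb
    return cap_gb / gb_per_title * title_hours


CAP_GB = 1000   # ASSUMED 1 TB cap
HD_MBPS = 5     # HYPOTHETICAL HD bitrate

plain = viewing_hours_under_cap(CAP_GB, HD_MBPS)
small_model = viewing_hours_under_cap(CAP_GB, HD_MBPS, reduction=9, model_gb=0.5)
big_model = viewing_hours_under_cap(CAP_GB, HD_MBPS, reduction=9, model_gb=5.0)

print(f"plain stream:        {plain:.0f} h")
print(f"9:1 + 0.5 GB model:  {small_model:.0f} h")
print(f"9:1 + 5 GB model:    {big_model:.0f} h")
```

With a small per-movie model the savings survive (about 2000 hours versus 444), but a large one erases them (about 364 hours), which matches the caveat about model size.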

Remember in-game cut-scenes, especially Sony? Note the quality. That could be another way of doing content.

You make it Nintendo, I will buy it.

madVR already supports AI upscaling when the NGU option is used.

So Hollywood’s depiction of zooming in and enhancing crappy surveillance video in stupid cop shows is about to become reality?

Sorta. The AIs fabricate data to fill in the gaps. Usable for movies and video games, but not court evidence.
