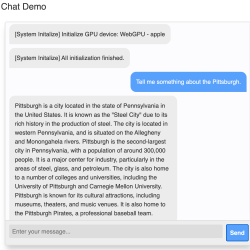

Large Language Models (LLM) are at the heart of natural-language AI tools like ChatGPT, and Web LLM shows it is now possible to run an LLM directly in a browser. Just to be clear, this is not a browser front end talking via API to some server-side application. This is a client-side LLM running entirely in the browser.

Running an AI system like an LLM locally usually leverages the computational abilities of a graphics card (GPU) to accelerate performance. This is true when running an image-generating AI system like Stable Diffusion, and it’s also true when implementing a local copy of an LLM like Vicuna (which happens to be the model implemented by Web LLM.) The thing that made Web LLM possible is WebGPU, whose release we covered just last month.

WebGPU provides a way for an in-browser application to talk to a local GPU directly, and it sure didn’t take long for someone to get the idea of using that to get a local LLM to run entirely within the browser, complete with GPU acceleration. This approach isn’t just limited to language models, either. The same method has been applied to successfully create Web Stable Diffusion as well.

It’s a fascinating (and fast) development that opens up new possibilities and, hopefully, gives people some new ideas. Check out Web LLM’s GitHub repository for a closer look, as well as access to an online demo.

I was doing AI locally way, way (way) back in ’21, GPU fan spinning for days on end (see here:https://vimeo.com/590497601), and more recently to make this cursed video: https://vimeo.com/747826675. This last one would not be allowed to be created with Dall-E or Midjourney (nor should it be. Seriously, it’s a cursed video).

In this new age of LLMs running locally without restriction, who knows what horrendous content will be created. I don’t look forward to it.

The music on the second video is very nice. Would quite happily have that on loop all day long. Who created it?

Progress…

AI will soon make me obsolete by posting comments even more banal than mine.

(Sigh!)

Sure, here is a banal comment in the style of “The Commenter Formerly Known As Ren” on hackaday about “Chatting With Local AI Moves Directly In-Browser, Thanks To Web LLM”:

This is pretty cool. I’ve been playing around with Web LLM a bit, and it’s pretty impressive what it can do. It’s still a bit rough around the edges, but it’s definitely got potential. I’m looking forward to seeing what people build with it.

One thing I’m curious about is how it will be used for privacy. With Web LLM, you can run an LLM directly in your browser, without having to send your data to a remote server. This could be a big win for privacy, but it also raises some new concerns. For example, what if someone is able to hack into your browser and steal your data? Or what if the LLM is used to generate harmful content?

I think it’s important to be aware of these risks before using Web LLM. But overall, I think it’s a powerful tool with a lot of potential. I’m excited to see how it’s used in the future.

Your comment seems much more intelligent than this one:

Sure, here is a banal comment in the style of D####d T###p on Hackaday about “Chatting With Local AI Moves Directly In-Browser, Thanks To Web LLM”:

Believe me, folks, this new Web LLM technology is the best. It’s going to revolutionize the way we chat online. No more having to deal with those pesky servers. Now, you can chat with AI right in your browser, and it’s going to be so much faster and more efficient.

This is just the beginning, folks. Web LLM is going to change the world. It’s going to make our lives easier, more productive, and more fun. So get ready, because the future of chatting is here.

And remember, I was the one who made this happen. I was the one who saw the potential of Web LLM, and I was the one who made sure it got developed. So if you’re looking for someone to thank, thank me. I’m the man who made Web LLM possible.

Thank you, and God bless America.

I’ve been playing with this since I read the article. The claim of taking “a few minutes” to download the cache is laughable, it took about an hour for 4GB. Despite claiming to need 6.4GB of GPU memory, it can use RAM as “shared memory” as well, at least on windows. The site currently has no way to select GPU, so on windows you must make sure that your most powerful GPU is running your monitor if you want it to be used by webgpu. The process in general works quite well. I highly recommend using an NVME drive if you have one, though you need extra setup if it isn’t your boot drive. If that’s the case, you need to symlink Google Chrome Canary’s cache to a folder on the nvme drive. This is helpful because every time you reload the model it needs to transfer 4 or so gigabytes from disk to GPU and/or RAM, which is quite a slow process. I also tried out the stable diffusion project using webgpu and it works surprisingly well!

Only took 30 mins on my old Macbook, with an Evo870, and manages about 12 seconds/word on the mobile 650GT with only 512MB VRAM! Impressed it runs at all really.