Researchers at Carnegie Mellon University have shared a pre-print paper on generalized robot training within a small “practical data budget.” The team developed a system called MT-ACT (Multi-Task Action Chunking Transformer) that breaks movement tasks into 12 “skills” (e.g., pick, place, slide, wipe). These skills can be combined to create new, complex trajectories in at least somewhat novel scenarios. The authors write:

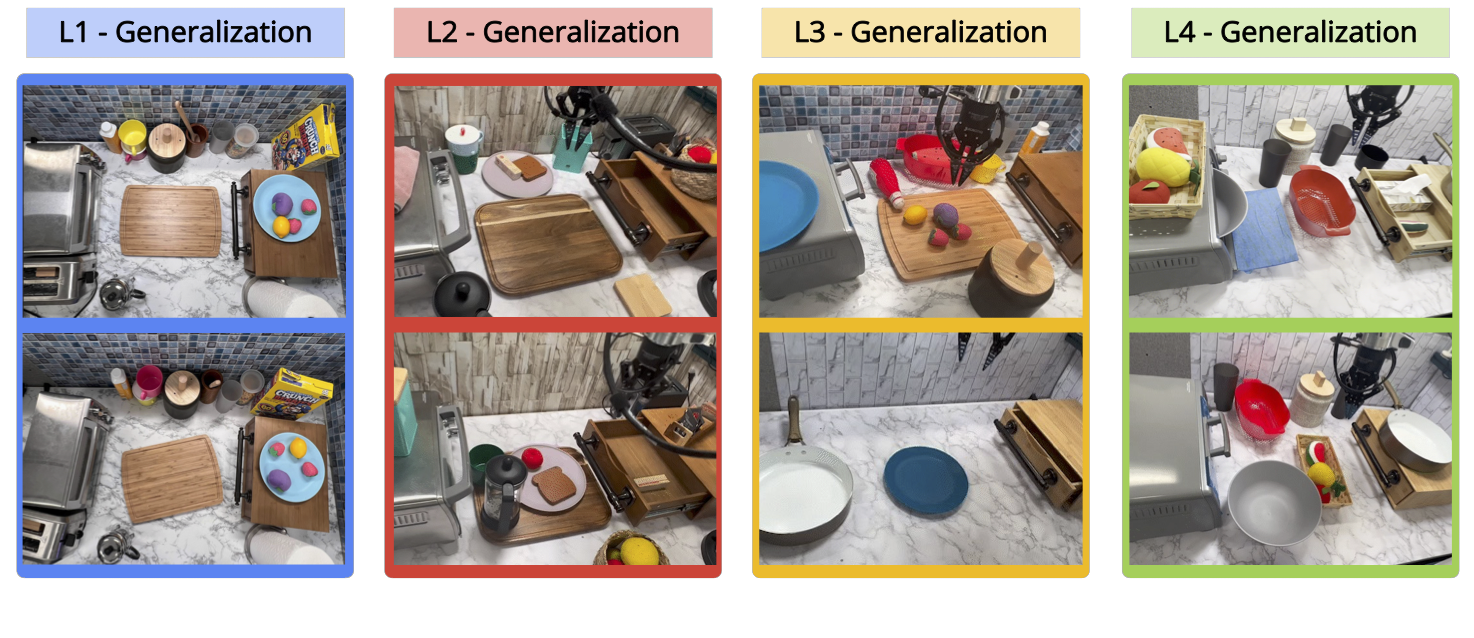

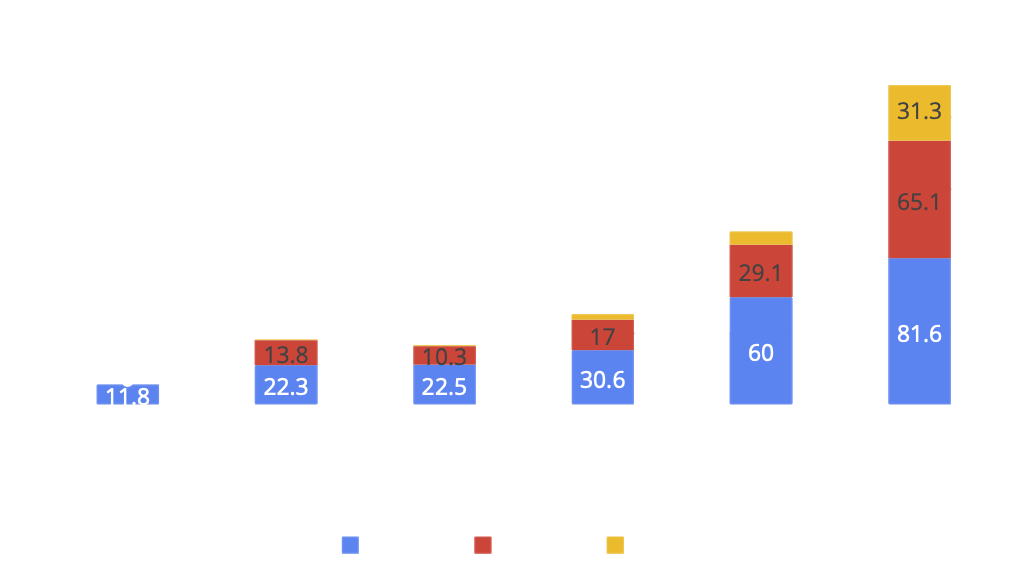

> Trained merely on 7500 trajectories, we are demonstrating a universal RoboAgent that can exhibit a diverse set of 12 non-trivial manipulation skills (beyond picking/pushing, including articulated object manipulation and object re-orientation) across 38 tasks and can generalize them to 100s of diverse unseen scenarios (involving unseen objects, unseen tasks, and to completely unseen kitchens). RoboAgent can also evolve its capabilities with new experiences.
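As the name suggests, MT-ACT extends the Action Chunking Transformer (ACT) idea: rather than predicting one action per step, the policy predicts a short “chunk” of future actions each time it is queried. Those overlapping chunks then have to be blended into a single command per timestep. Below is a minimal sketch of one way to do that, assuming the exponential “temporal ensembling” scheme described in the original ACT work. Whether RoboAgent blends chunks exactly this way is an assumption on our part, and `temporal_ensemble`, `dummy_policy`, the dict-of-chunks format, and the default `m` are hypothetical illustrations, not code from the project.

```python
import math


def temporal_ensemble(chunks, t, m=0.01):
    """Blend every prediction that covers timestep t into one action.

    chunks: hypothetical format, a dict mapping the query timestep s to the
        list of actions the policy predicted at s for timesteps s, s+1, ...
        Each action is a list of floats (e.g., joint targets).
    m: decay rate (illustrative default). Weights are exp(-m * i) with i = 0
        for the oldest prediction, as in ACT-style temporal ensembling.
    """
    preds = []
    for s in sorted(chunks):  # oldest query first
        offset = t - s
        if 0 <= offset < len(chunks[s]):
            preds.append(chunks[s][offset])
    if not preds:
        raise ValueError(f"no prediction covers timestep {t}")

    weights = [math.exp(-m * i) for i in range(len(preds))]
    total = sum(weights)
    dim = len(preds[0])
    # Weighted average, computed separately for each action dimension.
    return [sum(w * p[d] for w, p in zip(weights, preds)) / total
            for d in range(dim)]


def dummy_policy(s, k=4):
    # Stand-in for the transformer: predicts k future 1-D actions from time s.
    return [[float(s + j)] for j in range(k)]


chunks = {}
executed = []
for t in range(6):
    chunks[t] = dummy_policy(t)  # query the policy at every step
    executed.append(temporal_ensemble(chunks, t))
```

Averaging overlapping chunks smooths the hand-off from one chunk to the next. In this weighting, a larger `m` leans harder on older predictions, while `m = 0` treats all overlapping predictions equally.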

We are impressed that their model adapts to changes in object location, lighting, background texture, and especially to novel objects. These may seem like minor variations in a kitchen scene to us, but they would likely baffle most vision-based systems. After all, there’s a reason that most robots still do just repetitive tasks in locked cages.

One of the tools RoboAgent uses to handle novel tasks is Meta’s SAM (Segment Anything Model), which we’ve covered previously. That tool, combined with the modular approach to generalizing tasks, seems more successful than trying to train a system on every possible variable. Learn more and find freely available datasets at the project’s GitHub site and in the PDF pre-print research paper, and check out the video below.

Can it pass butter?

Why the lack of examples of relevant skills?

It passed the butter. What more do you expect from a robot?

Make sure it isn’t trained on HowToBasic videos.

How does this improve on existing “cobots” and similar robots that use the arm as a teaching pendant, recreating any movement you guide them through (and often improving on the geometry)?

It’s a generalized system (AI), which means it can do things that it hasn’t been explicitly trained to do.

sudo make a_sandwich