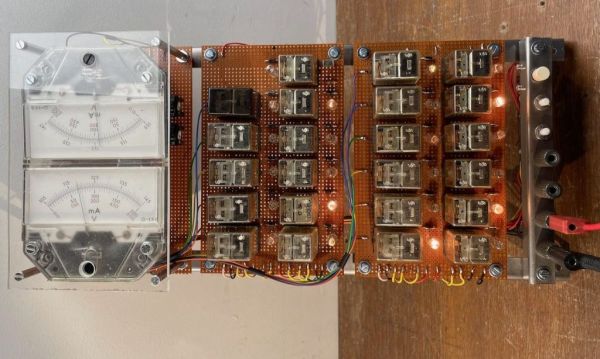

Electromechanical circuits using relays are mostly a lost art these days, but sometimes you get people like [Aart] who can’t resist turning a stack of clackity-clack relays into a functional design, in this case a clock (article in Dutch, Google Translate).

It was made using components that [Aart] had come into possession of over the years, with each salvaged part requiring the usual removal of old solder before being mounted on prototype boards. The resulting design uses the 1 Hz time signal from a Hörz DCF77 master clock which he set up to drive a clock network in his house, as he describes in a forum post at Circuits Online (also in Dutch).

The digital pulses from this time signal are used by the relay network to create the minutes and hours count, which are read out via a resistor ladder made using 0.1% resistors that drive two analog meters, one for the minutes and the other for the hours.
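To get a feel for what the resistor ladder has to do, here is a minimal Python sketch of an idealized readout. It assumes each count maps linearly onto the meter's scale and uses a hypothetical 1 mA full-scale movement; the names `meter_fraction` and `meter_current_ma` are made up for illustration, and none of this is taken from [Aart]'s actual (as yet undocumented) circuit.

```python
def meter_fraction(count: int, steps: int) -> float:
    """Fraction of full-scale deflection for a count in 0..steps-1.

    Assumes an idealized, perfectly linear ladder (an assumption,
    not the behavior of the real build).
    """
    if steps < 2:
        raise ValueError("need at least two steps")
    if not 0 <= count < steps:
        raise ValueError("count out of range")
    return count / (steps - 1)


def meter_current_ma(count: int, steps: int, full_scale_ma: float = 1.0) -> float:
    """Meter current in mA for a given count.

    full_scale_ma is a hypothetical full-scale rating, not a measured value.
    """
    return meter_fraction(count, steps) * full_scale_ma


if __name__ == "__main__":
    # Minutes dial: 60 positions, 0 through 59.
    for minute in (0, 15, 30, 59):
        print(minute, round(meter_current_ma(minute, 60), 4))
```

In this idealized model, one minute is 1/59 of full scale, or roughly 1.7%, so resistors with a 0.1% tolerance leave plenty of margin before adjacent minutes could blur together.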

Sadly, [Aart] has not drawn up a schematic yet, and there are a few issues he would like to resolve regarding the meter indicators that will be put in front of the analog dials. These currently have weird transitions between sections on the hour side, and the 59 – 00 transition on the minute dial happens in the middle of the scale. But as [Aart] says, this gives the meter its own character, which is an assessment that is hard to argue with.

Thanks to [Lucas] for the tip.
