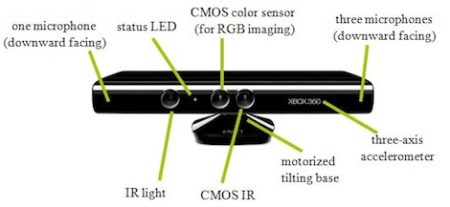

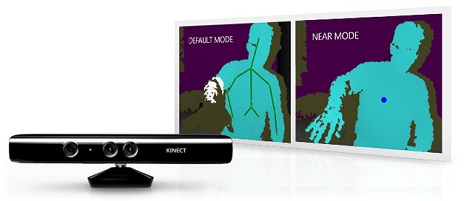

Even though we’ve seen dozens of Kinect hacks over the years, there are a few problems with the Kinect hardware itself. The Kinect sensor can’t see anything closer than about three feet, which is not conducive to 3D scanner builds. Also, it’s not possible to connect more than one Kinect to a single computer, a capability that would lead to builds we can barely imagine right now.

Fear not, because Microsoft just released the Kinect for Windows, which is designed expressly for hacking. The Kinect for Windows can reliably ‘see’ objects as close as 40 cm (16 in), and up to four Kinects can be connected to the same computer.

Microsoft set the price of Kinect for Windows at $250. This is a deal breaker for us: a new Kinect for Xbox sells for around half that. If you’re able to convince Microsoft you’re a student, the price of the Kinect for Windows comes down to $150. That’s not too shabby next to the price of a new Xbox Kinect.

We expect most of the builders out there have already picked up a Kinect or two from their local Craigslist or GameStop. If you haven’t (and have the all-important educational discount), this might be the one to buy.