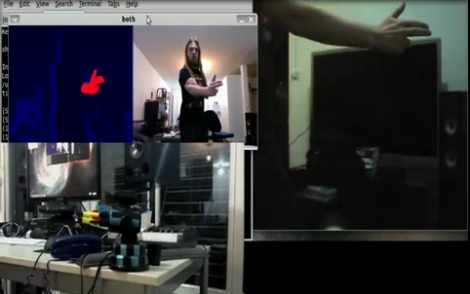

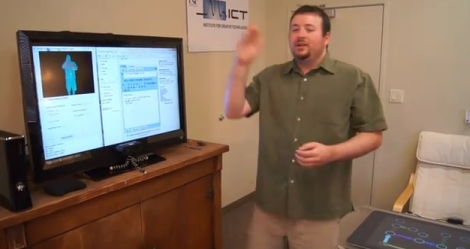

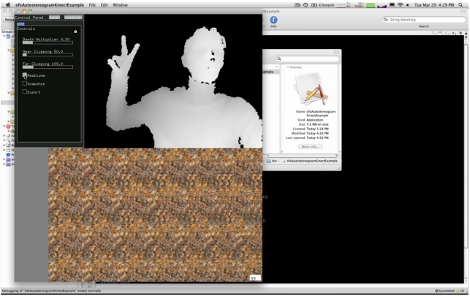

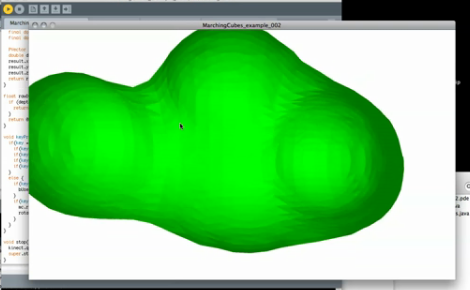

[Mike Newell] dropped us a line about his latest project, Bubble Boy, which uses the Kinect's point cloud functionality to render polygonal meshes in real time. In the video, [Mike] goes through the entire process, from installing the libraries to grabbing the code off of his site. Currently the rendering looks like a clump of dough (nightmarishly clawing at us with its nubby arms).

[Mike] is looking for suggestions on more efficient mesh and point cloud code, as he can't run at any higher resolution than what is shown in the video. You can hear his computer fan spool up after just a few moments of rendering! Anyone good with point clouds?
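To get the suggestions rolling, here is one common trick for lightening the load: thin out the point cloud before meshing it. This is only a rough sketch of voxel-grid downsampling in plain Python, not [Mike]'s code and not part of any Kinect library. The function name `voxel_downsample` and the `voxel_size` parameter are made up for illustration, and we are assuming the points arrive as simple `(x, y, z)` tuples.

```python
from collections import defaultdict


def voxel_downsample(points, voxel_size):
    """Replace all points that share a cubic voxel with their average.

    points: iterable of (x, y, z) tuples (assumed input format).
    voxel_size: edge length of each voxel, in the same units as the points.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")

    # Each bucket holds running sums of x, y, z and a point count.
    buckets = defaultdict(lambda: [0.0, 0.0, 0.0, 0])
    for x, y, z in points:
        # Floor division maps a coordinate to the index of its voxel.
        key = (
            int(x // voxel_size),
            int(y // voxel_size),
            int(z // voxel_size),
        )
        acc = buckets[key]
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1

    # One averaged point per occupied voxel.
    return [(sx / n, sy / n, sz / n) for sx, sy, sz, n in buckets.values()]
```

A larger `voxel_size` leaves fewer points to mesh, trading detail for frame rate. Whether that trade-off fixes the fan noise here is a guess, since it depends on where the time is actually being spent.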

Also, check out his video after the jump.
