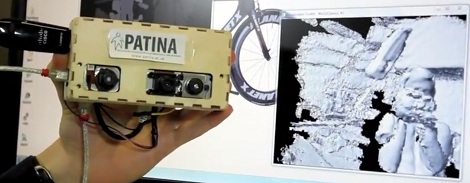

The builds using a Kinect as a 3D scanner just keep getting better and better. A team of researchers from the University of Bristol has portablized the Kinect by adding a battery, a single-board Linux computer, and a WiFi adapter. With their Mobile Kinect project, it’s now a snap to automatically map an environment without lugging a laptop around, or to give your next mobile robot an awesome vision system.

By making the Kinect portable, [Mike] et al. made Microsoft’s 3D imaging device much more capable than its present task of computing the volumetric space of the inside of a cabinet. The ReconstructMe project allows the Kinect to be used as a hand-held 3D scanner, and Kintinuous can be used to create 3D models of entire houses, buildings, or caves.

There’s a lot that can be done with a portablized, WiFi’d Kinect, and hopefully a few builds replicating the team’s work (except for replacing the Gumstix board with a Raspi) will be showing up on HaD shortly.

Video after the break.