Why let the TSA have all the fun when it comes to full body scanning? Not only can you get a digital model of yourself, but you can print it out to scale.

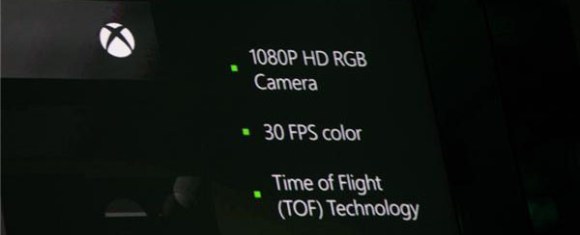

[Moheeb Zara] is still developing his Kinect-based full body scanner, but he took a bit of time to show off the first working prototype. The parts that went into the build were cut on a bandsaw, laser cut, or 3D printed. The scanning part of the rig uses a free-standing vertical rail that lets the Kinect move along the Z axis. The sled is held in place by gravity and raised along the rail by a winch, with steel cable looped over a pulley at the top.
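To get a feel for how the winch maps to Kinect height, here is a rough sketch. It is not [Moheeb]'s code. It assumes the single pulley at the top only redirects the cable (no mechanical advantage), so the sled rises by exactly the length of cable wound onto the spool. The function names and the 30 mm spool diameter are hypothetical.

```python
import math


def sled_height_change(spool_diameter_mm: float, spool_turns: float) -> float:
    """Vertical sled travel for a number of winch spool turns.

    Assumption: a single fixed pulley at the top of the rail only redirects
    the cable, so sled travel equals the cable length wound onto the spool.
    Ignores cable stacking on the spool and cable stretch.
    """
    return math.pi * spool_diameter_mm * spool_turns


def turns_for_height(spool_diameter_mm: float, height_mm: float) -> float:
    """Inverse of sled_height_change: spool turns needed to raise the sled."""
    return height_mm / (math.pi * spool_diameter_mm)


if __name__ == "__main__":
    # Hypothetical numbers: a 30 mm spool and 1.8 m of vertical travel.
    print(f"{turns_for_height(30.0, 1800.0):.1f} turns to cover 1.8 m")
```

In a real rig the cable piles up on the spool, so the effective diameter grows slightly with each wrap. A depth-based or encoder-based height reading would be more reliable than counting turns.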

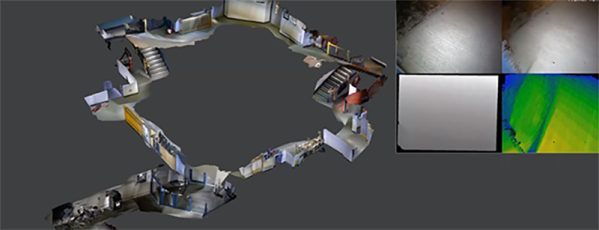

The subject stands on a rotating platform, which [Moheeb] designed and assembled. Beneath the platform you'll find a laser cut hoop with teeth on the inside. A motor mounted in a 3D printed bracket uses these teeth to rotate the platform. He still has some work to do to automate the platform; for this demo he stepped through each stage of the scanning process using manual switches. The captured data is assembled into a virtual model using ReconstructMe.
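Automating the manual switching mostly means deciding how far to turn the motor and when to move the sled. The sketch below is hypothetical and not taken from the project. It assumes a pinion on the motor meshing with the internal teeth of the hoop, and a simple schedule that sweeps one full platform revolution at each of several sled heights. The tooth counts, heights, and step count are made-up example values.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ScanStep:
    sled_height_mm: float      # Kinect height on the vertical rail
    platform_angle_deg: float  # platform rotation within the current sweep


def motor_turns_per_platform_turn(ring_teeth: int, pinion_teeth: int) -> float:
    """A fixed-axis pinion driving an internal ring gear turns the ring by
    pinion_teeth / ring_teeth of a revolution per pinion revolution, so the
    motor makes ring_teeth / pinion_teeth turns per platform revolution."""
    return ring_teeth / pinion_teeth


def scan_schedule(heights_mm: List[float], steps_per_rev: int) -> List[ScanStep]:
    """Hypothetical sequence: at each sled height, rotate the platform
    through one full revolution in equal angular increments."""
    step_deg = 360.0 / steps_per_rev
    return [
        ScanStep(h, i * step_deg)
        for h in heights_mm
        for i in range(steps_per_rev)
    ]


if __name__ == "__main__":
    # Hypothetical hardware: 120-tooth hoop, 12-tooth motor pinion.
    print("motor turns per platform turn:", motor_turns_per_platform_turn(120, 12))
    for s in scan_schedule([400.0, 900.0, 1400.0], 8):
        print(s)
```

Because ReconstructMe fuses depth frames as they arrive, a continuous slow rotation may work as well as discrete stops. The stepped schedule simply mirrors the switch-by-switch process used in the demo.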

The Kinect has been used as a 3D scanner like this before, but that time it was scanning salable goods rather than people.