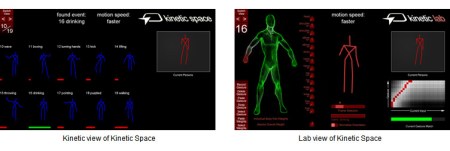

For all of you who have wanted to use a Kinect to control something but had no idea what to do with it, or how to get data from it, you’re in luck. Kinetic Space is a tool available for Linux, Mac, and Windows that gives you what you need to set up gesture controls quickly and easily. As you can see in the video below, it is fairly simple to set up: you perform your action, set the amount of influence from each body part (basically telling it what to ignore), and save the gesture. This system has already been used for tons of projects and has now hit version 2.0.
