[Maya Posch] wrote up an insightful, and maybe a bit controversial, piece on the state of consumer goods design: The Death Of Industrial Design And The Era Of Dull Electronics. Her basic thesis is that the “form follows function” aesthetic has gone too far, and that the functionally equivalent devices in our lives now all look exactly the same. Take the cellphone, for example. Every one is a slab of screen with a tiny bezel, if any. They are non-objects, meant to disappear, instead of showcases for cool industrial design.

Of course this is an extreme example, and the comments section went wild on this one. Why? Because we all want the things we build to be both beautiful and functional, and those two goals have always been in tension. So even if you agree with [Maya] about the suppression of designed form in consumer goods, you have to admit that it’s not universal. For instance, no two of our houses look alike, even though they all serve exactly the same purpose. (Ironically, architecture is where the “form follows function” fetish came from.) Cars sit somewhere in between, and maybe the cellphone is the opposite end of the spectrum from architecture. There is plenty of room for both form and function in this world.

But consider the smartphone case – the thing you’ve got around your phone right now. In a world where people carry the ultimate homogeneous device in their pocket, one for which slimness is a prime selling point, nearly everyone has added a few millimeters of aftermarket thickness in the form of a decorative case. Ironically, it’s this horrendous sameness of every cellphone that makes us want to ornament them, even if that means sacrificing the thickness specs.

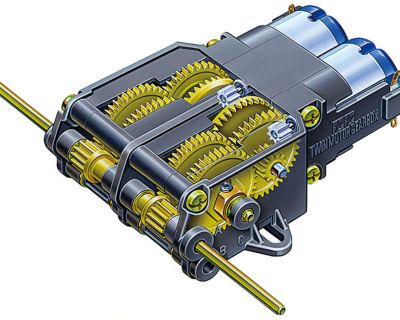

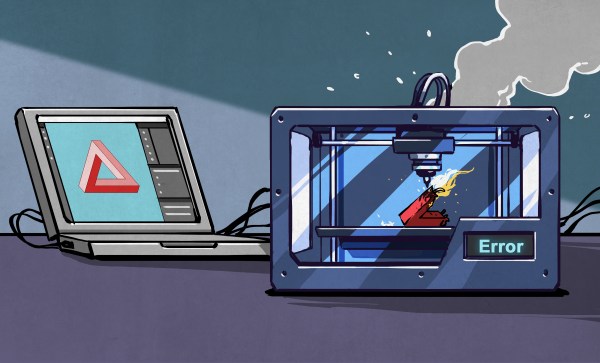

Is this the same impetus that gave us the cyberdeck movement? The custom mechanical keyboard? All kinds of sweet hacks on consumer goods? The need to make things your own and personal is pretty much universal, and maybe even a better example of what we want out of nice design: a device that speaks to you directly because it represents your work.

Granted, buying a phone case isn’t necessarily creative in the same way as hacking a phone is, but it at least lets you exercise a bit of your own design impulse. And it frees the designers from having to make a super-personal choice like this for you. How about a “nothing” design that affords easy personalized ornamentation? Has the slab smartphone solved the form-versus-function fight after all?