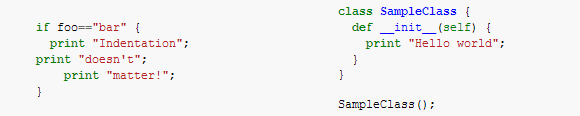

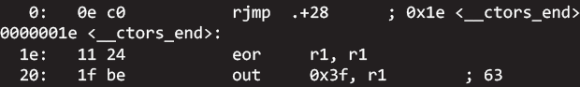

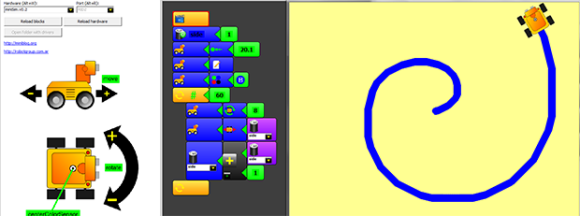

The Arduino IDE mostly draws the ire of actual EEs and People Who Know Better™, but if you’re teaching robotics and programming to kids, even something as simple as a text editor with a ‘compile’ button is more than you want. For that educational feat, a graphical system is much better suited. [Julián] has been working for months to build such a tool, and now miniBloq, the graphical programming tool for just about every dev board out there, has a new release.

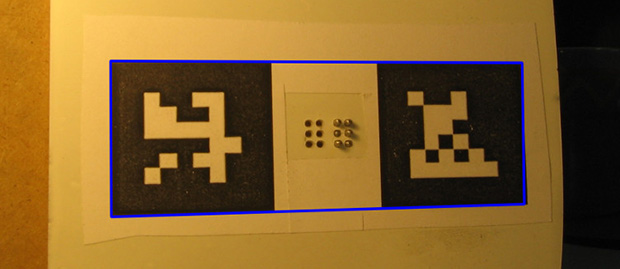

The idea of a graphical programming language for robotics has been done before, most memorably with the Lego Mindstorms programming interface. That was closed source, of course, and only worked with the magical Lego brick that allowed you to attach motors and sensors to a child’s creation. miniBloq takes the same idea and lets the same programming environment work with dozens of dev boards for robots of every shape and size. Already, the Pi-Bot, SparkFun RedBot, Maple, Multiplo DuinoBot, and anything based on an Arduino Leonardo work with miniBloq, as will any future dev boards that understand C/C++, Python, or JavaScript. It’s not just for powering motors, either: there are a few Python and OpenCV tutorials that demonstrate how a robot can track a colored object with a camera.
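To get a feel for what color tracking boils down to, here is a minimal sketch in plain Python. It is not taken from the miniBloq tutorials and does not use OpenCV; the function names, the thresholds, and the tiny hand-made "frame" are all assumptions for illustration. The idea is simply: pick out pixels that match the target color, find their centroid, and steer toward it.

```python
# Hypothetical sketch of color tracking: threshold, centroid, steer.
# A real robot would get its frame from a camera (e.g. through OpenCV);
# here a frame is just a list of rows of (r, g, b) tuples.

def is_target(pixel, min_red=200, max_other=80):
    """True if the pixel looks 'red enough'. Thresholds are assumed values."""
    r, g, b = pixel
    return r >= min_red and g <= max_other and b <= max_other

def find_centroid(frame):
    """Return the (x, y) centroid of matching pixels, or None if none match."""
    sum_x, sum_y, count = 0, 0, 0
    for y, row in enumerate(frame):
        for x, pixel in enumerate(row):
            if is_target(pixel):
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)

def steering(frame, dead_band=0.5):
    """Turn the centroid's horizontal offset from center into a command."""
    centroid = find_centroid(frame)
    if centroid is None:
        return "search"  # nothing of the target color in view
    center_x = (len(frame[0]) - 1) / 2
    offset = centroid[0] - center_x
    if offset < -dead_band:
        return "left"
    if offset > dead_band:
        return "right"
    return "forward"

RED = (255, 0, 0)
BLACK = (0, 0, 0)

# A 5x3 test frame with a red blob on the right-hand side.
demo_frame = [
    [BLACK, BLACK, BLACK, BLACK, BLACK],
    [BLACK, BLACK, BLACK, RED, RED],
    [BLACK, BLACK, BLACK, RED, RED],
]

print(steering(demo_frame))
```

With the blob sitting right of center, the sketch asks the robot to turn right. Swapping the nested loops for a proper image library is what makes this practical on real camera frames.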

The current version of miniBloq can be downloaded from the gits, with versions available for Windows and *nix. The IDE is written with wxWidgets, so it could also be ported to OS X fairly easily.