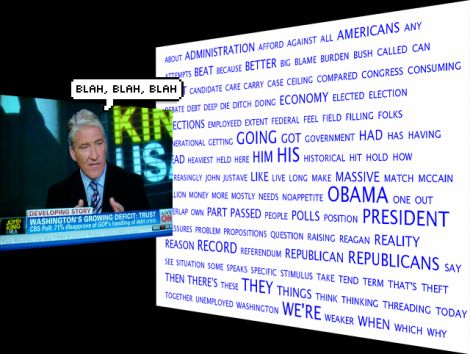

Personal head-up displays are a technology whose time ought by now to have come, but which, notwithstanding attempts such as Google Glass, have steadfastly refused to catch on. There’s an intriguing possibility in [Basel Saleh]’s CaptionIt project though, a head-up display that provides captions for everyday situations.

The hardware is a tiny I²C OLED screen with a reflector and a 3D-printed mount attached to a pair of glasses, and it’s claimed that it will work with almost any ARMv7 SBC, including more recent Raspberry Pi boards. It uses the Vosk speech recognition toolkit to transcribe audio from a USB audio device, with the resulting text being displayed on the screen.
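To give a flavour of the last step in that chain, here is a minimal sketch of how recognized text might be fitted onto a very small screen. It is our own illustration and not taken from the CaptionIt code: the `CaptionBuffer` class, the 21-column by 4-row text grid, and the scrolling behaviour are all assumptions. The only thing it relies on from the toolkit is that a Vosk recognizer hands back its final result as a JSON string with a `text` field.

```python
import json
import textwrap
from collections import deque

# Assumed text grid for a small OLED; the real project's layout may differ.
COLS = 21
ROWS = 4


class CaptionBuffer:
    """Hypothetical helper: keeps the most recent caption lines that fit on screen."""

    def __init__(self, cols=COLS, rows=ROWS):
        self.cols = cols
        # A bounded deque drops the oldest line once the screen is full.
        self.lines = deque(maxlen=rows)

    def add_result(self, result_json):
        """Take a Vosk-style result string such as '{"text": "hello"}'.

        Returns True if any new text was added, False for an empty result.
        """
        text = json.loads(result_json).get("text", "").strip()
        if not text:
            return False
        # Break the utterance into lines no wider than the display.
        for line in textwrap.wrap(text, width=self.cols):
            self.lines.append(line)
        return True

    def frame(self):
        """Return the lines to draw, oldest first."""
        return list(self.lines)


if __name__ == "__main__":
    buf = CaptionBuffer()
    buf.add_result('{"text": "so long and thanks for all the fish"}')
    for line in buf.frame():
        print(line)
```

In a real build the JSON strings would come from the recognizer as each utterance finishes, and `frame()` would be handed to whatever routine draws text on the OLED. Both of those ends are left out here because they depend on the audio device and display driver in use.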

The device is shown in action in the video below the break. Without trying it ourselves we can’t comment on its utility, but novelty aside, we can see it having a significant impact as an accessibility aid. It’s as an electronic Babel fish coupled with translation software that we’d most like to see it develop though, so that inadvertent but hilarious international misunderstandings can be shared by all.

Regular readers will know that we’ve brought you plenty of HUD tomfoolery in the past.