While we have all types of displays these days, there’s something special about those that appear to float in the air. This HUD clock from [Kiwi Bushwalker] is one such example.

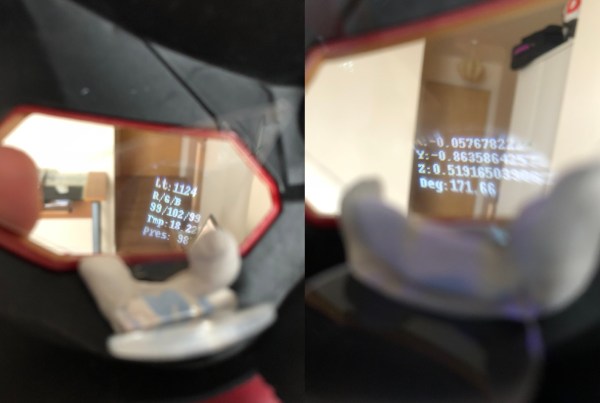

The build relies on four 8×8 LED matrices, each run by a MAX7219 driver chip, to display the four digits that make up the time. However, the LEDs aren’t viewed directly; that would be too simple. Instead, the matrices shine their light up at an angle onto a tilted piece of clear acrylic. This creates a “heads-up display” look where the numbers appear to float in the air. The clock gets accurate time from an NTP server over WiFi, thanks to the ESP32 microcontroller that runs the show.
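The write-up doesn’t show any firmware. What follows is only a minimal sketch of the two data paths described above: turning a network timestamp into four clock digits, and packing register writes for the MAX7219s. It is written in Python for readability, although an ESP32 would more likely run C++. It assumes the four chips are daisy-chained on one SPI bus, as on the common four-in-one matrix modules. The register addresses follow Maxim’s MAX7219 datasheet. The function names, the 24-hour format, and the UTC-offset parameter are hypothetical choices for illustration, not the author’s code.

```python
"""Hypothetical sketch: NTP time -> four digits -> MAX7219 SPI frames."""

# Seconds between the NTP epoch (1900-01-01) and the Unix epoch (1970-01-01).
NTP_UNIX_OFFSET = 2_208_988_800

# MAX7219 register addresses, per the Maxim datasheet.
REG_DIGIT0 = 0x01        # rows 0..7 of a matrix map to registers 0x01..0x08
REG_DECODE_MODE = 0x09
REG_INTENSITY = 0x0A
REG_SCAN_LIMIT = 0x0B
REG_SHUTDOWN = 0x0C
REG_DISPLAY_TEST = 0x0F

NUM_DEVICES = 4  # assumption: one MAX7219 per 8x8 matrix, daisy-chained


def ntp_to_digits(ntp_seconds: int, utc_offset_s: int = 0) -> tuple:
    """Turn an NTP seconds count into (H, H, M, M) digits for a 24-hour clock."""
    unix = ntp_seconds - NTP_UNIX_OFFSET + utc_offset_s
    hours, minutes = divmod((unix % 86_400) // 60, 60)
    return hours // 10, hours % 10, minutes // 10, minutes % 10


def chain_frame(words: list) -> bytes:
    """Pack one (register, data) word per device into the bytes sent between
    two LOAD (CS) pulses. words[0] targets the chip nearest the MCU.

    Each MAX7219 passes overflowing bits on through DOUT, so the word meant
    for the farthest chip has to be shifted out first; hence the reversal.
    """
    out = bytearray()
    for reg, data in reversed(words):
        # High byte (address) first, since the MAX7219 expects D15 first.
        out += bytes((reg & 0x0F, data & 0xFF))
    return bytes(out)


def broadcast(reg: int, data: int, n: int = NUM_DEVICES) -> bytes:
    """Write the same register value to every chip in the chain."""
    return chain_frame([(reg, data)] * n)


def init_frames(intensity: int = 4, n: int = NUM_DEVICES) -> list:
    """Power-up sequence: raw pixel mode, all 8 rows scanned, display on."""
    return [
        broadcast(REG_DISPLAY_TEST, 0x00, n),  # test mode off
        broadcast(REG_SCAN_LIMIT, 0x07, n),    # scan all 8 rows
        broadcast(REG_DECODE_MODE, 0x00, n),   # no BCD decode; raw bit patterns
        broadcast(REG_INTENSITY, intensity & 0x0F, n),
        broadcast(REG_SHUTDOWN, 0x01, n),      # leave shutdown, normal operation
    ]


def row_frame(row: int, patterns: list) -> bytes:
    """Update one pixel row (0-7) on every matrix; patterns[i] is matrix i's 8 bits."""
    return chain_frame([(REG_DIGIT0 + row, p) for p in patterns])
```

On real hardware, each frame would be clocked out over SPI with LOAD/CS held low and then raised to latch it. Turning each digit into eight row patterns needs a font table, which is left out here.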

It’s a straightforward clock build in many ways, but we particularly like the use of the heads-up display technique. It’s almost surprising we don’t see these projects more often, for things like car dashboard displays or bullseyeing womp rats in a T-16 skyhopper. If you’ve been whipping up your own HUD projects, don’t hesitate to let us know via the tipsline!
