[programming4fun] has been playing around with his Kinect-based 3D display and building a holographic WALL-E controllable with a Windows phone. It’s a ‘kid safe’ version of his Terminator personal assistant that has voice control and support for 3D anaglyph and shutter glasses.

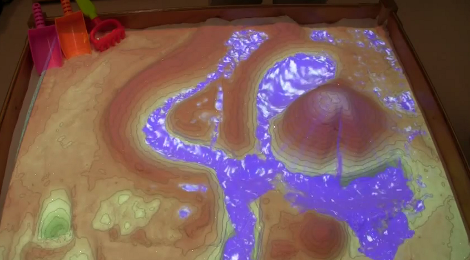

When we saw [programming4fun]’s Kinect hologram setup last summer we were blown away. By tracking a user’s head with a Kinect, [programming4fun] was able to display a 3D image using only a projector. This build was adapted into a 3D multitouch table and real-life portals, so we’re glad to see [programming4fun] refining his code and coming up with some really neat builds.

In addition to robotic avatars catering to your every wish, [programming4fun] also put together a rudimentary helicopter flight simulator controlled by tilting a cell phone. It’s the same DirectX 9 heli from [programming4fun]’s original build, with the addition of Desert Strike-esque top-down graphics. This might be the future of gaming, so we’ll keep our eyes out for similar head-tracking 3D builds.

As always, videos after the break.
