Business cards, on the whole, haven’t changed significantly over the past 600-ish years, and arguably are not as important as they used to be, but they are still worth considering as a reminder for someone to contact you. If the format of the card and the method of contact stand out as unique and relate to your personal or professional interests, you have a winning combination that will cement you in the recipient’s memory.

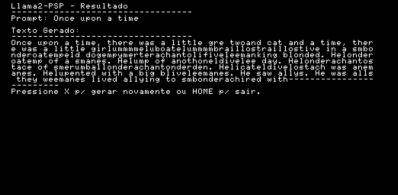

In a case study of “show, don’t tell”, [Binh]’s business card draws on technological and paranormal curiosity, blending affordable, short-run PCB manufacturing and an LLM (or, in this case, a small language model) with a tiny Ouija board. While [Binh] is very much with us in the here and now, and a séance isn’t really an effective way to get hold of him, the interactive Ouija card gives recipients a playful demonstration of his skills.
