Cloudflare has gotten more active in its efforts to identify and block unauthorized bots and AI crawlers that don’t respect boundaries. Its solution? AI Labyrinth, which uses generative AI to efficiently create a diverse maze of data as a defensive measure.

This is an evolution of efforts to thwart bots and AI scrapers that don’t respect things like “no crawl” directives, and which account for an ever-growing amount of traffic. Last year we saw Cloudflare step up its game in identifying and blocking such activity, but the whole thing is akin to an arms race. Those intent on hoovering up all the data they can are constantly shifting tactics in response to mitigations, and simply identifying bad actors with honeypots and blocking them doesn’t really do the job any more. In fact, blocking requests mainly just alerts the baddies to the fact they’ve been identified.

Instead of blocking requests, Cloudflare goes in the other direction and creates an all-you-can-eat sprawl of linked AI-generated content, luring crawlers into wasting their time and resources as they happily process an endless buffet of diverse facts unrelated to the site being crawled, all while Cloudflare learns as much about them as possible.
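To make the idea concrete, here is a minimal sketch of an endless link maze in Python. It is not Cloudflare's implementation: the `/maze/` URL scheme, the fan-out of three links per page, and the function names are all assumptions made for illustration, and where the real system serves AI-generated factual content this sketch just emits placeholder filler. The one trick worth noting is that each page's links are derived from a hash of its own path, so the maze never ends and nothing needs to be stored.

```python
import hashlib
import re
from collections import deque

LINKS_PER_PAGE = 3  # assumed fan-out, chosen only for illustration


def child_paths(path: str, count: int = LINKS_PER_PAGE) -> list[str]:
    """Derive child URLs deterministically from a path, so no state is stored."""
    children = []
    for i in range(count):
        digest = hashlib.sha256(f"{path}#{i}".encode()).hexdigest()[:12]
        children.append(f"/maze/{digest}")  # hypothetical URL scheme
    return children


def render_page(path: str) -> str:
    """Build a decoy page: filler text plus links that lead deeper into the maze."""
    links = "\n".join(
        f'<a href="{child}">Related reading</a>' for child in child_paths(path)
    )
    # Placeholder body; a real decoy would carry plausible but irrelevant content.
    return f"<html><body><p>Filler for {path}</p>\n{links}\n</body></html>"


def simulate_crawler(start: str, max_pages: int) -> int:
    """Breadth-first crawl that follows every link; returns pages fetched."""
    seen = {start}
    queue = deque([start])
    fetched = 0
    while queue and fetched < max_pages:
        path = queue.popleft()
        html = render_page(path)
        fetched += 1
        for link in re.findall(r'href="([^"]+)"', html):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return fetched
```

A crawler that follows every link never runs out of work, because each page it fetches hands it more links than it consumed. Calling `simulate_crawler("/maze/start", 50)` uses up the full budget of 50 pages, and the same would hold for any larger budget.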

That’s an important point: the content generated by the Labyrinth might be pointless and irrelevant, but it isn’t nonsense. After all, it can plausibly end up in training data, and fabricated data would add to the amount of misinformation online as a side effect. For that reason, the human-looking data making up the Labyrinth isn’t wrong, it’s just useless.

It’s certainly a clever method of dealing with crawlers, but the way things are going it’ll probably be rendered obsolete sooner rather than later, as the next move in the arms race gets made.

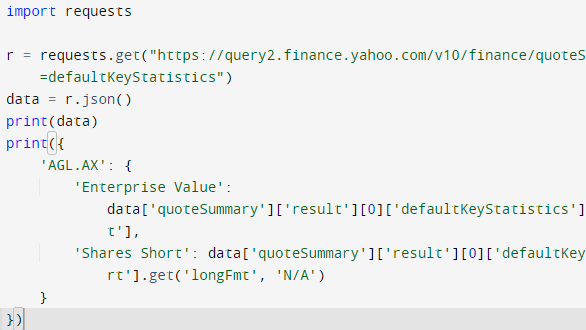

[Doug] shows that while parsing a web page for a specific piece of data (for example, a stock price) is not difficult, there are sometimes easier and faster ways to go about it. In the case of Yahoo Finance, the web page most of us look at isn’t really the actual source of the data being displayed, it’s just a front end.
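The difference between the two approaches can be sketched in Python. Everything below is a made-up stand-in: the HTML snippet, the JSON payload, the `price` class name, and the function names are assumptions for illustration, not Yahoo Finance's actual markup or data format, and not necessarily the method [Doug] uses. The point is only that pulling a value out of page markup takes a stateful parser tied to the page layout, while structured data from the underlying source is a single lookup.

```python
import json
from html.parser import HTMLParser

# Hypothetical front-end markup; real pages are far larger and change often.
SAMPLE_HTML = (
    '<div><span class="ticker">XYZ</span>'
    '<span class="price">123.45</span></div>'
)

# Hypothetical structured data of the kind a front end might load.
SAMPLE_JSON = '{"symbol": "XYZ", "price": 123.45}'


class PriceParser(HTMLParser):
    """Find the text inside the first <span class="price"> element."""

    def __init__(self):
        super().__init__()
        self._in_price = False
        self.price = None

    def handle_starttag(self, tag, attrs):
        if tag == "span" and dict(attrs).get("class") == "price":
            self._in_price = True

    def handle_data(self, data):
        if self._in_price and self.price is None:
            self.price = float(data)

    def handle_endtag(self, tag):
        if tag == "span":
            self._in_price = False


def price_from_html(html: str) -> float:
    """Scrape the price out of page markup; breaks if the layout changes."""
    parser = PriceParser()
    parser.feed(html)
    parser.close()
    if parser.price is None:
        raise ValueError("price element not found")
    return parser.price


def price_from_json(payload: str) -> float:
    """Read the price from structured data; no knowledge of the layout needed."""
    return float(json.loads(payload)["price"])
```

Both `price_from_html(SAMPLE_HTML)` and `price_from_json(SAMPLE_JSON)` return `123.45`, but only the first depends on tag names and class attributes that a site redesign could change.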
