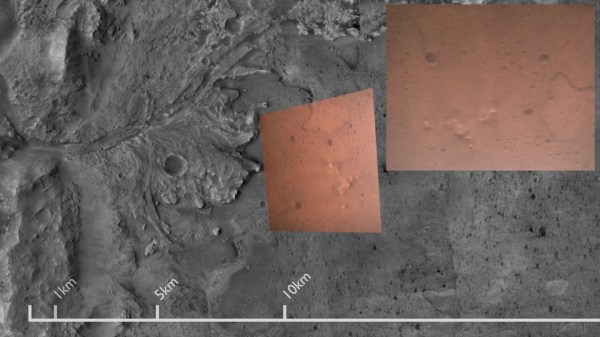

It’s always fun to look over aerial and satellite maps of places we know, seeing a perspective different from our usual ground-level view. We lose that context when it’s a place we don’t know by heart. Such as, say, Mars. So [Matthew Earl] sought to give the Perseverance rover’s landing video some context by projecting it onto orbital imagery from ESA’s Mars Express. The resulting video (embedded below the break) is a fun watch alongside the technical writeup, “Reprojecting the Perseverance landing footage onto satellite imagery”.

Some telemetry of rover position and orientation was transmitted live during the landing process, with the rest recorded and downloaded later. Surprisingly, none of that information was used for this project, which was based entirely on video pixels. This makes the results even more impressive and the techniques more widely applicable to other projects. The foundational piece is SIFT (Scale-Invariant Feature Transform), which is one of many tools in the OpenCV toolbox. SIFT found correspondences between Perseverance’s video frames and the Mars Express orbital image, feeding into a processing pipeline written in Python, with the results rendered in Blender.
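For a feel of how feature descriptors from a video frame get paired with descriptors from an orbital image, here is a minimal pure-Python sketch of Lowe's ratio test, a filtering step commonly applied to SIFT matches. It is an illustration only, not necessarily what the writeup does: the function name `ratio_test_matches`, the 0.75 threshold, and the toy 3-D descriptors are all assumptions made for the example. In OpenCV, the descriptors would typically come from `cv2.SIFT_create()` and the two nearest neighbours from a matcher's `knnMatch(..., k=2)`.

```python
import math


def ratio_test_matches(query_desc, train_desc, ratio=0.75):
    """Return (query_index, train_index) pairs that pass Lowe's ratio test.

    A match is kept only if the nearest descriptor is clearly closer than
    the second nearest, which discards ambiguous correspondences.
    The name and the default ratio are illustrative assumptions.
    """
    matches = []
    for qi, q in enumerate(query_desc):
        # Euclidean distance from this query descriptor to every candidate.
        dists = sorted((math.dist(q, t), ti) for ti, t in enumerate(train_desc))
        if len(dists) < 2:
            continue  # the ratio test needs at least two candidates
        (best, best_idx), (second, _) = dists[0], dists[1]
        if best < ratio * second:
            matches.append((qi, best_idx))
    return matches


# Toy 3-D descriptors standing in for real 128-D SIFT descriptors.
frame_desc = [(0.0, 0.0, 1.0), (1.0, 1.0, 0.0), (0.5, 0.5, 0.5)]
orbital_desc = [
    (0.0, 0.1, 1.0),   # close to frame descriptor 0
    (1.0, 0.9, 0.0),   # close to frame descriptor 1
    (5.0, 5.0, 5.0),   # unrelated feature
    (0.6, 0.5, 0.5),   # two near-identical candidates for frame
    (0.4, 0.5, 0.5),   # descriptor 2, which makes that match ambiguous
]

matches = ratio_test_matches(frame_desc, orbital_desc)
print(matches)
```

The third frame descriptor has two almost equally good candidates, so it is dropped rather than guessed at. Surviving pairs like these are the kind of input a later stage could use to estimate how a frame maps onto the orbital image.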

While many elements of this project sound enticing for applications in robot vision, there are a few challenges touched upon in the “Final Touches” section of the writeup. The falling heatshield interfered with automated tracking, implying this process will need help to properly understand dynamically changing environments. Furthermore, it does not seem to run fast enough for a robot’s real-time needs. But at first glance, these problems are not fundamental. They merely await some motivated people to tackle them.

This process bears some superficial similarities to projection mapping, which is a category of projects we’ve featured on these pages. Except everything is reversed (camera instead of video projector, etc.), making the math an entirely different can of worms. But if projection mapping sounds more to your interest, here is a starting point.

[via Dr. Tanya Harrison @TanyaOfMars]
