The Voynich Manuscript is a medieval codex written in an unknown alphabet and is replete with fantastic illustrations as unusual and bizarre as they are esoteric. It has captured interest for hundreds of years, and expert [Lisa Fagin Davis] shared interesting results from using multispectral imaging on some pages of this highly unusual document.

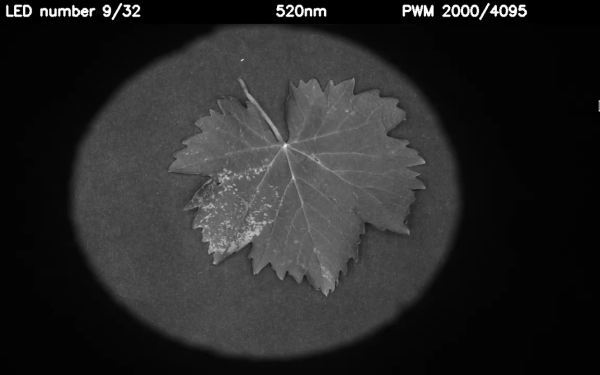

We should make it clear up front that the imaging results have not yielded a decryption key (nor a secret map or anything of the sort), but the detailed write-up and freely downloadable imaging results are fascinating reading for anyone interested in either the manuscript itself or exactly how multispectral imaging is applied to rare documents. Modern imaging techniques might get leveraged into things like authenticating sealed packs of Pokémon cards, but that’s not all they can do.

Because multispectral imaging involves things outside our normal perception, the results require careful analysis rather than intuitive interpretation. Here is one example: multispectral imaging may reveal faded text visible “between the lines” of other text, which invites leaping to conclusions about hidden or erased content. But the faded text could be the result of show-through (content from the opposite side of the page is being picked up) or an offset (when a page picks up ink and pigment from its opposing page after being closed for centuries).

[Lisa] provides a highly detailed analysis of specific pages, and explains the kind of historical context and evidence this approach yields. Make some time to give it a read if you’re at all interested; we promise it’s worth your while.