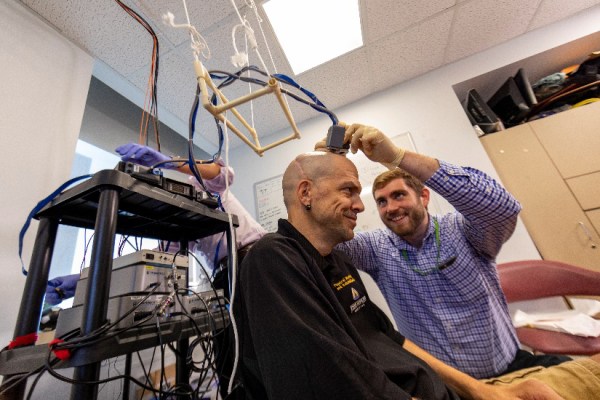

While out swimming in the ocean on vacation, a big wave caught [QuadWorker], pushed him head first into the sand, and left him paralyzed from the neck down. This talk isn’t about injury or recovery, though. It’s about the day-to-day tech that lets him keep living, working, and travelling, albeit in new ways. And it’s a fantastic first-hand look at how assistive technology works for him.

If you can only move your head, how do you control a computer? Surprisingly well! A white dot on [QuadWorker]’s forehead is tracked by a commodity webcam and some software, while two button bumpers to the left and right of his head let him click with a second gesture. For cell phones, a time-dependent scanner app allows him to zero in successively on the X and Y coordinates of where he’d like to press. And naturally voice recognition software is a lifesaver. In the talk, he live-demos sending a coworker a text message, and it’s almost as fast as I could go. Shared whiteboards allow him to work from home most of the time, and a power wheelchair and adapted car let him get into the office as well.
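To make the time-dependent scanning idea concrete, here is a minimal sketch of how a single switch press per axis can pick a screen coordinate. It is not the app from the talk: the function names, the constant sweep speed, and the wrap-around behaviour are all assumptions chosen for illustration.

```python
def sweep_position(elapsed_s, extent_px, speed_px_per_s):
    """Position of a scan line that moves at constant speed and wraps at the edge.

    Hypothetical helper: real scanner apps may bounce, pause, or scan in
    coarse-then-fine passes instead of wrapping.
    """
    return (elapsed_s * speed_px_per_s) % extent_px


def scan_select(press_x_s, press_y_s, width_px, height_px, speed_px_per_s=200.0):
    """Turn two switch-press times into an (x, y) tap target.

    press_x_s: seconds after the horizontal sweep started when the user pressed.
    press_y_s: seconds after the vertical sweep started when the user pressed.
    """
    x = sweep_position(press_x_s, width_px, speed_px_per_s)
    y = sweep_position(press_y_s, height_px, speed_px_per_s)
    return round(x), round(y)


# Example: on an assumed 1080x1920 screen, pressing 1.5 s into the X sweep
# and 4.0 s into the Y sweep selects the point (300, 800).
print(scan_select(1.5, 4.0, 1080, 1920))
```

The trade-off in a scheme like this is speed versus precision: a faster sweep reaches any point sooner but leaves less time to hit the switch accurately.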

The lack of day-to-day independence is the hardest part for him, and he says that the things he misses most are being able to go to the bathroom on his own and to scratch himself when he gets itchy, both of which remain unsolved problems. But other custom home hardware also plays an important part in [QuadWorker]’s setup. For instance, all manner of home automation allows him to control the lights, the heat, and the music in his home. Voice-activated light switches are fantastic when you can’t use your arms.

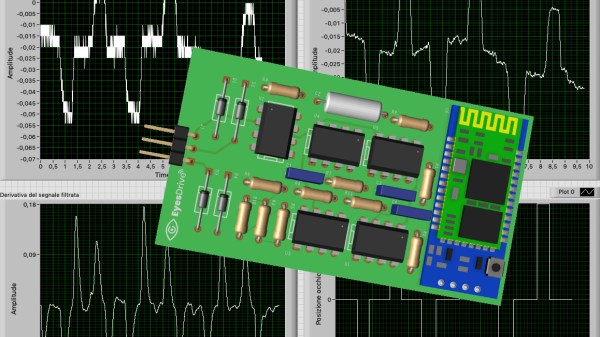

This is a must-watch talk if you’re interested in assistive tech, because it comes direct from the horse’s mouth – a person who has tried a lot, and knows not only what works and what doesn’t, but also what’s valuable. It’s no surprise that the people whose lives most benefit from assistive tech would also be most interested in it, and have their hacker spirit awakened. We’re reminded a bit of the Eyedrivomatic, which won the 2015 Hackaday Prize and was one of the most outstanding projects both from and for the quadriplegic community.
