3D printers have become indispensable in sectors such as biomedicine and manufacturing, where they are often deployed in what is termed a 3D print farm. They help reduce production costs as well as time-to-market. However, a hacker with access to these manufacturing banks can introduce defects such as microfractures and holes that are intended to compromise the quality of the printed component.

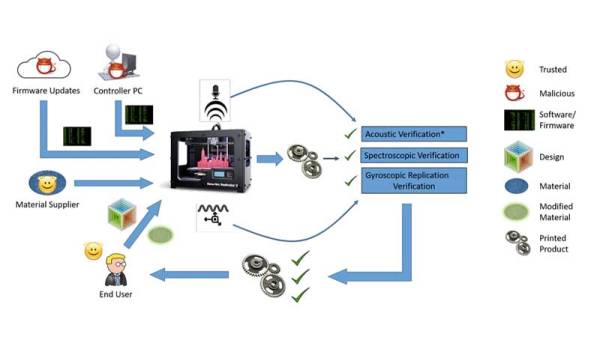

Researchers at Rutgers University-New Brunswick and the Georgia Institute of Technology have published a study on cyber-physical attacks and techniques for detecting them. By monitoring the movement of the extruder using sensors, recording the sounds made by the printer via microphones, and finally using structural imaging, they were able to audit the printing process.

A number of studies have popped up in the last year or so, including papers on remote data exfiltration from Makerbots that discuss the types of defects introduced. In a paper by [Belikovetsky, S. et al] titled ‘dr0wned’, one such attack was documented in which a compromised 3D printed propeller crashed a UAV. In a follow-up paper, they demonstrated Digital Audio Signing to thwart cyber-physical attacks. Check out the video below.

In this new study, the attack is identified by using not only the sound of the stepper motors but also the movement of the extruder. After the part has been manufactured, a CT scan verifies the integrity of the part, completing the audit.
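To give a feel for the idea of auditing a print against a known-good run, here is a minimal sketch. It is not the researchers' method: the function name `find_deviations`, the `tolerance_mm` threshold, and the sample traces are all made up for illustration. It assumes you already have two extruder position traces sampled at the same instants, and simply flags the samples where the observed path strays from the reference.

```python
from math import hypot


def find_deviations(reference, observed, tolerance_mm=0.5):
    """Return indices of samples where the observed extruder position
    strays from the reference path by more than tolerance_mm.

    Hypothetical helper: the 0.5 mm default is an assumed value,
    not a figure taken from the study.
    """
    if len(reference) != len(observed):
        raise ValueError("traces must be sampled at the same instants")
    flagged = []
    for i, ((rx, ry), (ox, oy)) in enumerate(zip(reference, observed)):
        # Euclidean distance between expected and measured XY position
        if hypot(ox - rx, oy - ry) > tolerance_mm:
            flagged.append(i)
    return flagged


# Known-good trace: a straight 10 mm move in X, sampled every 1 mm.
reference = [(float(x), 0.0) for x in range(11)]

# Suspect trace: small sensor noise everywhere, plus a 1 mm detour in Y
# at samples 4 to 6 (standing in for an injected defect).
observed = [
    (x, 1.0 if 4 <= i <= 6 else 0.05)
    for i, (x, _) in enumerate(reference)
]

print(find_deviations(reference, observed))  # prints [4, 5, 6]
```

The same compare-to-reference pattern would apply to an acoustic trace, for example a sequence of dominant stepper frequencies per time window, with a frequency tolerance in place of the distance check.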

Disconnected printers and private networks may be the way to go; however, automation requires connectivity, which is the foundation for a lot of online 3D printing services. The universe of Skynet and Terminators may not be far-fetched either if you consider ambitious projects such as this 3D printed BLDC motor. For now, learn to listen to your 3D printer’s song. She may be telling you a story you should hear.

Thanks for the tip, [Qes].