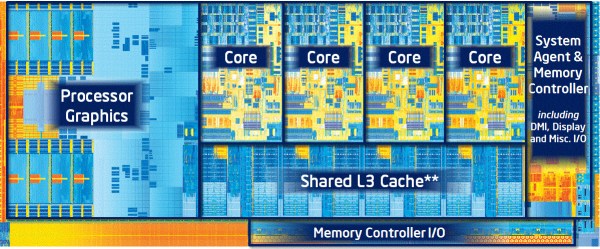

Many readers will be familiar with the idea of a glitching attack: introducing electrical noise into a computer circuit in the hope of disrupting program flow and causing unexpected behaviour, which might expose memory or other system resources that would otherwise be off limits. [David Buchanan] has written a piece investigating glitching attacks on PC memory, and the tool he used is the ubiquitous piezoelectric lighter.

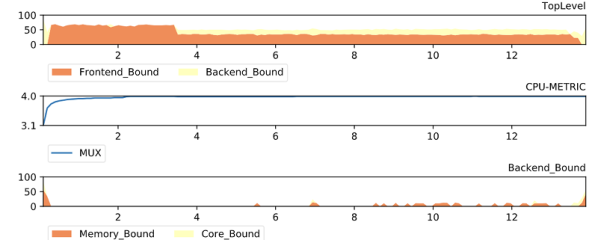

By attaching a short piece of wire to one of the lines on a SODIMM memory module, he can glitch a laptop at will using the electromagnetic noise created by the lighter’s discharge. It’s a cool trick, but the real meat of the write-up lies in his comprehensive description of how virtual memory works, and how a glitch can be used to break out of the “sandbox” of memory allocated to a particular process. He demonstrates it in a video which we’ve placed below the break, in which he gains root access and runs an arbitrary piece of code on a Linux laptop. Probably not many of us will be inclined to try this for ourselves, but even so it’s fascinating to know how such an attack works.
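For readers who want a feel for why a single flipped bit can matter to virtual memory, here is a toy sketch in Python. It is not taken from the write-up: the single-level page table, the `translate` and `flip_bit` helpers, and every address in it are hypothetical, chosen only to show how one inverted bit in a stored frame number sends the same virtual address to a different piece of physical memory.

```python
# Toy model of address translation. The table layout, helper names and
# addresses are all hypothetical; real hardware uses multi-level tables.
PAGE_SHIFT = 12  # 4 KiB pages


def translate(page_table, vaddr):
    """Map a virtual address to a physical one via {virtual page: physical frame}."""
    vpn = vaddr >> PAGE_SHIFT
    offset = vaddr & ((1 << PAGE_SHIFT) - 1)
    return (page_table[vpn] << PAGE_SHIFT) | offset


def flip_bit(value, bit):
    """Simulate a glitch that inverts a single bit of a stored value."""
    return value ^ (1 << bit)


# The process is only supposed to reach physical frame 0x1234.
page_table = {0x7F000: 0x1234}
vaddr = 0x7F000ABC

before = translate(page_table, vaddr)

# A glitch inverts bit 4 of the stored frame number.
page_table[0x7F000] = flip_bit(page_table[0x7F000], 4)
after = translate(page_table, vaddr)

print(hex(before), hex(after))
```

In this toy model the program's view of its own address never changes, yet after the flip it is reading and writing a frame it was never given. Turning that into something useful on real hardware is the hard part, and that is what the write-up covers.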