Wander through a well-funded museum these days and you’re likely to find interactive exhibits scattered around, such as this sleek 50″ projection-based multitouch table. The company responsible for this beauty, Ideum, has discontinued the MT-50 model in favor of an LCD version, and has released the plans for the old model as part of the Open Exhibits initiative. This is a good thing for… well, everyone!

The frame consists of aluminum struts that crisscross through an all-steel body, which sits on casters for mobility. The computer's specs are comparable to a modern gaming rig's, and the system relies on IEEE 1394 (FireWire) inputs for the cameras. The costs start to pile up with the multiple rows of high-intensity infrared LED strips, which can run $200 per roll. The glass is a custom-made, 10mm-thick sheet with projection film on one side, and it is micro-etched to reduce reflections and widen the viewing angle to nearly 180 degrees. The projector is an InFocus IN-1503, which has an impressively short throw ratio and a resolution of 1280×720.

The estimated price tag mentioned in the comments is pretty steep: $12k-16k. Let us know in your own comment which alternative parts might cut the cost, and watch the video overview of the table below, plus a video demonstration of its durability. For another DIY museum build, check out Bill Porter’s “Reaction Time Challenge.”


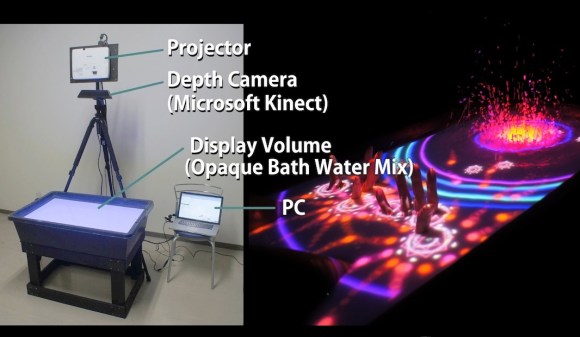

Are you ready to make a utility-sink-sized pool of water the location of your next living room game console? This demonstration is appealing, but maybe not ready for widespread adoption.