We could all use a hug once in a while. Most people would probably say the shared warmth is nice, and the squishiness of another living, breathing meatbag is pretty comforting. Hugs even have health benefits.

But maybe you’re new in town and don’t know anyone yet, or you’ve outlived all your friends and family. Or maybe you just don’t look like the kind of person who goes for hugs, and therefore you don’t get enough embraces. Nearly everyone needs and wants hugs, whether they’re great, good, or just average.

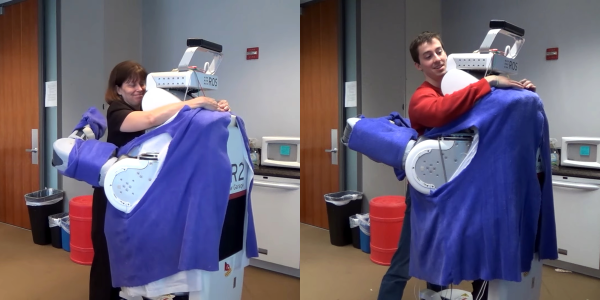

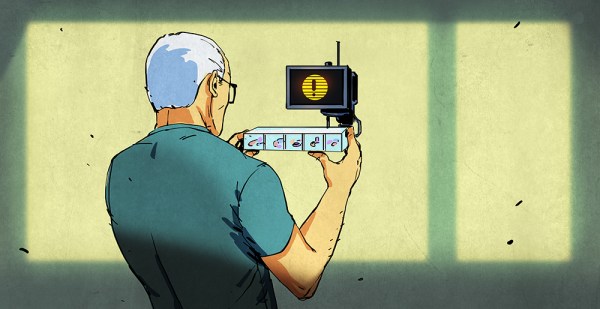

So what makes a good hug, anyway? It’s a bit like a handshake. It should be warm and dry, with a firmness appropriate to the situation. Ideally, you’re both done at the same time and things don’t get awkward. Could a robot possibly check all of these boxes? That’s the idea behind HuggieBot, the haphazardly humanoid invention of Katherine J. Kuchenbecker and team at the Max Planck Institute for Intelligent Systems in Stuttgart, Germany. User feedback helped the team get their arms around the problem.