In the old days, you had a computer and it did one thing at a time. Literally. You would load your cards or punch tape or whatever and push a button. The computer would read your program, execute it, and spit out the results. Then it would go back to sleep until you fed it some more input.

The problem is that computers, especially then, were expensive. And a typical program spent a lot of its time waiting for things like the next punched card to show up or the magnetic tape to get to the right position. In those cases, the computer was figuratively tapping its foot waiting for the next event.

Someone smart realized that the computer could be working on something else while it was waiting, so you should feed more than one program in at a time. When program A is waiting for some I/O operation, program B can make some progress. Of course, if program A didn’t do any I/O, then program B starved, so we invented preemptive multitasking. In that scheme, program A runs until it can’t run anymore or until a preset time limit expires, whichever comes first. If time expires, the program is forced to sleep a bit so program B (and other programs) get their turn. This is how virtually all modern computers outside of tiny embedded systems work.

But there is a difference. Most computers now have multiple CPUs and special ways to quickly switch tasks. The desktop I’m writing this on has 12 CPUs and each one can act like two CPUs. So the computer can run up to 12 programs at one time and have 12 more that can replace any of the active 12 very quickly. Of course, the operating system can also flip programs on and off that stack of 24, so you can run a lot more than that, but the switch between the main 12 and the backup 12 is extremely fast.

So the case is stronger than ever for writing your solution using more than one program. There are a lot of benefits. For example, I once took over a program that did a lot of calculations and then spent hours printing out results. I spun off the printing to separate jobs on different printers and cut like 80% of the run time — which was nearly a day when I got started. But even outside of performance, process isolation is like the ultimate encapsulation. Things you do in program A shouldn’t be able to affect program B. Just like we isolate code in modules and objects, we can go further and isolate them in processes.

Double-Edged Sword

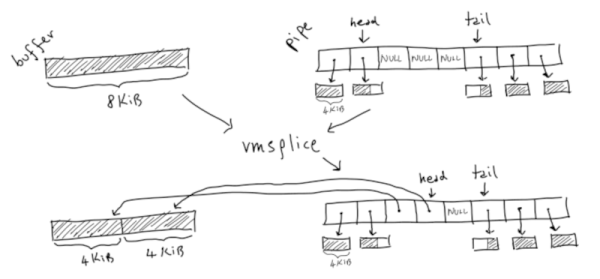

But that’s also a problem. Presumably, if you want to have two programs cooperate, they need to affect each other in some way. You could just use a file to talk between them, but that’s notoriously inefficient. So operating systems like Linux provide IPC, or interprocess communication. Just like you make some parts of an object public, you can expose certain things in your program to other programs.
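As a rough illustration of one IPC mechanism, here is a minimal sketch of an anonymous pipe between a parent and a child process. It is written in Python and assumes a Unix-like system with `os.fork`. The function name `send_through_pipe` is hypothetical, and this is only one of several ways to do it.

```python
import os


def send_through_pipe(message: bytes) -> bytes:
    """Fork a child that writes message into a pipe; return what the parent reads."""
    read_fd, write_fd = os.pipe()  # one end for reading, one for writing
    pid = os.fork()
    if pid == 0:
        # Child process: it only writes, so close the read end first.
        try:
            os.close(read_fd)
            with os.fdopen(write_fd, "wb") as writer:
                writer.write(message)
        finally:
            os._exit(0)  # exit the child without running parent cleanup code
    # Parent process: it only reads, so close the write end.
    # Otherwise the read below would never see end-of-file.
    os.close(write_fd)
    with os.fdopen(read_fd, "rb") as reader:
        data = reader.read()  # returns once the child closes its end
    os.waitpid(pid, 0)  # reap the child so it does not linger as a zombie
    return data


if __name__ == "__main__":
    print(send_through_pipe(b"hello from the child").decode())
```

The data never touches the disk. The kernel buffers it in memory and hands it from the writer to the reader, which is the advantage over passing a file back and forth.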
