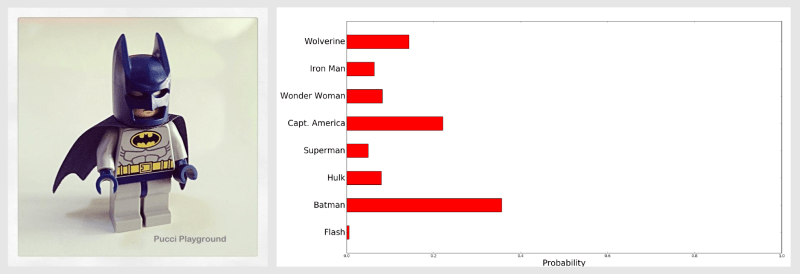

[Massimiliano Patacchiola] wrote this handy guide on using a histogram intersection algorithm to identify different objects. In this case, Lego superheroes. All you need to follow along are eyes, Python, a computer, and a bit of machine learning magic.

He gives a good introduction to the idea. You take a histogram of the colors in a properly cropped and filtered photo of the object you want to identify. You then feed that into a neural network and train it to identify the different superheroes by color. When you feed it a new image later, it will compare the new image’s histogram to its model and output confidences as to which set it belongs to.
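To make the comparison step concrete, here is a minimal sketch of histogram intersection on its own, assuming NumPy and images already loaded as H x W x 3 `uint8` RGB arrays. It is not the guide's code: the function names (`color_histogram`, `histogram_intersection`, `classify`) and the choice of 8 bins per channel are hypothetical, picked for illustration, and the sketch compares histograms directly with no trained network involved.

```python
import numpy as np

def color_histogram(image, bins=8):
    """Return a normalized, flattened 3D RGB histogram of an H x W x 3 uint8 image."""
    pixels = image.reshape(-1, 3).astype(float)
    hist, _ = np.histogramdd(pixels, bins=(bins, bins, bins),
                             range=((0, 256), (0, 256), (0, 256)))
    return hist.ravel() / hist.sum()

def histogram_intersection(h_image, h_model):
    """Sum of bin-wise minima, normalized by the model histogram's total."""
    return np.minimum(h_image, h_model).sum() / h_model.sum()

def classify(image, models, bins=8):
    """Score an image against each model histogram and return the best match."""
    h = color_histogram(image, bins)
    scores = {name: histogram_intersection(h, m) for name, m in models.items()}
    best = max(scores, key=scores.get)
    return best, scores
```

With `models` built as a dictionary mapping each hero's name to the `color_histogram` of a reference photo, `classify(new_image, models)` returns the best-matching name along with a score between 0 and 1 for every model. Cropping and filtering the photos, which the guide stresses, is left out here.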

This is a useful thing to know. While a lot of vision algorithms try to make geometric assertions about the things they see, adding color to the mix can certainly help your friendly robot project recognize friend from foe.

I imagine that as GPUs become more powerful and affordable, machine learning will take its place among our other desktop tools.

Indeed, the array processing offered by contemporary graphics cards, as used in high-end desktop computers ostensibly for gaming, could well be configured for several aspects of machine learning. It's possible a variant of the hardware approach offered by this company may be programmed to be resident predominantly in the GPU firmware, with the PC ending up for the most part being just a means to offer a data repository at a good speed… Here is the company, and I'm disclosing that I am a shareholder. Currently under trials in the USA re casinos; viable also for advanced driverless and other open-ended machine learning paradigms leading into several levels of AI…

http://www.brainchipinc.com/

Hmm, wasn't there a hack project some years ago where a Windows XP-like OS, which is heavily UI/graphics based, was segmented/ported to a graphics card? CUDA, I think. Such that the PC ended up mostly handling files and input/output, i.e. the GPU handled all the windows, scaling, graphics functions re parent/child and the bulk of those OOP processes, to allow the main CPU to handle database searches, comms and other tasks more suited to its SISD/SIMD data vs the GPU’s array MIMD etc…?

Maybe it was just a dream. It can be tricky, as I understand the GPU/graphics card chip designers mostly keep their code architecture under wraps re their programming models for gamers. That makes it hard (well, other than BOINC etc.) for enthusiastic hackers to move a Windows-type user interface to run predominantly on the GPU…

Until fairly recently, the asymmetry of GPU/CPU communications is what made running an OS on a GPU impractical. Machine learning provides an incentive both for more on-board memory and for more symmetrical communications.

“Neural Nets”

http://puu.sh/sNLPe/0cc418f9cc.jpg

:D :D :D Thanks for the morning laugh.

I think it would be interesting to go a bit deeper into the use of Machine Learning/Neural Networks in safety applications and how to determine what is sufficient software testing with this level of abstraction.