It’s true what they say — you never know what you can do until you try. Russell Kirsch, who developed the first digital image scanner and subsequently invented the pixel, was a firm believer in this axiom. And if Russell had never tried to get a picture of his three-month-old son into a computer back in 1957, you might be reading Hackaday in print right now. Russell’s work laid the foundation for the algorithms and storage methods that make digital imaging what it is today.

Russell A. Kirsch was born June 20, 1929 in New York City, the son of Russian and Hungarian immigrants. He got quite an education, beginning at the Bronx High School of Science. He then earned a bachelor’s degree in Electrical Engineering from NYU and a Master of Science from Harvard, and also attended American University and MIT.

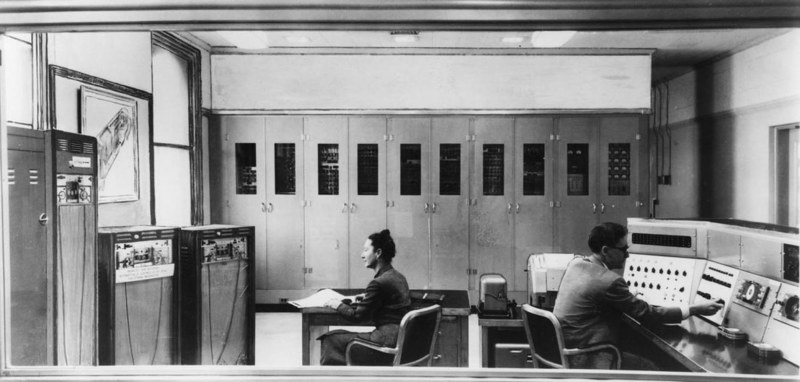

In 1951, Russell went to work for the National Bureau of Standards, now known as the National Institute of Standards and Technology (NIST). He spent nearly 50 years at NIST, and started out by working with one of the first programmable computers in America, SEAC (Standards Eastern Automatic Computer). This room-sized computer, built in 1950, was developed as an interim solution for the Census Bureau to do research (PDF).

Like the other computers of its time, SEAC spoke the language of punch cards, mercury memory, and wire storage. Russell Kirsch and his team were tasked with finding a way to feed pictorial data into the machine without any prior processing. Since the computer was supposed to be temporary, its use wasn’t as tightly controlled as that of other computers. Although it ran 24/7 and got plenty of use, SEAC was more accessible than other computers, which left time for bleeding-edge experimentation. NIST ended up keeping SEAC around for the next thirteen years, until 1963.

The Original Pixel Pusher

The term ‘pixel’ is a shortened portmanteau of picture element. Technically speaking, pixels are the unit of length for digital imaging. Pixels are building blocks for anything that can be displayed on a computer screen, so they’re kind of the first addressable blinkenlights.

In 1957, Russell brought in a picture of his son Walden, which would become the first digital image (PDF). He mounted the photo on a rotating drum scanner that had a motor on one end and a strobing disk on the other. The drum was coupled to a photo-multiplier vacuum tube that traveled along a lead screw. Photo-multipliers are used to detect very low levels of light.

As the drum slowly rotated, the photo-multiplier moved back and forth, scanning the image through a square viewing hole in the wall of a box. The tube digitized the picture by transmitting ones and zeros to SEAC that described what it saw through the square viewing hole: 1 for white, and 0 for black. The digital image of Walden is 176 x 176 pixels, which was the maximum allowed by SEAC.

Variable-Shaped Pixels

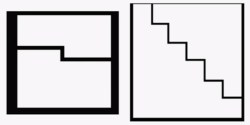

If Russell Kirsch had any regret, it was that he designed pixels to be square. Ten years ago, at the age of 81, he started working on variable-shaped pixels in the hope of improving the future of digital imaging. He wrote a LISP program to explore the idea, and simulated triangular and rectangular pixels using a 6×6 array of square pixels for each.
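The idea can be sketched in a few lines of Python. This is not Kirsch’s LISP program; it is a minimal illustration assuming a 6×6 block of grayscale values, four hypothetical ways of splitting it into two halves (two rectangular splits and two diagonal, roughly triangular ones), and one flat value per half. The split with the smallest squared error wins, and its error can be compared with that of a single square pixel covering the whole block.

```python
def split_masks(n):
    """Hypothetical two-part splits of an n x n block (True = first half)."""
    return {
        "vertical": [[c < n // 2 for c in range(n)] for r in range(n)],
        "horizontal": [[r < n // 2 for c in range(n)] for r in range(n)],
        "diag_main": [[c > r for c in range(n)] for r in range(n)],
        "diag_anti": [[r + c < n - 1 for c in range(n)] for r in range(n)],
    }


def mean(values):
    return sum(values) / len(values)


def squared_error(values, target):
    return sum((v - target) ** 2 for v in values)


def fit_block(block):
    """Return (split name, value of first half, value of second half, error)
    for the split that best represents the block with two flat values."""
    n = len(block)
    best = None
    for name, mask in split_masks(n).items():
        first = [block[r][c] for r in range(n) for c in range(n) if mask[r][c]]
        second = [block[r][c] for r in range(n) for c in range(n) if not mask[r][c]]
        a, b = mean(first), mean(second)
        err = squared_error(first, a) + squared_error(second, b)
        if best is None or err < best[3]:
            best = (name, a, b, err)
    return best


def square_pixel_error(block):
    """Error of representing the whole block with one flat square pixel."""
    values = [v for row in block for v in row]
    return squared_error(values, mean(values))


# A 6x6 block whose upper-right triangle is white and the rest black.
edge = [[255 if c > r else 0 for c in range(6)] for r in range(6)]
print(fit_block(edge))           # the diagonal split reproduces it exactly
print(square_pixel_error(edge))  # a single square pixel cannot
```

In this toy model each block costs a split choice plus two values, which is where the savings over storing all 36 values come from; how many splits are worth allowing is the trade-off.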

In the video below, Russell discusses the idea and demonstrates that variable pixels make a better image, carrying more information than square pixels do while using significantly fewer pixels overall. It takes some finagling, as pixel pairs of triangles and rectangles must be carefully chosen, rotated, and mixed together to best represent the image, but the image quality is definitely worth the effort. Following that is a video of Russell discussing SEAC’s hardware.

Russell retired from NIST in 2001 and moved to Portland, Oregon. As of 2012, he could be found in the occasional coffeehouse, discussing technology with anyone he could engage. Unfortunately, Russell developed Alzheimer’s and died from complications on August 11, 2020. He was 91 years old.

Never heard of wire storage. I’m guessing the cabinets on the left with the loops drooping down were the wire storage, where a loop of magnetic wire acted like a long shift register memory.

Reverb springs, essentially, with magnetostrictive transducers on the ends. Those drooping things in the photo are magnetic tape memory in an endless loop, not unlike an early-model Echoplex. Woe betide you if they jammed.

You are conflating reverb springs with tape delay, and they are completely different technologies.

This seems more like an audio wire recorder, which works like recording tape but with a spool of wire in place of tape.

SEAC used acoustic mercury delay lines as main memory and recording wire (dictating machine technology) as long-term storage.

https://en.wikipedia.org/wiki/Delay_line_memory
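To make the “long shift register” idea from this thread concrete, here is a toy Python model of a recirculating delay line. It does not model SEAC’s actual hardware: the word count and word size are arbitrary assumptions, and the class name is hypothetical. Bits travel around the loop, and a word can only be read or written when it passes the head, so access time depends on where the word currently is in the loop.

```python
from collections import deque


class DelayLineMemory:
    """Toy recirculating delay line: words circulate past a single
    read/write head at the front of the line."""

    def __init__(self, words, bits_per_word):
        self.words = words
        self.bits = bits_per_word
        self.line = deque([0] * (words * bits_per_word))
        self.position = 0  # address of the word currently at the head
        self.ticks = 0     # word-times spent waiting for data to come around

    def _advance(self):
        # Move one word's worth of bits past the head and back into the loop.
        self.line.rotate(-self.bits)
        self.position = (self.position + 1) % self.words
        self.ticks += 1

    def _wait_for(self, address):
        while self.position != address:
            self._advance()

    def write(self, address, value):
        self._wait_for(address)
        for i in range(self.bits):
            self.line[i] = (value >> i) & 1  # least significant bit first

    def read(self, address):
        self._wait_for(address)
        return sum(self.line[i] << i for i in range(self.bits))


mem = DelayLineMemory(words=8, bits_per_word=16)
mem.write(3, 1234)
mem.write(5, 42)
print(mem.read(3), mem.read(5), mem.ticks)  # 1234 42 13
```

Reading word 3 right after writing word 5 costs six word-times, because the data has to travel almost all the way around the loop again.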

A pixel doesn’t really have a shape, since by itself it’s not meaningful. For example, in order to resolve a point, you need to have a contrast between a dark and a light area, which already implies more than one pixel. To represent any shape, you need a multitude of points, and a multitude of pixels, so the pixel itself does not meaningfully have a shape. The pixel as a square tile is just an artifact of the computer display – it is not what the pixel really represents, but simply a representation of a pixel.

What a pixel does have is distribution and density. When you place the pixels in a square grid, you get “square” pixels, but they may just as well be skewed with an offset of 1/2 every other row, or have different sizes, or have a random distribution and size like in a film photograph.

That’s why it’s a bit strange to talk about variable shape pixels. Sure, you can interpret them that way, but what you’re really doing is just shifting the underlying distribution of pixels to better capture the shape of interest. If you start with a square grid, you’re essentially creating an offset map for each pixel to shift them slightly “out of place” in a way that is optimal for that particular picture, and then later re-creating that offset on a display that is capable of much finer resolution. That way you can slightly increase the information density near edges and reduce it over areas which don’t have interesting detail. This allows you to use fewer pixels for the same overall image fidelity.

Only, this is what JPEG and the like already do, in a sense. They take pictures and break them down into 8×8 or 16×16 pixel blocks, then represent the content of each block as an average offset value and a distribution deviating from that offset, so you get a gradient in one direction, or a point in the middle, etc. You can think of it as if each of these blocks was actually a single pixel that merely contains additional information about where the intensity and color value of that pixel is in relation to its place in the larger pixel grid.
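The “average offset value” in this description corresponds to the DC coefficient of the 8×8 discrete cosine transform that baseline JPEG applies to each block; the remaining AC coefficients describe how the block deviates from that average (gradients, edges, spots). A minimal pure-Python sketch of that transform follows. It leaves out the rest of JPEG (level shift, quantization, zig-zag ordering, entropy coding) and is written for clarity, not speed.

```python
import math

N = 8


def dct_8x8(block):
    """2-D DCT-II of an 8x8 block with the orthonormal scaling that matches
    the JPEG forward DCT."""
    def scale(k):
        return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)

    coeffs = [[0.0] * N for _ in range(N)]
    for u in range(N):          # vertical frequency
        for v in range(N):      # horizontal frequency
            total = 0.0
            for y in range(N):
                for x in range(N):
                    total += (block[y][x]
                              * math.cos((2 * y + 1) * u * math.pi / (2 * N))
                              * math.cos((2 * x + 1) * v * math.pi / (2 * N)))
            coeffs[u][v] = scale(u) * scale(v) * total
    return coeffs


# A block that brightens from left to right.
ramp = [[10 * x for x in range(N)] for _ in range(N)]
c = dct_8x8(ramp)
mean = sum(map(sum, ramp)) / (N * N)
print(round(c[0][0], 6), 8 * mean)  # DC term is 8 x the block average
print(round(c[0][1], 2))            # strong horizontal-gradient term
print(max(abs(c[u][v]) for u in range(1, N) for v in range(N)) < 1e-9)
```

Because the ramp has no vertical variation, every coefficient with a vertical frequency above zero comes out (numerically) zero; the whole block is described by its average plus a few horizontal terms.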

If someone wants to try the idea, I suggest taking a high resolution photograph and re-interpreting it as a hexagonal grid of points, where instead of treating each point as a discrete area, you treat them as a weighted distribution around that point, so that the sum of equidistant point values becomes the average of their values. For any arbitrary location or area over that grid, you take the closest point values that apply there and calculate their mean.

When you render a picture so encoded onto a square pixel grid monitor, you can scale it up and down to different sizes and it should never become “pixelated” – only more or less blurry. To display it, you place a square pixel on top of the grid and calculate the average of all the data points which have influence inside the square. If you’re being extra clever, you can do sub-pixel rendering by giving more or less emphasis on points to the left, center and right as you calculate each color channel.
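The proposal above can be tried with a small Python sketch. Everything here is an assumption made for illustration: the function names are hypothetical, the image is a list of rows of grayscale values, each lattice point simply takes the source value nearest to it, and the “weighted distribution” is modeled as a linear falloff within a fixed radius. Rendering onto square pixels then means evaluating that weighted mean at each output pixel’s center, which works the same way whether the output is smaller or larger than the source.

```python
import math


def hex_grid(width, height, spacing):
    """Points of a hexagonal lattice covering [0, width] x [0, height]:
    rows are sqrt(3)/2 * spacing apart, and every other row is shifted
    by half a spacing."""
    row_height = spacing * math.sqrt(3) / 2
    points, row = [], 0
    while row * row_height <= height:
        y = row * row_height
        x = spacing / 2 if row % 2 else 0.0
        while x <= width:
            points.append((x, y))
            x += spacing
        row += 1
    return points


def encode(image, spacing):
    """Sample a grayscale image (list of rows) at each lattice point."""
    h, w = len(image), len(image[0])
    return [(x, y, image[round(y)][round(x)])
            for x, y in hex_grid(w - 1, h - 1, spacing)]


def value_at(samples, px, py, radius):
    """Weighted mean of the samples within `radius` of (px, py). Weights fall
    off linearly with distance, so equidistant samples count equally. If no
    sample is in range, fall back to the nearest one."""
    total = weight_sum = 0.0
    for x, y, v in samples:
        d = math.hypot(x - px, y - py)
        if d < radius:
            w = 1 - d / radius
            total += w * v
            weight_sum += w
    if weight_sum:
        return total / weight_sum
    return min(samples, key=lambda s: math.hypot(s[0] - px, s[1] - py))[2]


def render(samples, out_w, out_h, pixel_size, radius):
    """Render onto square pixels: each output pixel covers pixel_size source
    units and takes the weighted mean at its center."""
    return [[value_at(samples,
                      (i + 0.5) * pixel_size - 0.5,
                      (j + 0.5) * pixel_size - 0.5,
                      radius)
             for i in range(out_w)]
            for j in range(out_h)]


# Left half dark, right half bright, sampled on a lattice 2 pixels apart.
image = [[0 if x < 8 else 255 for x in range(16)] for _ in range(16)]
samples = encode(image, spacing=2)
small = render(samples, 8, 8, pixel_size=2, radius=3)      # half size
large = render(samples, 32, 32, pixel_size=0.5, radius=3)  # double size
```

At double size the dark/bright edge turns into a short gradient rather than a staircase of enlarged blocks, which is the “more or less blurry, never pixelated” behavior described above. Sub-pixel rendering would add per-channel offsets to the sample position and is not shown.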

Pixels are square, dude. Just look at them.

I repeat myself: “The pixel as a square tile is just an artifact of the computer display – it is not what the pixel really represents, but simply a representation of a pixel.”

Earlier CRT monitors did not have square pixels. Instead, the aperture mask projected three dots in a triangle pattern, and depending on the alignment of the beam and the display resolution, one or many such triangle patterns were used to represent one pixel.

In effect, a pixel was not square but a fuzzy blob. The modern habit of making “retro” games look all blocky is not an accurate representation of earlier display technology.

https://imgur.com/40hvQlg

These “First xyz…” stories often seem to be kinda fuzzy claims. The telefax (and later radiofax) has been around since the 1840s. So it would seem that this article is about the first creation of a picture element (pixel) structure stored digitally. Is that the crux of the claim?

Those systems were analog and had no storage.

So, I’m right? Asking for a friend.

Not quite–those systems were analog, not digital. They were transmitted in a manner very similar to the way that analog television or slow-scan television is transmitted now.

Digital fax systems didn’t hit the market until the 1960s, and even now there are still analog fax systems in operation.

The crucial differentiator, though, is *digital* imaging. Much like how analog cameras predated digital ones by over a hundred years.

No we wouldn’t be “reading Hackaday in print right now.” If Russell Kirsch didn’t come up with this idea somebody else would have. Pointillism goes back to 1886 so it’s not really anything new.

Cross stitch embroidery looks quite like old computer graphics.

I would consider “AP Wirephoto” (facsimile) to be a “digital” photograph, and that was done years before 1957.

HaD had a story on those a long time ago.

I thought wirephoto was analog? Basically an audio recording that can be fed into a printer.

It was.

But the Hellschreiber, from the 1920s, is digital. It used a 7×7 pixel grid to send characters over telephone lines or radio to a ticker tape printer at the receiving end.

https://hackaday.com/2015/12/30/messages-from-hell-human-signal-processing/
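A rough sketch of the idea in Python, assuming the 7×7 grid described above and a column-by-column scan from bottom to top. The glyph and function names are made up for illustration and are not taken from a real Hellschreiber font or specification. The point is that the transmitter only ever sends on/off cells, which the receiver paints back in the same order.

```python
# Hypothetical 7x7 glyph for the letter "H" ("#" = ink). Real Hellschreiber
# fonts differ; this shape is invented for illustration.
GLYPH_H = [
    "#.....#",
    "#.....#",
    "#.....#",
    "#######",
    "#.....#",
    "#.....#",
    "#.....#",
]


def hell_encode(glyph):
    """Scan the glyph column by column, bottom to top, producing 1 (tone on)
    or 0 (tone off) for each cell."""
    rows = len(glyph)
    bits = []
    for col in range(len(glyph[0])):
        for row in range(rows - 1, -1, -1):
            bits.append(1 if glyph[row][col] == "#" else 0)
    return bits


def hell_decode(bits, rows=7):
    """Rebuild the glyph at the receiver: each run of `rows` bits is one
    column, painted from the bottom up."""
    cols = [bits[i:i + rows] for i in range(0, len(bits), rows)]
    return ["".join("#" if cols[c][rows - 1 - r] else "." for c in range(len(cols)))
            for r in range(rows)]


bits = hell_encode(GLYPH_H)
print(bits[:14])
print("\n".join(hell_decode(bits)))
```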

It was, but the Hellschreiber invented in the 1920s was digital, sending 7×7 pictures of characters over to a ticker tape printer as an early fax machine.

The Hellschreiber was not digital, though; it was electromechanical. Recording/decoding telegraphs were a precursor to actual digital imaging, but they were not digital devices.

Something being electromechanical doesn’t mean it isn’t digital. The Zuse Z1 (1936) was an electromechanical (mostly mechanical) computer, but it was fully digital, and “contained almost all the parts of a modern computer, i.e. control unit, memory, micro sequences, floating-point logic, and input-output devices”: https://en.wikipedia.org/wiki/Z1_(computer)

So, in short: he actually wrote a compression algorithm built on 6×6 or 8×8 grids of pixels. Each grid is set to the closest representation of its color subsets: it is split into two “half” pixels of a certain shape, turned into one of four rotations, and each half of those “pixel groups” is assigned the color closest to its neighboring pixel grids.

This is physically not feasible at current pixel densities (e.g. “rotating” pixels/pixel grids), IMHO.

In principle, it’s still just grids of 4*4, 6*6 or 8*8 pixels.

Also, when he says he needs fewer pixels, I find that he either forgot to count the ones inside the grid, or forgot that every grid now has two colors in it, making it at least two “malformed” pixels.

I imagine that simply fitting more pixels onto the same panel area would need far less processing power behind it than having to assign a rotation, a shape, and two colors to every x-by-x grid, which is still made of pixels anyway.

Much respect for the dude though.

At least he tried to break his own norm.

“As of 2012, he could be found in the occasional coffeehouse, discussing technology with anyone he could engage.”

I wish I knew a place close by where I could engage with people like that: people who generally think more deeply about problems and questions, and have interesting insights as well.

That kind of exchange would be heaven.

Sounds more like Professor Periwinkle ambushing unsuspecting patrons. https://www.imdb.com/title/tt0506597/

Or perhaps the Ancient Mariner.

Here you go—hopefully there’s one near you:

https://hackaday.io/hackerspaces

https://wiki.hackerspaces.org/

It’s pretty common to have an open house event one night a week or so, where you can hang out with technical people without needing to be a member.

In the video, he says that the resolution of its first scan is 176×176, not 76×76.

Actually, some modern texture compression techniques use a pixel shape, sort of. ETC2, BC7 and ASTC can use two or more partitions to define a shape within a 4×4 block of pixels, which can be considered a pixel shape at 1/4 resolution. Each of the two halves of the partitions uses a separate color or color line that can be thought of as two pixel colors at 1/4 resolution.
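A simplified Python sketch of the partition idea, working on grayscale values instead of colors. The four partition patterns and function names are hypothetical: real BC7 and ASTC blocks choose from fixed tables of many patterns, quantize their endpoints, and pack everything into a fixed bit budget. The sketch only shows the core step: each subset stores two endpoints, each pixel stores a 2-bit index along the line between them, and the encoder keeps the partition with the lowest error.

```python
# Hypothetical 2-subset partitions of a 4x4 block (0 or 1 = subset id).
PARTITIONS = {
    "left_right": [[0 if c < 2 else 1 for c in range(4)] for r in range(4)],
    "top_bottom": [[0 if r < 2 else 1 for c in range(4)] for r in range(4)],
    "diagonal": [[1 if c > r else 0 for c in range(4)] for r in range(4)],
    "corner": [[1 if r >= 2 and c >= 2 else 0 for c in range(4)] for r in range(4)],
}


def encode_subset(values):
    """Pick endpoints (min, max) and a 2-bit index (0..3) per value."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return lo, hi, [0] * len(values)
    return lo, hi, [round((v - lo) * 3 / (hi - lo)) for v in values]


def decode_value(lo, hi, index):
    """Interpolate between the endpoints, as a decoder would."""
    return lo + (hi - lo) * index / 3


def encode_block(block):
    """Try every partition; return (name, endpoints per subset, squared error)."""
    best = None
    for name, mask in PARTITIONS.items():
        endpoints, err = {}, 0.0
        for subset in (0, 1):
            values = [block[r][c] for r in range(4) for c in range(4)
                      if mask[r][c] == subset]
            lo, hi, idx = encode_subset(values)
            endpoints[subset] = (lo, hi)
            err += sum((v - decode_value(lo, hi, i)) ** 2
                       for v, i in zip(values, idx))
        if best is None or err < best[2]:
            best = (name, endpoints, err)
    return best


# Dark top half, bright bottom half, with a little texture in each.
block = [[(0 if r < 2 else 200) + (30 if c % 2 else 0) for c in range(4)]
         for r in range(4)]
print(encode_block(block))
```

Splitting the block along its real edge lets each subset’s short color line fit its pixels exactly, while any other partition forces one line to span both the dark and bright values.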

I drew his photo on https://pixelanarchy.online