It’s not uncommon for a new distro version to come out, and a grudging admission that maybe a faster laptop is on the cards. Perhaps after seeing this project though, you’ll never again complain about that two-generations-ago 64-bit multi-core behemoth, because [Dmitry Grinberg] (who else!) has succeeded in booting an up-to-date Linux on the most basic of processors. We’re not talking about 386s, ATmegas, or 6502s; instead he’s gone right back to the beginning. The Intel 4004 was the first commercially available microprocessor back in 1971, and now it can run Linux.

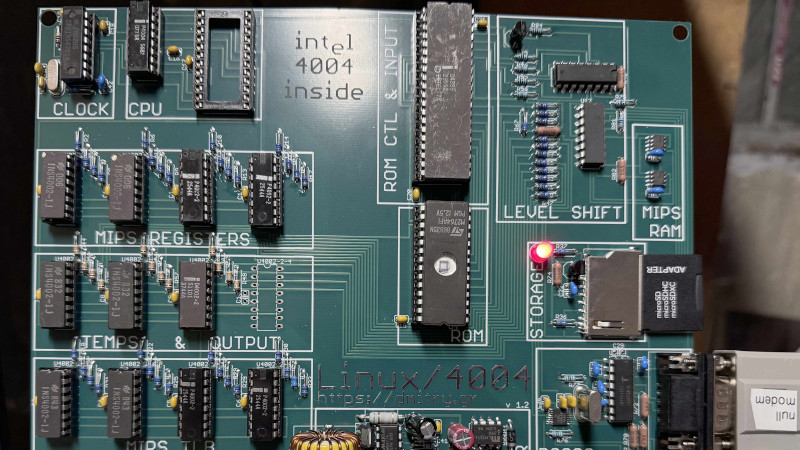

So, given the 4004’s very limited architecture and 4-bit bus, how can it perform this impossible feat? As you might expect, the kernel isn’t being compiled to run natively on such ancient hardware. Instead he’s achieved the equally impossible-sounding task of writing a MIPS emulator for the venerable silicon, and paring back the emulated hardware to what the 1970s support chips can manage when interfacing with more recent parts: RAM for the emulated MIPS, an SD card, and a VFD. The result is shown in the video below the break, and even though it’s sped up it’s clear that this is not a quick machine by any means.
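To give a rough feel for what emulating a 32-bit CPU on a 4-bit one involves, here is a minimal Python sketch of a 32-bit addition carried out one 4-bit nibble at a time. It is not taken from the project's code; the function names and the least-significant-nibble-first layout are assumptions made purely for illustration.

```python
def to_nibbles(value, count=8):
    """Split an integer into `count` 4-bit nibbles, least significant first."""
    return [(value >> (4 * i)) & 0xF for i in range(count)]


def from_nibbles(nibbles):
    """Reassemble an integer from nibbles stored least significant first."""
    return sum(n << (4 * i) for i, n in enumerate(nibbles))


def add_nibbles(a, b):
    """Add two equal-length nibble lists the way a 4-bit ALU would:
    one nibble per step, passing the carry along to the next step."""
    result = []
    carry = 0
    for x, y in zip(a, b):
        total = x + y + carry
        result.append(total & 0xF)  # keep the low 4 bits
        carry = total >> 4          # 0 or 1, fed into the next nibble
    return result, carry


def add32(a, b):
    """32-bit wrapping add built from eight 4-bit additions (hypothetical helper)."""
    nibbles, _carry_out = add_nibbles(to_nibbles(a), to_nibbles(b))
    return from_nibbles(nibbles)
```

A single emulated 32-bit add therefore costs at least eight dependent 4-bit steps before any instruction fetch or decode is counted, which hints at why the real machine is so slow.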

We’d recommend the article as a good read even if you’ll never put Linux on a 4004, because of its detailed description of the architecture. Meanwhile we’ve had a few 4004 stories over the years, and this one’s not even the first time we’ve seen it emulate something else.

“It’s not uncommon for a new distro version to come out, and a grudging admission that maybe a faster laptop is on the cards. ”

You mean a Windows distro?

Or those who think Fedora & friends is a good choice.

Damn, and there was me thinking Fedora and Friends was another cartoon I can put on my Plex server for my daughter 😁

No, but I hope you have Phineas and Ferb on the server!

B^)

Let’s not forget that even Debian based distros are no longer officially available in 32-bit compiles, never mind for 16-bit architecture. Understandable, of course, there hasn’t been a new 32-bit PC in twenty years or more, but 16-bit was the PC standard when Linux first dropped. In 1991 I was still running a 286 machine, with a whopping 4 MB of RAM and a first-gen VGA (1024×768 in 256 colors from a palette of 32,768; 256 KB VRAM), having moved RAM, video, and hard disks (40 MB x 2) from my 8088 machine in 1989. That old Laser 286, even after my upgrades, wouldn’t run any Linux distribution I’ve ever used (been Linux on my daily driver for thirteen years).

So, yes, Linux also has system spec creep — just not at the level Windows does.

On what planet has Linux ever run on a 16-bit CPU? Linux started on the fully 32-bit 80386.

There is https://en.wikipedia.org/wiki/Embeddable_Linux_Kernel_Subset though it only came about later.

But in reality, Minix would have been a better choice for desktop usage with 286.

68010 with an MMU. Something like a Sun 2.

16 bit bus, but 32 bit architecture (which is what is relevant here)

There were still plenty of 32-bit PCs being sold new in 2004. Most desktops were moving over at the end of the Pentium 4 era, but I’m not sure if there were ANY 64-bit laptop CPUs yet. The Dothan Pentium M generation that came out that spring was 32-bit only, and I’m not sure if the interim Core Solo and Core Duo processors were 64-bit, but the Core 2s that came in 2006 were definitely what pushed 64 bits into mainstream laptops.

For that matter, I’m not sure when the first Atom processors came out, but the first-gen netbooks that used them, starting with the Eee PC and running at least into 2009, were all 32-bit only.

You can still get a 32-bit installer for Debian 12 itself!

I had a 32-bit only eeePC 901 which launched in 2008, so more like 15 years ago (as I’m sure they were available new through 2009).

It famously had a factory Linux distro, making it doubly relevant.

I had an eeepc 701, it was awful but so cute.

The last time I saw such “torture” was when people ran Win95 or OS/2 on a 386/486 system with 4MB of RAM. Poor thing. 🥲

I worked on projects for IBM in the olden days. The PS/2 model 50 ran OS/2 fine with a 286 and 1MB of RAM. In late 1987, we were happy to have such a powerful processor and OS and then really excited when they added Presentation Manager the next year.

I believe you, but 1MB is really low memory. About good enough for OS/2 1.0 in text-mode.

I mean, by default, the OS/2 DOS compatibility box alone reserved 640KB, leaving 384KB to OS/2.

Which in turn required some memory to load drivers and provide an HDD cache.

So even in the late 80s, graphical OS/2 (1.1 and up) needed 2.5 to 4MB for normal operation. It did boot with less, though.

This can be read in the following magazine article, too. It’s from November 1988:

https://books.google.de/books?id=RzsEAAAAMBAJ&pg=PA113#v=onepage&q&f=false

OS/2 1.3 and 2.x shouldn’t run on anything with less than 6 to 8MB of RAM, I think.

Source: https://www.os2world.com/wiki/index.php/File:P71G5391-007.jpg

A 486 with 16 MB would be ideal, I think.

A 386DX-40 would still be “okay”, too, though.

That being said, PC users were notoriously short on memory back then.

The PCs were being sold with DOS in mind and RAM expansion was seen as unnecessary and a waste.

Users rather invested in other things. Until Windows 95 showed up, at least.

In the mid 1990’s, I ran my 386 with 4MB with multi-tasking software that allowed me to run a BBS in the background while also using Win95. It wasn’t a hotrod, but it worked tolerably well as a daily driver.

I ran Warp on a 386 with 4MB ram, used an alternate shell from the WPS, filebar or mdesk and it ran fine with some tuning

Maybe that’s true. If the memory footprint was reduced by a few hundred KBs, that makes quite a difference.

Here in Germany, in the early 90s, Vobis (and Escom) sold OS/2 Warp 3 with PCs, which was unheard of at the time. Microsoft was very angry.

Anyway, the problem was that Vobis had equipped the PCs with 4MB only.

This led to buyers being very unhappy with OS/2, so they went back to DOS6+Windows 3.11.

So the poor 4MB RAM configuration was directly responsible for OS/2’s “failure” here (some users invested in RAM and kept using OS/2).

If the PCs had been equipped with 6MB, at least, the outcome might have been very different.

Really, 4MB is barely enough, no matter what people say.

(Your special modification being an exception, I think.)

Except for Windows 3 in Standard Mode running on a 286, maybe.

Here, 4MB was the maximum configuration the average 286 chipset would handle.

Power users on Amigas and Atari STs had this amount of memory before 1990, even. Before Windows 3 was released officially (Betas date back to late 80s).

Windows 95 performed just as badly with 4MB of RAM as OS/2 did.

I saw this happen many times in the 90s.

Especially 386SX laptops and 486 laptops had 4MB of RAM back then and were being forced to run Windows 95.

It was horrible to witness. The HDDs sounded as if they were dying in pain.

But the situation is hard to explain to people who never had more than 4MB back then.

They lack the experience, simply. At least when it comes to 286/386/486 era technology.

They think it was just “normal” and never saw a 386/486 PC running OS/2 or Win95 totally smoothly, which it did if merely given a sane minimum amount of RAM!

Quite the contrary: they had been told by others that “no one ever needs 2MB of RAM”, as I heard from an acquaintance.

That’s why even Windows 3 had been called bloat-ware, initially.

Such people didn’t have 8 or 16MB of RAM before the Pentium 133 days, I suppose.

But that was 3, 4 or 5 years after OS/2 had stopped being popular.

Kudos! The Intel 4004 microprocessor has a long tradition of emulating other architectures “bigger than itself.” The very first 4004 application, the Japanese Busicom 141PF, used a byte code interpreter to emulate a desktop calculator FSM originally designed for hardware that was impractical to implement as a chip set in 1970.
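For readers unfamiliar with the idea, a byte code interpreter is a small loop that fetches an opcode, dispatches on it, and repeats, so a simple CPU can behave like a different machine. The Python sketch below is a generic illustration only: the opcodes `PUSH`, `ADD`, `MUL` and `HALT` are invented here and are not the Busicom 141PF's actual byte code.

```python
# Hypothetical opcodes for a toy stack machine (not the Busicom encoding).
PUSH, ADD, MUL, HALT = range(4)


def run(program):
    """Interpret a flat list of opcodes and operands; return the final stack."""
    stack = []
    pc = 0  # program counter into the byte code
    while True:
        op = program[pc]
        pc += 1
        if op == PUSH:
            stack.append(program[pc])  # operand follows the opcode
            pc += 1
        elif op == ADD:
            b, a = stack.pop(), stack.pop()
            stack.append(a + b)
        elif op == MUL:
            b, a = stack.pop(), stack.pop()
            stack.append(a * b)
        elif op == HALT:
            return stack
        else:
            raise ValueError(f"unknown opcode {op} at position {pc - 1}")


# (2 + 3) * 4 expressed as byte code
example = [PUSH, 2, PUSH, 3, ADD, PUSH, 4, MUL, HALT]
```

The host only ever executes the fetch-and-dispatch loop; everything the "bigger" machine does lives in the byte code it is fed.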

The magic is in the software, though.

I wouldn’t be surprised if some hardcore coder would run an emulator on an MC14500.

It’s not the hardware that’s to be admired, but solely the programmer, the individual, who had made the magic happen.

The greatest “hardware” is the human mind, not a hyped piece of molten sand such as 4004 or 6510.

Almost Too Easy.

Emulate a 4004 with an MC14500 and…… :)

-G.

Emulate a 4004 with a UE14500 (Usagi Electric’s Vacuum Tube recreation of an MC14500, this time with an ALU).

It’ll make emulation easier, but the vacuum tube computer runs at speeds measured in Hertz or maybe a few kHz.

:-D

I think that you can implement a computer of any arbitrary word length using an MC14500B, since it’s the external components that determine this. Which means that you should be easily able to make a Linux machine from it that will greatly outperform a 4004.

But saying that only the software is made of magic is simplistic. You don’t think that there is magic in a mechanical calculator that needs no software? There is nothing in hardware to be admired? Just try desk testing* a piece of software, and tell me there’s no magic when you run it again on the hardware.

*In case you’re unfamiliar, desk testing is emulating the hardware using pencil and paper as memory and registers, and your brain as the ALU.

Whether the logic is etched in metal or electrons, it’s all beautiful.

Awesome article, it’s a treasure trove of hack knowledge :-)

It’s also kind of a paradox.

Normally, computers work on a timescale that’s smaller than that of humans.

But thanks to Linux and the MIPS emulator, it’s the opposite here.

We can make inputs on the keyboard quicker than the computer can process them.

If you typed something at normal speed, the keyboard queue would soon run out of buffers. It makes the computer look as if it has the reaction time of a houseplant.

This is very fascinating, like a time lapse. Linux makes it possible. 🙂👍

I once used a computer that you could completely freeze just by rolling the trackball on its console. This was a drum-based Philco AN/FYQ-9, which was used to track aircraft on a RADAR screen, indicating their bearings and speeds. The trackball was used to move a cursor to the current position of an aircraft, and once you did this at least twice for a target, it would extrapolate the course of that target over time. But if you just kept moving the ball, it would never have time to update the display. Circa 1964.
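The extrapolation described here can be sketched as simple dead reckoning from two position fixes. This is only an assumed straight-line, constant-speed model in Python; how the AN/FYQ-9 actually computed its tracks is not stated above, and the function name and tuple layout are hypothetical.

```python
def extrapolate(fix_a, fix_b, t):
    """Predict an (x, y) position at time t from two earlier fixes.

    Each fix is a (time, x, y) tuple. Assumes constant velocity between
    and after the fixes, which is an illustrative simplification.
    """
    (t0, x0, y0), (t1, x1, y1) = fix_a, fix_b
    if t1 == t0:
        raise ValueError("fixes must be taken at different times")
    vx = (x1 - x0) / (t1 - t0)  # velocity along x
    vy = (y1 - y0) / (t1 - t0)  # velocity along y
    return (x1 + vx * (t - t1), y1 + vy * (t - t1))
```

With only one fix there is no velocity to work from, which matches the need to mark a target at least twice before a course could be projected.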

Next, port it to the ENIAC

Insane work! I wonder if somebody made Linux boot on a computer made out of 74xx logic ICs… Maybe I should not write this as Mr Grinberg will take the challenge…

My current plan is to beat this record every decade. Prospective next four steps: one bit industrial controller, 74xx, transistors only, vacuum tubes only.

Well, Magic-1 is built from 74xx ttl chips and runs Minix. Close enough?

Make it emulate a Beowulf cluster, and the ancient circle of snark will be complete.

What? No 555?

So, I guess it is up to me to ask…

“Does it run Doom?”

Yea. At 0.17FPY

B^)

Might be one of the few instances in which the answer to that question is “no.” I’m not sure… Google search is such dogwater now that it doesn’t give anything relevant. I wonder if emulating Linux on it might be a step toward running the first Doom 4004 port, at a ludicrously low clock speed of course.

Two nibble [4 bits] floating point example used to point out accuracy issues with AI floating point arithmetic accelerators exposed by Guido Appenzeller in AI hardware, explained.

But this will not stop the AI industry?

Three Mile Island nuclear plant will reopen to power Microsoft data centers

A supported 16 bit version of Linux is called ELKS (reduced Linux kernel) https://github.com/ghaerr/elks

It even runs a reduced version of Doom called Elks Doom: https://github.com/FrenkelS/elksdoom

“you’ll never again complain about that two-generations-ago 64-bit multi-core behemoth”

Typing this from a >ten years old laptop, fairly weak i3 core, with many browser tabs open and ffmpeg converting a video in the background and a CAD program open, and no appreciable slowness at all to complain about. Linux Mint is good.

“Linux Mint is good.”

And the no.1 distro used by middle-aged men that ran off from Windows.

That’s at least my experience here. I know of a few Windows users who didn’t want to see Windows 10 anymore, but don’t like using a Linux-like Linux, either.

So they all end up with Linux Mint, eventually.

If Lindows was still being sold, they perhaps would use this instead. Or Zeta, maybe. ;)

Linux Mint is a good transition distro. I usually recommend it. I also recommend Kubuntu. Since I run it everywhere on all my systems (except RPi SBCs), I switched my dad over to it and it was easy for him to pick up as well. It has been great. I get very few ‘maintenance’ calls now.

I can’t imagine the ‘patience’ of trying to bring up Linux (in emulation even) on a 4004…. Just because…

MC14500B next!

I appreciate the technical prowess Dmitry demonstrates by hosting his MIPS emulator on a 4004. That said [ was everything before a lie? ;-) ], I briefly hoped for a 4-bit Linux workalike without that extra level of indirection… getting a bit hand-wavy to suggest limiting the indirection to some macros for emulating 16-bit operations, since we can’t “reflash” the 4004’s microcode.

Microcode? Looking for that in a 4004 is like looking for an ECU in a model T.

This comment is a few weeks late, my bad

The 4004 was entirely hardwired internally without any microsequenced portions. Because of that, it doesn’t contain any microcode. It wasn’t long before they did start doing it, but a few generational steps needed to happen.